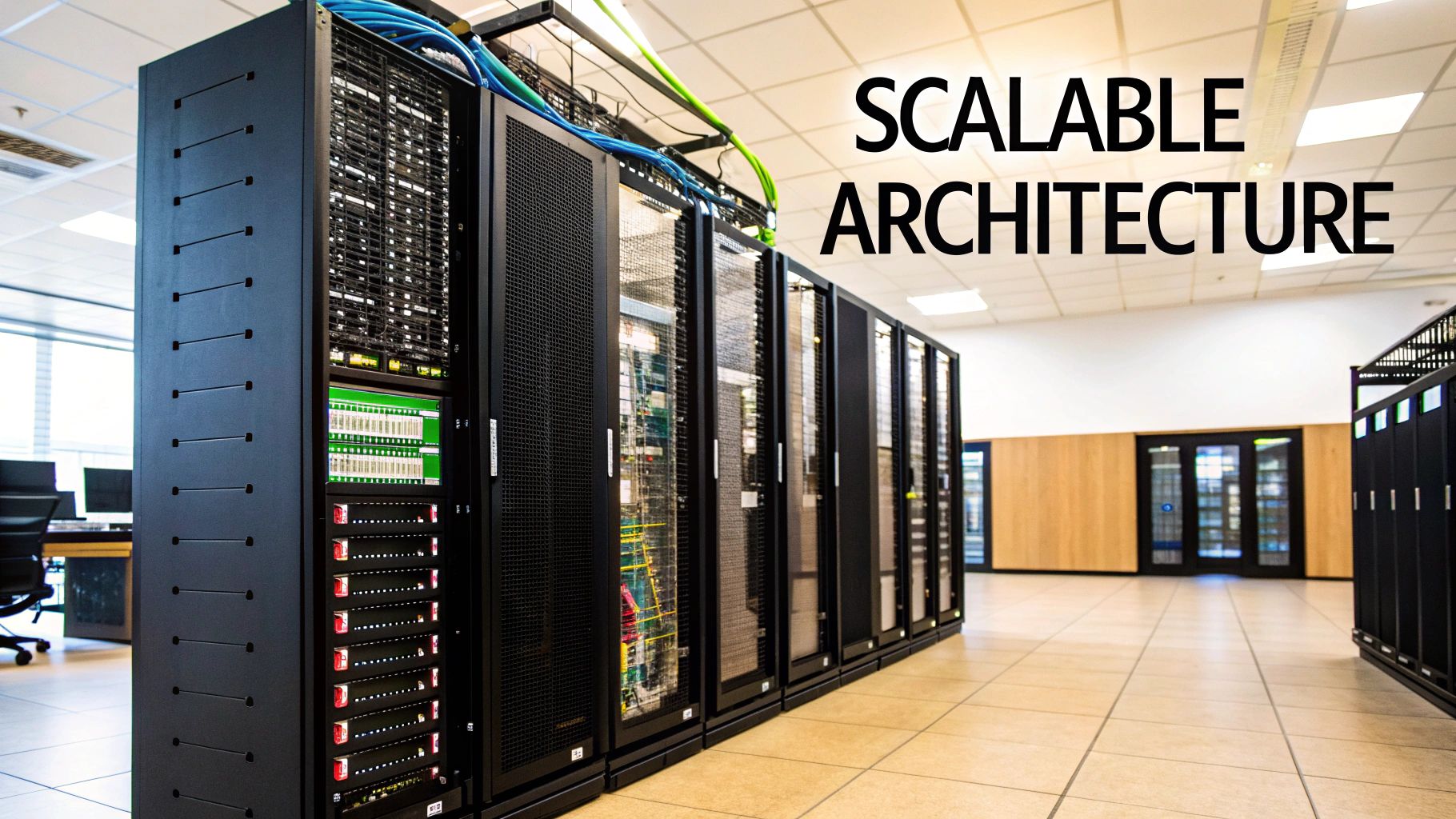

Understanding Cloud Architecture: Your Digital Transformation Foundation

This diagram shows the core parts of a typical cloud setup and how services, databases, and networking work together, including how data flows through the system. That interconnectedness is the source of the flexibility and scalability a well-designed cloud architecture offers.

Imagine swapping a rigid structure for a dynamic, interconnected ecosystem. That's the core of moving to the cloud. It's not just about hosting your apps somewhere new; it's about fundamentally changing how your tech infrastructure works and designing a system that can adapt and grow along with your business.

Beyond the Lift and Shift: A Mindset Change

Many businesses start their cloud journey with a "lift and shift" approach. They simply recreate their existing infrastructure in the cloud. But this often misses the real power of the cloud. It's like moving your entire office to a new building without changing how you work. New address, same old habits.

Truly adopting the cloud requires a change in thinking: building cloud architecture design principles such as scalability, security, and cost optimization in from the beginning. This means designing apps as interconnected services that can scale independently, building in strong security to protect your data, and using your resources efficiently to keep costs down. You might want to explore hybrid cloud architectures further.

The Indian Cloud Landscape: A Growth Story

This new approach to IT infrastructure is rapidly gaining popularity in India, where businesses increasingly use cloud-native design to improve scalability and efficiency. As of 2023, the Indian cloud market was projected to grow rapidly, fueled by the adoption of cloud services among companies of all sizes. Much of this growth is linked to the advantages of cloud-native architecture, which builds applications as interconnected microservices. This structure improves fault tolerance and can reduce costs. Discover more insights here.

Adopting these principles isn't just a tech task; it's a strategic move for businesses that want to succeed. By going cloud-native, companies can unlock the full potential of the cloud and achieve greater agility, resilience, and cost savings. This understanding sets the stage for exploring these key principles in more detail, beginning with scalability.

Mastering Scalability: Building Systems That Grow With You

Imagine your infrastructure as a bustling Diwali mela. Sometimes you have a small, intimate gathering; other times, you're hosting a massive crowd. Your systems need to handle both extremes seamlessly. That adaptability is the essence of scalability, the first of the cloud architecture design principles we'll explore.

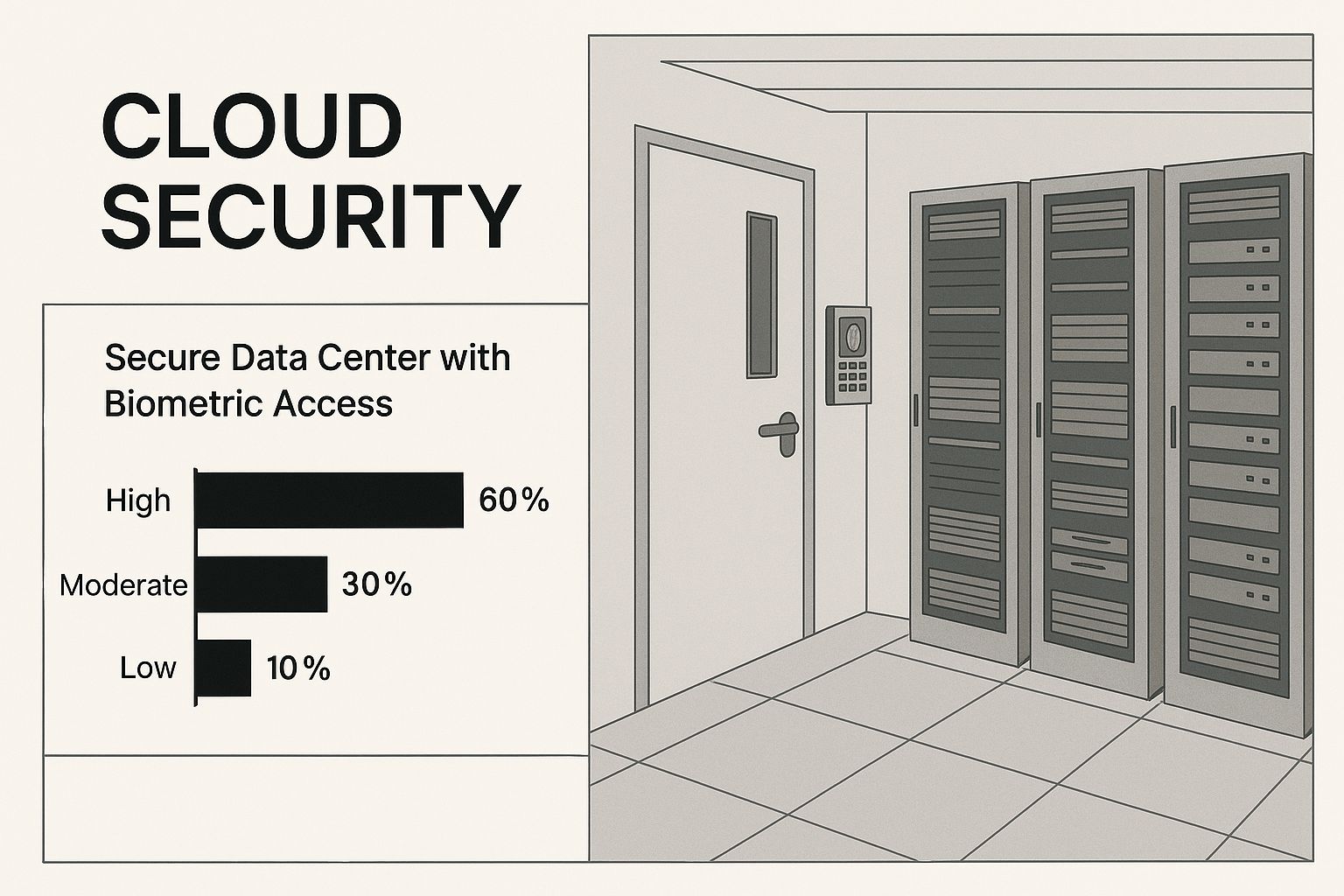

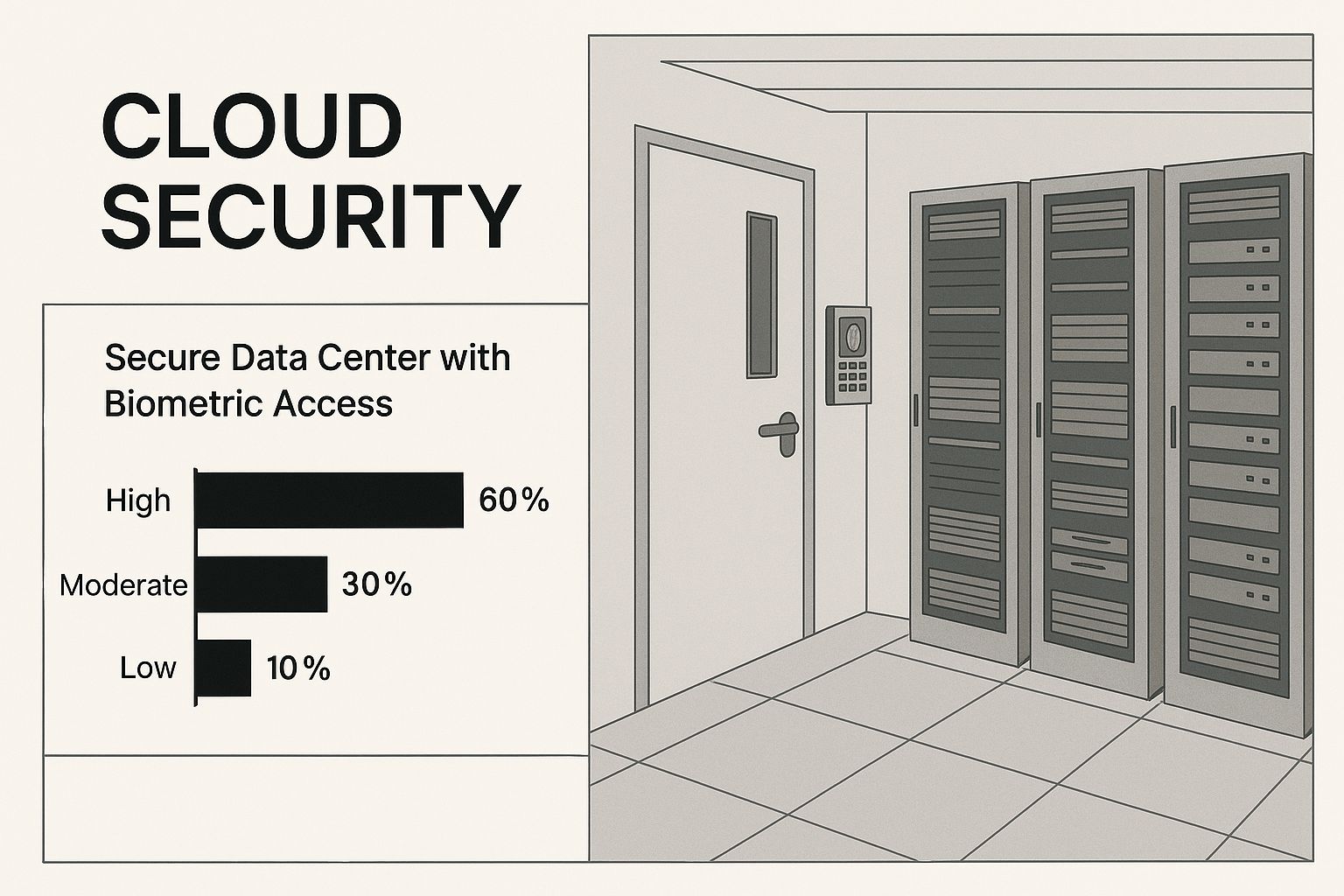

Just as a data center might use biometrics for access control, cloud security measures ensure only authorized users can access resources, even as those resources scale. This is critical for maintaining control and preventing breaches as your system grows. Think of it as having robust security at your mela, no matter how large the crowd becomes.

Scaling Up vs. Scaling Out: Choosing the Right Approach

Many organizations grapple with the challenge of either over-provisioning (paying for resources they don't use) or under-provisioning (not having enough resources to meet demand). This is where understanding the difference between scaling up and scaling out becomes essential.

Scaling up is like upgrading your existing food stalls at the mela – giving them bigger grills, more cooking surfaces, and faster equipment. You're increasing the capacity of individual components. Scaling out, on the other hand, involves setting up more food stalls. You're adding more individual units to handle the increased demand.

Let's illustrate these approaches with a simple comparison table:

Horizontal vs. Vertical Scaling Comparison: A detailed comparison of scaling approaches, their benefits, limitations, and ideal use cases

| Scaling Type | Implementation | Benefits | Limitations | Best Use Cases |
|---|---|---|---|---|
| Vertical (Scaling Up) | Increasing the resources (CPU, RAM, storage) of a single machine. | Simple to implement, no code changes required. | Limited by the maximum capacity of a single machine, single point of failure risk. | Applications with predictable workloads, small to medium-sized databases. |
| Horizontal (Scaling Out) | Adding more machines to the system. | High availability, fault tolerance, handles large traffic spikes effectively. | More complex to implement, may require code changes for distributed systems. | Applications with fluctuating workloads, large databases, high-traffic websites. |

As you can see, each scaling approach has its strengths and weaknesses. Choosing the right approach depends on the specific needs of your application.

This screenshot from AWS documentation shows how auto-scaling works.

The Power of Auto-Scaling Groups

Auto-scaling groups are a fundamental component of scalable cloud architectures. Think of them as the smart manager for your mela, constantly monitoring the crowd size and adjusting the number of food stalls accordingly. They automatically add or remove resources based on predefined rules, ensuring your infrastructure can handle unexpected demand spikes without manual intervention.
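The "smart manager" logic can be sketched in a few lines. This is a simplified, proportional take on a target-tracking rule, assuming load spreads evenly across instances. It is not the exact algorithm any cloud provider uses, and the function name, the 50% CPU target, and the 2 to 20 instance bounds are hypothetical examples.

```python
import math


def desired_capacity(current_instances: int, current_cpu_pct: float,
                     target_cpu_pct: float = 50.0,
                     min_size: int = 2, max_size: int = 20) -> int:
    """Hypothetical target-tracking rule: size the group so average CPU lands near the target.

    Assumes load is spread evenly, so total load ~= instances * average CPU.
    """
    if current_instances <= 0:
        return min_size
    # How many instances would carry today's total load at the target utilization?
    needed = math.ceil(current_instances * current_cpu_pct / target_cpu_pct)
    # Never go below or above the group's configured bounds.
    return max(min_size, min(max_size, needed))
```

During a festive-sale spike, for example, 4 instances running at 90% CPU would be asked to grow to 8. After the rush, 10 instances idling at 10% would shrink back to the minimum of 2.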

For instance, an e-commerce platform can configure auto-scaling to absorb traffic surges during a festive sale, keeping the customer experience smooth even under heavy load.

Load Balancers, Containers, and Serverless Functions: Building Flexible Systems

Beyond scaling up and out, other technologies play vital roles in building flexible and scalable systems:

- Load balancers: These distribute incoming traffic efficiently across multiple servers, preventing any single server from being overwhelmed. Imagine directing mela attendees to different food stalls to avoid long queues at any one stall.

- Containers: These package applications and their dependencies into portable units, making deployment and scaling faster and more efficient. Think of them as pre-fabricated food stalls that can be easily set up and taken down as needed.

- Serverless functions: These allow you to run code without managing servers, taking scalability to the next level. It's like hiring caterers for your mela – they handle the food preparation and serving, and you only pay for the services you use.
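To make the load balancer idea concrete, here is a minimal round-robin sketch that skips unhealthy servers. Real load balancers also support other algorithms (least connections, weighted routing) and run their own health checks. The class and method names below are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical minimal balancer: rotates through backends, skipping unhealthy ones."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        # Called when a health check fails.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        # Called when a health check passes again.
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Try each backend at most once per request.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

With `RoundRobinBalancer(["stall-a", "stall-b", "stall-c"])`, requests go to each stall in turn. After `mark_down("stall-b")`, traffic flows only to the remaining two stalls.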

These components, working together, create systems that dynamically adapt to your business needs. They allow you to focus on delivering value – improving the experience for your "mela attendees" – rather than worrying about the underlying infrastructure. As your business grows, your systems seamlessly scale to meet the increasing demand.

Security-First Thinking: Protecting What Matters Most

This image illustrates the multifaceted nature of cloud security, highlighting the layered approach necessary for protecting valuable data. Notice how interconnected the different security components are, from identity management to threat detection. They all work together to create a comprehensive security posture. This big-picture perspective is essential when designing cloud architectures.

Traditional security works like a medieval fortress: thick walls, a single gate, and the hope that nobody gets in. Cloud security requires a different strategy. Think of it more like a secure embassy, with layers of checks, constant identity verification, and proactive threat monitoring. This is where the smart implementation of cloud architecture design principles truly makes a difference.

The "Assume Breach" Mentality: Not Pessimism, But Preparedness

Rather than hoping for the best, security-first thinking starts by preparing for the worst. This "assume breach" mentality isn’t about pessimism; it's about pragmatism. It means designing your architecture with the understanding that breaches are a possibility and building in mechanisms to limit the damage. This approach moves beyond simple prevention to include rapid detection, containment, and recovery.

For example, imagine someone manages to bypass your initial defenses. Micro-segmentation acts like internal bulkheads in a ship, limiting the attacker’s access and preventing them from moving freely within your system to reach sensitive data. This principle helps you build a much more resilient system that acknowledges the realities of today’s security threats.
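At its core, a micro-segmentation policy is a default-deny allow-list of which segments may talk to which, and on which port. The sketch below shows that idea in isolation. The segment names and ports are hypothetical. In a real cloud setup, the same rules would be expressed in security groups or network policies, not application code.

```python
# Hypothetical segments and ports, for illustration only.
# Anything not listed here is denied by default.
ALLOWED_FLOWS = {
    ("web", "app", 8080),  # web tier may call the app tier's API
    ("app", "db", 5432),   # only the app tier may reach the database
}


def is_allowed(src_segment: str, dst_segment: str, port: int) -> bool:
    """Default deny: a flow is permitted only if it is explicitly listed."""
    return (src_segment, dst_segment, port) in ALLOWED_FLOWS
```

Under this policy, an attacker who compromises a web server still cannot reach the database directly. `is_allowed("web", "db", 5432)` is `False`, so they would also have to break through the app tier.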

Encryption: Turning Data Into Unreadable Puzzles

Even if a breach does occur, encryption turns your data into ciphertext that is unreadable without the decryption keys. As long as those keys stay protected, stolen data is useless to an attacker. It's like having a secure vault within your "embassy," safeguarding your most valuable information. Encryption is a core component of robust cloud architecture design principles.

The increasing adoption of cloud architecture in India is largely driven by the need for both cost efficiency and security. Businesses are leveraging cloud architecture design principles to optimize their infrastructure costs. A 2022 report showed that roughly 60% of Indian cloud users consider security a primary concern, emphasizing the need for strong security integration. You can find more on this topic here. Also, you might find our guide on cloud security and compliance useful.

Shared Responsibility: Understanding Who Protects What

Cloud security operates on a shared responsibility model. Think of it this way: your cloud provider, like AWS, secures the building (the underlying infrastructure), while you are responsible for securing your own office within that building (your data and applications).

This model clearly defines who is responsible for which aspects of security, allowing you to focus on the security measures specific to your applications and data, with the confidence that the foundational infrastructure is secured by your cloud provider.

Balancing Security and User Experience

While strong security is critical, it shouldn’t come at the expense of user experience. Nobody wants to work in an embassy with endless security checkpoints just to get to their desk. The aim is to create a secure system that’s also user-friendly.

This means implementing security measures that are both effective and unobtrusive. For instance, multi-factor authentication provides an added layer of security without significantly impacting user access. Finding the right balance between security and usability is a key element of effective cloud architecture design.
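Many authenticator apps generate their codes using time-based one-time passwords (TOTP, RFC 6238). That is part of why MFA adds little friction: the user just types a 6-digit code. Below is a minimal sketch of the HMAC-SHA1 variant using only Python's standard library. It assumes the shared secret is already available as raw bytes; real apps usually exchange it base32-encoded, which `base64.b32decode` can turn into bytes. The `verify` helper and its one-step drift window are illustrative choices.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, for_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # number of `step`-second windows since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F       # dynamic truncation from RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, for_time=None, step: int = 30,
           digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current window and `window` steps either side (clock drift)."""
    now = time.time() if for_time is None else for_time
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step, digits), submitted)
        for i in range(-window, window + 1)
    )
```

The small drift window is the usability trade-off in action. It accepts a code typed a few seconds late instead of forcing the user to retry.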

Smart Cost Management: Getting Maximum Value From Every Dollar

Cloud costs can sometimes feel like a nasty surprise. But it's not that the cloud itself is inherently expensive. The problem often lies in approaching cloud spending with an outdated mindset. Imagine switching to ride-sharing but continuing to pay for a dedicated parking spot—it doesn't make much sense, does it? Smart cloud architecture design principles can transform cost optimization into a real business advantage.

This screenshot from the AWS Cost Management page showcases the variety of tools and services AWS offers to manage and optimize cloud spending. The layout emphasizes a comprehensive approach to cost control, with features like cost anomaly detection, budgeting, and cost allocation tagging. It illustrates how heavily cloud providers invest in helping customers understand and manage their cloud expenses.

Hidden Costs and Unexpected Expenses: Navigating the Cloud Billing Landscape

Many organizations are caught off guard by hidden costs like data transfer fees. These seemingly small fees can quickly accumulate, especially for applications that handle a lot of data. Think of them like toll charges on your ride-sharing trips—small individually, but they can definitely add up over time. Another common oversight is storage. Different storage classes have different costs, and picking the wrong one can lead to unnecessary spending. It's a bit like choosing premium parking when all you really need is street parking.
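A quick estimate shows how those "toll charges" accumulate. The sketch assumes a tiered per-GB data transfer price. The tier sizes and rates below are hypothetical placeholders, not any provider's actual pricing, so check your provider's current price list before relying on the numbers.

```python
# Hypothetical tiered egress prices in USD per GB; real rates vary by provider and region.
EGRESS_TIERS = [
    (10_240, 0.09),        # first 10 TB per month
    (40_960, 0.085),       # next 40 TB
    (float("inf"), 0.07),  # everything beyond 50 TB
]


def monthly_egress_cost(gb: float) -> float:
    """Walk through the tiers, charging each slice of traffic at its tier's rate."""
    cost, remaining = 0.0, gb
    for tier_size, price in EGRESS_TIERS:
        used = min(remaining, tier_size)
        cost += used * price
        remaining -= used
        if remaining <= 0:
            break
    return round(cost, 2)
```

At these assumed rates, 1,000 GB a month costs about $90. 20 TB costs roughly $1,792, a figure that is easy to miss if nobody is watching egress.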

Reserved instances can offer substantial savings for workloads that are predictable. Think of it like buying season tickets for your favorite sports team—cheaper per game if you know you'll be attending regularly. But reserved instances do require commitment and careful planning. Over-provisioning reserved instances can actually wipe out any cost benefits you were hoping for.
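Whether a reservation pays off comes down to a simple break-even calculation. A reservation is billed for every hour of its term, while on-demand capacity is billed only for the hours you use. The sketch below assumes a flat effective hourly rate for the reservation. The prices in the usage note are hypothetical.

```python
def breakeven_utilization(on_demand_hourly: float, reserved_effective_hourly: float) -> float:
    """Fraction of hours an instance must run for the reservation to beat on-demand."""
    return reserved_effective_hourly / on_demand_hourly


def annual_savings(on_demand_hourly: float, reserved_effective_hourly: float,
                   utilization: float, hours: int = 8760) -> float:
    """Positive = the reservation saves money; negative = it costs more than on-demand."""
    on_demand_cost = on_demand_hourly * hours * utilization  # pay only for hours used
    reserved_cost = reserved_effective_hourly * hours        # pay for every hour of the term
    return on_demand_cost - reserved_cost
```

With a hypothetical $0.10/hour on-demand rate and $0.06/hour effective reserved rate, the break-even point is 60% utilization. Running the instance around the clock saves about $350 a year. Running it only half the time loses about $88, which is exactly the over-provisioning trap.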

Right-Sizing Resources: Finding the Perfect Fit for Your Workloads

Right-sizing your resources is all about matching them to your actual needs, like choosing the right-sized vehicle for a trip. A small car is perfect for a short commute, while a larger vehicle is necessary for a family vacation. Similarly, a small virtual machine might be sufficient for a low-traffic application, while a more powerful instance is needed for demanding tasks. Regularly reviewing and adjusting your resource allocation is key to optimizing costs.
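One common right-sizing heuristic looks at a high percentile of observed CPU usage, not the average. It then picks the smallest size that keeps that peak under a comfortable ceiling. The sketch below assumes CPU samples as percentages of the current instance. The instance catalog and the 70% headroom threshold are hypothetical.

```python
import math

# Hypothetical instance catalog: (name, vCPUs), ordered smallest first.
CATALOG = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def p95(values):
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def recommend_size(current_vcpus: int, cpu_samples_pct, headroom: float = 0.7) -> str:
    """Smallest size whose capacity keeps p95 usage at or below `headroom` of its vCPUs."""
    used_vcpus = current_vcpus * p95(cpu_samples_pct) / 100
    for name, vcpus in CATALOG:
        if used_vcpus <= vcpus * headroom:
            return name
    return CATALOG[-1][0]  # already at the largest size we know about
```

A 16-vCPU machine that rarely exceeds 10% CPU is doing about 1.6 vCPUs of work, so this heuristic suggests "medium". A 4-vCPU machine pinned at 90% would be moved up to "large".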

Picking the right storage class is crucial, too. Frequently accessed data should live in faster, more expensive storage, while less frequently used data can reside in cheaper, slower options. It's like organizing your closet—everyday clothes are readily accessible, while seasonal items are tucked away. This strategic approach to storage management is essential for cost optimization.

Automating Cost Optimization: Lifecycle Policies and Scheduled Scaling

Lifecycle policies can automate the optimization process over time. These policies can automatically delete old snapshots, archive infrequently accessed data, or even shut down unused instances during off-peak hours. Think of it like setting up automatic payments for your bills—it saves time and ensures you don't miss a payment. Scheduled scaling allows you to adjust resources based on predictable demand patterns. You might scale up resources during peak business hours and scale down during quieter periods.
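Both ideas reduce to simple rules evaluated on a schedule. The sketch below is loosely modeled on object-storage lifecycle rules (age-based transitions and expiry) plus a business-hours capacity schedule. The thresholds, action names, and instance counts are hypothetical. Real policies are configured in your provider's lifecycle and scheduled-scaling settings, not hand-written code.

```python
from datetime import date

# Hypothetical rules, checked from the oldest threshold down.
LIFECYCLE_RULES = [
    (365, "delete"),             # older than a year: expire
    (90, "archive"),             # older than 90 days: move to archival storage
    (30, "infrequent-access"),   # older than 30 days: move to a cheaper tier
]


def lifecycle_action(last_modified: date, today: date) -> str:
    """Return what a lifecycle policy would do with an object of this age."""
    age_days = (today - last_modified).days
    for threshold, action in LIFECYCLE_RULES:
        if age_days >= threshold:
            return action
    return "keep"


def scheduled_capacity(hour: int) -> int:
    """Hypothetical schedule: more instances during business hours (09:00-18:00)."""
    return 10 if 9 <= hour < 18 else 3
```

For example, an object last modified 60 days ago would move to the infrequent-access tier, and a two-year-old snapshot would be deleted.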

The following table summarizes some key cloud cost optimization strategies and their potential impact:

| Strategy | Potential Savings | Implementation Effort | Risk Level | Timeline |
|---|---|---|---|---|
| Right-sizing instances | 10-30% | Low | Low | Immediate |
| Reserved Instances | Up to 75% | Medium | Medium | Long-term |
| Deleting idle resources | 5-15% | Low | Low | Immediate |
| Optimizing storage classes | 10-40% | Medium | Low | Short-term |
| Auto-scaling | 20-50% | Medium | Medium | Short-term |
| Spot Instances | Up to 90% | High | High | Short-term |

This table provides a quick overview of different cost-saving tactics, ranging from simple adjustments like right-sizing instances to more complex strategies like using spot instances. It also highlights the trade-offs between potential savings, implementation effort, and risk.

These automated approaches help ensure continuous cost optimization, freeing you to focus on your core business objectives. They build cost optimization into the foundation of your cloud architecture, making it an integral part of your overall cloud strategy rather than an afterthought.

Building Bulletproof Reliability: Systems That Never Let You Down

Reliability in cloud architecture isn't about preventing every single failure. It's about building systems so resilient they can gracefully handle failures without the user ever noticing. Think of it like a well-designed subway system. Even if one line experiences a delay, the other lines and alternative routes keep people moving, minimizing the overall impact.

This screenshot from the AWS reliability page perfectly illustrates this concept. It highlights various aspects of building dependable systems, from handling faults to recovering from disasters. The key takeaway? Reliability is not an afterthought. It's a core design principle woven into every layer of the cloud architecture.

Designing for Failure: Embracing the Inevitable

Rather than aiming for flawless individual components, designing for failure accepts that failures will occur and prepares accordingly. This principle is the cornerstone of cloud architecture design. Imagine a popular social media platform that stays online during a major data center outage thanks to multi-region deployments. Because its data and services are distributed across multiple geographic locations, the other regions take over seamlessly when one region fails.

Similarly, a financial institution maintained operations during a regional internet outage thanks to robust database replication. Maintaining real-time copies of their database in different regions allowed them to continue operating even when their primary region became inaccessible.
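The routing decision at the heart of multi-region failover can be sketched as "send traffic to the highest-priority region that is currently healthy." In practice, DNS failover or a global load balancer makes this decision based on health checks. The sketch only shows the logic. The region list is an example, and the health data is assumed to come from your own monitoring.

```python
# Example region names in priority order (primary first); adjust to your deployment.
REGIONS = ["ap-south-1", "ap-southeast-1", "eu-west-1"]


def pick_region(health: dict) -> str:
    """Return the first healthy region in priority order.

    `health` maps region name -> bool and is assumed to come from external health checks.
    Regions missing from the map are treated as unhealthy.
    """
    for region in REGIONS:
        if health.get(region, False):
            return region
    raise RuntimeError("no healthy region available")
```

If the primary region goes dark, `pick_region({"ap-south-1": False, "ap-southeast-1": True})` returns the secondary. This only works if the secondary already holds an up-to-date replica of the data, which is why replication and multi-region routing go hand in hand.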

Circuit Breakers: Preventing Cascading Failures

Circuit breakers are essential for stopping small issues from snowballing into widespread outages. Just like a circuit breaker in your home prevents electrical surges from causing damage, circuit breakers in software systems prevent cascading failures. They monitor the health of different services and temporarily isolate failing components to prevent them from impacting other parts of the system.

Let's say a payment gateway starts experiencing intermittent problems. A circuit breaker can temporarily redirect traffic to a backup gateway, preventing payment failures across the entire platform. This localized containment minimizes disruption and buys time for the primary gateway to recover without affecting the overall system.
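Here is a minimal sketch of the common closed/open/half-open circuit breaker pattern applied to that payment example. After `max_failures` consecutive errors, the breaker "opens" and sends calls straight to the fallback. Once `reset_after` seconds have passed, it lets one trial call through to the primary. The class is hypothetical; `primary` and `fallback` stand in for calls to the main and backup gateways. The clock is injectable so the behavior can be tested.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: opens after `max_failures` consecutive errors,
    routes calls to a fallback while open, and retries the primary after `reset_after` s."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, primary, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: don't even try the failing service
            # Half-open: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0  # success closes the circuit fully
        return result
```

For example, `breaker.call(charge_via_primary, charge_via_backup)` keeps payments flowing through the backup while the primary gateway recovers. Here `charge_via_primary` and `charge_via_backup` are hypothetical functions.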

Chaos Engineering: Deliberately Breaking Things to Build Resilience

Chaos engineering is a surprisingly effective approach to improving reliability. It involves intentionally introducing failures into your system to identify weaknesses. Think of it as a controlled experiment, like a fire drill for your cloud infrastructure. It might seem counterintuitive, but it ultimately strengthens your system's ability to handle unexpected events.

These controlled experiments help companies uncover and address potential vulnerabilities before they become real problems. By embracing chaos engineering, organizations gain invaluable insights into how their systems behave under pressure. This, combined with multi-region deployments and database replication, creates systems that actually become stronger, not weaker, when faced with challenges.
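A tiny fault-injection experiment captures the spirit of chaos engineering. Wrap a dependency so a fraction of calls fail on purpose, then check whether your resilience mechanism (here, a simple retry) keeps the overall success rate acceptable. Dedicated chaos tools inject faults at the infrastructure level. This in-process sketch and its function names are hypothetical and only illustrate the experiment's structure.

```python
import random


def with_chaos(func, failure_rate=0.1, rng=None):
    """Wrap `func` so roughly `failure_rate` of calls raise an injected failure."""
    rng = rng if rng is not None else random.Random()

    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise ConnectionError("chaos: injected failure")
        return func(*args, **kwargs)

    return wrapper


def call_with_retry(func, attempts=3):
    """The resilience mechanism under test: retry transient connection errors."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except ConnectionError as err:
            last_error = err
    raise last_error


def success_rate(func, trials=1000):
    """Run the experiment: what fraction of retried calls ultimately succeed?"""
    ok = 0
    for _ in range(trials):
        try:
            call_with_retry(func)
            ok += 1
        except ConnectionError:
            pass
    return ok / trials
```

With a 30% injected failure rate and three attempts, roughly 97% of calls should still succeed, since 0.3³ ≈ 2.7% fail all three. A much lower measured rate would point to a weakness worth fixing before a real outage finds it.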

Performance Excellence: Speed That Drives Business Results

Performance optimization in cloud architecture is a bit like tuning a race car: every millisecond you shave off response times can affect your bottom line. A one-second delay in page load time is often cited as causing roughly a 7% drop in conversions. Let's explore how leading companies build systems that deliver fast experiences to users around the world.

Content Delivery and Database Optimization: Two Sides of the Same Coin

Imagine you're running a popular food truck business. You wouldn't cook everything in one central kitchen and then drive it all over town, would you? That would take forever! Instead, you'd probably have several smaller kitchens strategically located to serve different areas.

That's essentially what a Content Delivery Network (CDN) does. A CDN caches your application's content at edge locations closer to your users, reducing latency and improving response times. Those edge locations are the smaller, localized kitchens, serving your content quickly to nearby users.

Database optimization, on the other hand, is like streamlining your food prep process inside the truck itself. Slow database queries are like bottlenecks in your workflow. Optimizing these queries—perhaps by adding indexes or choosing a more suitable database technology—is like making your kitchen run smoothly, ensuring orders fly out the window.
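You can watch an index remove a bottleneck using SQLite from Python's standard library. The sketch builds a hypothetical `orders` table and asks SQLite for its query plan before and after adding an index on `customer_id`. Without the index, SQLite has to scan the whole table. With it, SQLite can jump straight to the matching rows. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

# Hypothetical orders table with 10,000 rows spread across 500 customers.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

query = "SELECT * FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))
    return " ".join(str(row[-1]) for row in rows)


before = plan(query)  # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # lookup via idx_orders_customer

print("before:", before)
print("after: ", after)
```

The same "check the plan, then add the index" habit applies to managed databases in the cloud. Most engines offer an `EXPLAIN` equivalent.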

Real-World Examples: Speed That Translates to Sales

Think about an online retailer that managed to boost sales by 15% just by optimizing their checkout process. By making it faster and easier for customers to complete their purchases, they directly impacted their revenue.

Or consider a software company that saw a significant drop in customer churn after improving its application's responsiveness. A faster, snappier application made for happier users and higher retention rates. Examples like these illustrate the close link between performance and business success.

Caching Strategies and User Experience Metrics: Focusing on What Matters

Caching strategies are like having a "fast lane" for your most popular menu items. You keep the ingredients for those dishes readily available so you can serve them up in a flash, without having to start from scratch every time. This dramatically speeds up response times for frequently requested data.
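A minimal read-through cache with a time-to-live (TTL) shows the "fast lane" idea. Repeat requests within the TTL are served from memory. The slow loader (standing in for a database query) only runs on a miss or after the entry expires. Python's built-in `functools.lru_cache` has no expiry, so this sketch rolls its own. The class and its parameters are hypothetical.

```python
import time


class TTLCache:
    """Tiny read-through cache: keeps each loaded value for `ttl` seconds."""

    def __init__(self, loader, ttl=60.0, clock=time.monotonic):
        self.loader = loader  # slow path, e.g. a database query
        self.ttl = ttl
        self.clock = clock
        self._store = {}      # key -> (value, time it was loaded)

    def get(self, key):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[1] < self.ttl:
            return hit[0]           # fast lane: served from memory
        value = self.loader(key)    # miss or expired: reload
        self._store[key] = (value, now)
        return value
```

The TTL is the design lever. Longer TTLs mean fewer database hits but staler data, so popular, rarely changing items (like menu prices) are the best candidates.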

Just as important is focusing on user experience metrics, rather than just server performance. While server metrics are useful, they don’t always tell the whole story. Metrics like page load time and time to first byte give you a much better understanding of how your users actually experience your application.

This screenshot from the AWS performance page showcases their commitment to high performance. It highlights the variety of tools and services they offer to help customers optimize their applications for speed and efficiency. Their focus on performance monitoring and optimization underscores just how important speed is in today's world.

Performance Budgets, Database Patterns, and Continuous Optimization: A Holistic Approach

Many companies use performance budgets to set clear performance targets. These budgets help teams prioritize optimization efforts and keep track of their progress.
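A performance budget can be as simple as a table of limits checked against real-user measurements. The sketch below evaluates the 75th percentile, the percentile Google's Core Web Vitals guidance uses. That way a handful of very slow sessions doesn't dominate the result, but the slow tail still counts. The metric names and millisecond limits are hypothetical.

```python
import math

# Hypothetical budgets in milliseconds.
BUDGETS_MS = {"page_load": 2500, "ttfb": 800}


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_budgets(measurements):
    """Return {metric: (p75, budget, within_budget)} for every budgeted metric."""
    report = {}
    for metric, budget in BUDGETS_MS.items():
        p75 = percentile(measurements[metric], 75)
        report[metric] = (p75, budget, p75 <= budget)
    return report
```

Running a check like this in CI or against daily real-user data turns the budget into an alert rather than a slide in a planning deck.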

Choosing the right database patterns is also critical. Different patterns suit different types of data and workloads: read replicas for read-heavy traffic, for example, or sharding for datasets too large for a single server. Selecting the appropriate pattern can dramatically impact performance.

Finally, continuous optimization based on real user data is key. Regularly analyzing how users interact with your application and then tweaking your architecture accordingly will lead to ongoing performance improvements.

Cloud architects, especially in fast-growing tech hubs like India, are in high demand precisely because of their expertise in performance optimization. Businesses are seeking cloud architects who can optimize their cloud deployments for high availability, fault tolerance, and cost-effectiveness. Learn more about cloud architect salaries in India. These skilled professionals play a crucial role in ensuring cloud architectures are designed to meet the performance demands of today's fast-paced business world.

Your Implementation Roadmap: From Planning To Success

Moving from cloud architecture design principles in theory to putting them into practice requires a strategic, phased approach. Imagine renovating your house while still living in it – you need a careful plan to balance progress and minimize disruption. This section explores proven frameworks and real-world examples to guide your cloud implementation journey.

Assessing Your Current State: An Honest Look

Before making any cloud changes, you need a thorough assessment of your current infrastructure. This means identifying your existing workloads, dependencies, and potential bottlenecks. Think of it like taking inventory before a home renovation—understanding what stays, what goes, and what needs an upgrade. This honest evaluation helps prioritize areas for improvement and sets realistic expectations. For a more structured approach to this assessment, you can check out our guide on cloud adoption frameworks.

Identifying Quick Wins: Building Momentum

Once you have a clear picture of your current setup, focus on identifying quick wins. These are smaller, achievable projects that demonstrate the benefits of cloud adoption early on. Migrating a non-critical application to the cloud, for example, can provide valuable experience and build confidence within your team. These initial successes build momentum and encourage buy-in from stakeholders who might be hesitant about larger changes.

Sequencing Changes: Minimizing Risk

Implementing cloud architecture design principles isn't a one-size-fits-all endeavor. Changes should be sequenced strategically to minimize risk. This means prioritizing critical workloads and carefully planning the migration process for each application. You wouldn’t demolish your kitchen before figuring out where you'll cook, right? A phased approach allows for adjustments and ensures business continuity.
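Once your assessment has mapped which applications depend on which, one way to sequence the work is to group applications into migration "waves." Each application moves only after everything it depends on. The sketch uses `graphlib` from Python's standard library (3.9+). The inventory is hypothetical, and dependencies-first is an assumption: some teams prefer to move tightly coupled applications together.

```python
from graphlib import TopologicalSorter

# Hypothetical application inventory: each app lists the apps it depends on.
DEPENDS_ON = {
    "reporting": {"orders-db"},
    "checkout": {"orders-db", "payments"},
    "payments": set(),
    "orders-db": set(),
    "marketing-site": set(),
}


def migration_waves(graph):
    """Group apps into waves; an app appears only after all of its dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

For this inventory, the first wave is the independent pieces (`marketing-site`, `orders-db`, `payments`). `checkout` and `reporting` follow once their dependencies have moved. A low-risk app like the marketing site also makes a natural quick win.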

This screenshot from the AWS Well-Architected Framework webpage highlights five of its pillars: operational excellence, security, reliability, performance efficiency, and cost optimization. (AWS added a sixth pillar, sustainability, in 2021.) The framework provides a consistent approach for evaluating architectures and implementing designs that scale over time. Aligning your implementation with these principles helps you build a robust and efficient cloud environment.

Building Buy-In and Establishing Governance

Getting stakeholder buy-in is crucial for successful cloud adoption. This involves addressing concerns, communicating benefits, and demonstrating value. Sharing success stories from other organizations, particularly those in your region, can be very effective. A clear governance framework ensures consistency across teams without hindering innovation. This framework defines roles, responsibilities, and processes for managing cloud resources, making sure everyone is working together towards a common goal.

Navigating Common Challenges: Learning From Others

Implementing cloud architecture design principles often presents challenges like integrating legacy systems, training teams, and overcoming cultural resistance. Learning from other organizations’ experiences can help you anticipate and address these hurdles. The story of a retail chain successfully migrating to the cloud during their busiest season, for instance, offers valuable lessons in planning, execution, and risk mitigation.

By adopting a structured approach, focusing on early successes, and learning from others, you can successfully implement cloud architecture design principles and unlock the cloud's full potential. Ready to take the next step? Transform your business with Signiance Technologies’ cloud solutions at https://signiance.com.