Imagine your favourite e-commerce site during a massive Black Friday sale. The site crashes. Even if it’s just for a minute, you and thousands of other frustrated customers will likely go elsewhere. That’s a huge loss in sales and trust. Cloud high availability is the architectural blueprint designed to prevent precisely this scenario, ensuring your systems stay online even when things go wrong behind the scenes.

Why ‘Always-On’ Is the New Standard

In our hyper-connected world, users don’t just hope for 24/7 access they expect it. Whether it’s a banking app, a streaming service, or a critical business tool, downtime isn’t an inconvenience anymore. It’s a direct threat to your business. The entire philosophy behind cloud high availability is building systems that can withstand and recover from failure without missing a beat.

Think of it like a successful retail chain. It doesn’t bet everything on a single, massive flagship store. Instead, it operates multiple branches in different locations. If one store has to close temporarily because of a power cut, customers can simply pop over to another nearby branch. The business keeps running, serving customers, and making money without interruption.

This analogy translates perfectly to cloud architecture. The fundamental goal is to eliminate any single point of failure (SPOF) that one critical component that, if it fails, brings the whole system crashing down. High availability tackles this head-on by building in redundancy. If one piece of the puzzle goes offline, a backup is already there, ready to take over instantly and seamlessly.

The Real Cost of Downtime

The fallout from an outage goes far beyond the immediate loss of a few transactions. Every minute a service is dark can trigger a cascade of negative consequences:

- Direct Revenue Loss: For an e-commerce platform or a subscription service, downtime literally means sales grind to a halt.

- Damaged Customer Trust: Unreliable services send customers straight to your competitors. A single bad experience can undo years of brand loyalty.

- Reduced Productivity: When internal applications go offline, employee workflows freeze, bringing entire business operations to a standstill.

- Brand Reputation Harm: Public outages quickly become news, painting a picture of your brand as unreliable and technically incompetent.

This growing awareness of downtime’s true cost is driving a major shift in tech spending, especially in India. Recent data reveals that Indian small and medium-sized businesses (SMBs) are pouring money into cloud services to bolster their availability. Projections show that by 2025, Indian SMBs will dedicate over 50% of their tech budgets to the cloud. In fact, 54% are already spending more than $1.2 million annually just to build more resilient IT environments. You can explore more on these global cloud spending trends on CloudZero.

Decoding the ‘Nines’ of Availability

So, how do we measure uptime? In the industry, we use a simple shorthand known as “the nines.” This percentage tells you just how reliable a system is over a specific period. While a perfect 100% is the dream, it’s practically impossible to achieve. Instead, organisations set their sights on specific, measurable targets that align with their business needs.

A system’s availability is a direct reflection of its design. It isn’t about hoping for the best; it’s about planning for failure and ensuring the user never even notices.

To put these percentages into perspective, let’s break down what they mean in terms of actual potential downtime.

Understanding the ‘Nines’ of Availability

This table breaks down what different availability percentages mean in terms of potential downtime per year, month, and week, illustrating the real-world impact of each level.

| Availability (%) | Downtime per Year | Downtime per Month | Downtime per Week |

|---|---|---|---|

| 99% | 3.65 days | 7.31 hours | 1.68 hours |

| 99.9% | 8.77 hours | 43.83 minutes | 10.08 minutes |

| 99.99% | 52.60 minutes | 4.38 minutes | 1.01 minutes |

| 99.999% | 5.26 minutes | 26.30 seconds | 6.05 seconds |

As you can clearly see, the jump from 99.9% (“three nines”) to 99.99% (“four nines”) is massive. It’s the difference between nearly nine hours of potential downtime a year and less than an hour. This context is absolutely crucial for making informed decisions about the design, cost, and effort required to build for true cloud high availability.

The Core Principles of Resilient Architecture

If you want to build a system that can take a punch and keep running, you need to start with a solid foundation. True cloud high availability isn’t something you bolt on at the end; it has to be part of your application’s DNA from the very first line of code. These core principles are the pillars holding up any reliable, always-on service.

Think of them as the unbreakable rules of engineering for durability. Without them, even the most sophisticated cloud tools won’t save you from downtime. We’re going to focus on three non-negotiables: redundancy, monitoring, and failover.

Getting these concepts right is essential for anyone building or managing digital products. They give you a practical framework for making smart architectural choices that directly protect your business and keep your customers happy.

The First Pillar: Redundancy

At its heart, redundancy is the simple but incredibly powerful idea of having a backup. It means you create duplicate copies of your critical system components, so if one goes down, another is ready to seamlessly take its place. A great parallel is a commercial airliner; it always has a co-pilot ready to take the controls, ensuring the flight continues safely no matter what.

This principle is your best weapon against single points of failure. In the cloud, this usually translates to running multiple instances of your servers, databases, or even entire application environments.

You’ll typically see redundancy implemented in one of two ways:

- Active-Active: In this model, all your redundant components are live and handling traffic at the same time. Picture a busy supermarket with all checkout lanes open, spreading the customer load evenly. This approach not only boosts availability but also improves overall performance by distributing the work.

- Active-Passive: Here, one primary component handles all the traffic while a secondary (passive) one sits on standby. The backup only springs into action if the primary fails. It’s a lot like the spare tyre in your car it’s there just in case, but it doesn’t do any work until you have a flat.

Choosing between these two really comes down to your budget, performance goals, and how much complexity you’re willing to manage. For a deeper dive into building resilient systems, you can learn more about our take on cloud architecture design principles.

The Second Pillar: Monitoring

If redundancy is your backup plan, then monitoring is your early warning system. It’s the practice of constantly keeping an eye on your system’s health and performance metrics to catch problems before they spiral into a full-blown outage. Without solid monitoring, you’re essentially flying blind.

Think of it like the dashboard in your car. It gives you a constant stream of vital information speed, fuel, engine temperature. If a warning light flashes for an overheating engine, you have time to pull over and fix the issue before it causes catastrophic damage.

A resilient system isn’t one that never fails, but one that detects failure and recovers from it instantly. Proactive monitoring is what makes this instant recovery possible.

Effective monitoring means tracking key metrics like CPU utilisation, memory usage, network traffic, and application error rates. When these numbers cross a certain threshold, automated alerts should fire off, letting your team know it’s time to investigate. This proactive approach helps you stamp out small issues before your users ever feel a thing.

The Third Pillar: Failover

Failover is the critical process that brings your redundancy strategy to life. It’s the automatic mechanism that switches operations from a failed component to a healthy, standby one. The goal is to make this transition so fast and seamless that your end-users don’t even notice a hiccup.

A perfect real-world analogy is a hospital’s backup generator. When the main power grid fails, the generator kicks in automatically within seconds. This ensures that life-support systems and other critical equipment keep running without any interruption. The switch is automatic, immediate, and potentially life-saving.

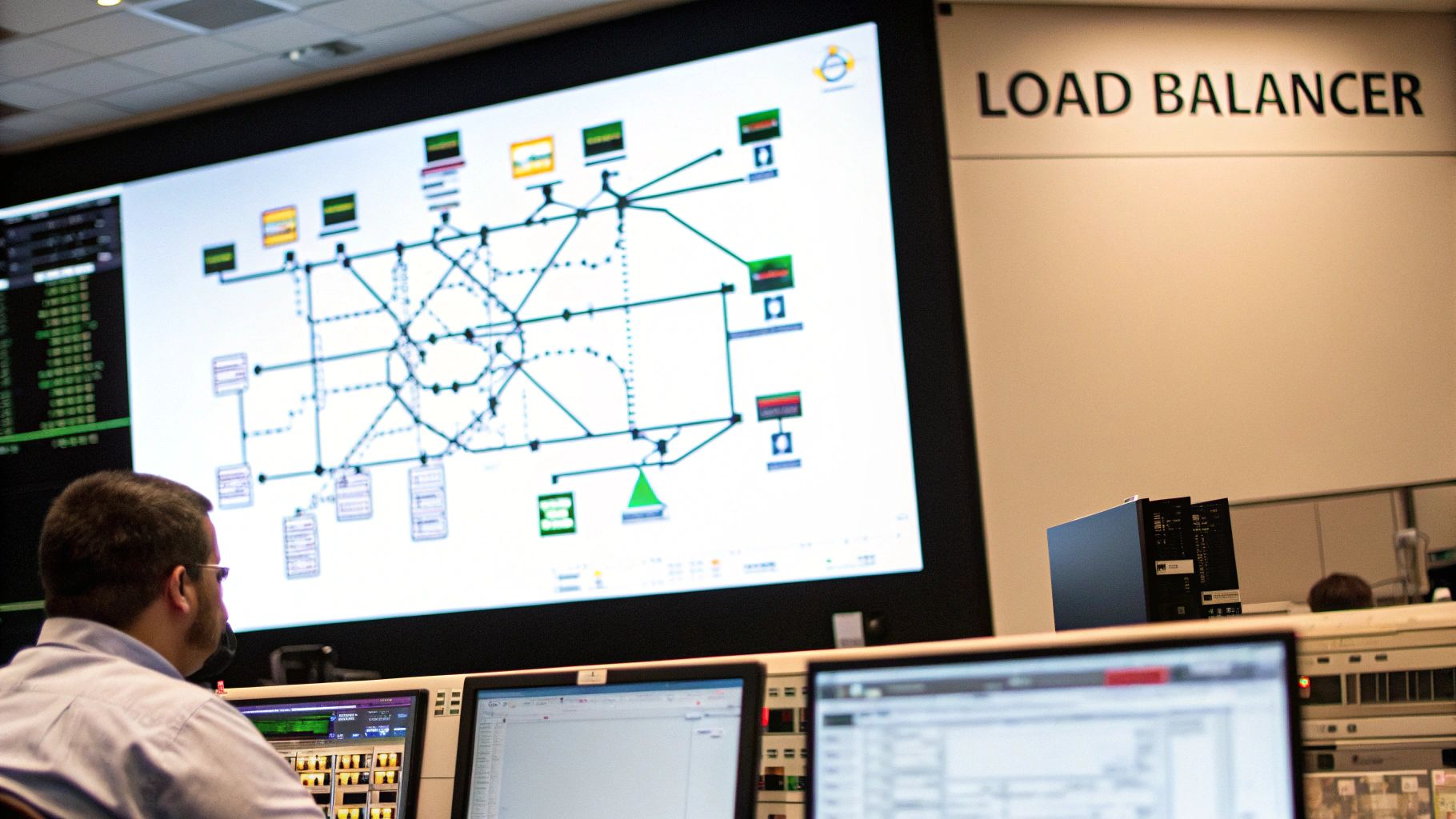

In a cloud high availability setup, this is typically handled by services like load balancers. When a health check from your monitoring system shows a server is unresponsive, the load balancer instantly stops sending traffic to it. It reroutes all new requests to the healthy, redundant servers, ensuring the application stays online and turning a potential disaster into a complete non-event.

Proven Design Patterns for High Availability

Alright, now that we’ve covered the core concepts, let’s get practical. Moving from theory to a live environment means using proven architectural blueprints. These design patterns are the time-tested strategies engineers rely on to build resilient, highly available applications in the cloud. They are the “how” that follows the “why,” giving you a clear roadmap to turn your availability goals into a functional reality.

It’s best to think of these patterns less as rigid rules and more as flexible frameworks. You can, and should, adapt them to your specific needs. By understanding them, you can build a system that doesn’t just react to failure but actively anticipates and handles it gracefully, keeping your service dependable.

Distribute Traffic with Load Balancing

One of the most fundamental patterns for achieving cloud high availability is load balancing. Think of a popular retail shop during a festive sale. If every customer is forced into a single checkout queue, it would quickly become a nightmare. The line would grind to a halt, and frustrated customers would start walking out. The obvious solution? Open more checkout counters and direct people to the shortest line.

A load balancer does exactly this for your application’s traffic. It sits in front of your servers and intelligently routes incoming user requests across a pool of identical server instances. This simple but powerful action achieves two critical goals:

- Prevents Overload: It stops any single server from getting swamped with traffic, which is a common cause of slowdowns and crashes.

- Enables Redundancy: A load balancer constantly checks the health of each server. If one becomes unresponsive, it instantly stops sending traffic its way, effectively taking it out of rotation without any impact on your users.

This pattern is your first line of defence against downtime from server failures or unexpected traffic spikes. It’s an essential building block for almost every highly available architecture.

Build Resilience with Geographic Redundancy

While balancing traffic across servers in one data centre is a great start, what happens if that entire facility has a power cut or a network outage? This is where geographic redundancy comes in. This powerful pattern involves deploying your application across multiple, physically separate locations.

These locations could be different Availability Zones (which are distinct data centres within the same city or region) or even entirely different Regions (geographic areas hundreds or thousands of kilometres apart).

By spreading your infrastructure across multiple locations, you protect your application from localised disasters. An outage in one data centre no longer means an outage for your users.

It’s like a global shipping company having distribution hubs on multiple continents. If a major storm disrupts operations at one hub, packages can simply be rerouted through another, ensuring deliveries continue with minimal delay. Adopting this pattern is a major step towards building a truly robust system that can withstand large-scale failures.

In India, this approach is fast becoming standard practice as the cloud market explodes. With a projected compound annual growth rate of 23.1%, the Indian cloud market is on track to hit a $13 billion valuation by 2026. This growth, particularly in sectors like e-commerce and fintech, absolutely depends on high availability architectures that use strategically placed local data centres to deliver low latency and continuous service.

Simplify Resilience with Managed Database Services

Let’s be honest: your database is often the most critical and complex part of your entire application. If it goes down, everything stops. Manually setting up a database for high availability, complete with replication, health monitoring, and failover logic, is a genuinely difficult and error-prone job.

This is exactly why using a managed database service from a cloud provider (like Amazon RDS, Azure SQL Database, or Google Cloud SQL) is a cornerstone of modern high availability design. These services have resilience baked right in.

- They automatically handle replicating your data to a standby instance.

- They constantly monitor the health of your primary database.

- If a failure occurs, they automatically switch over to the standby instance, often in just a few seconds.

Using a managed service offloads this immense operational headache, freeing up your team to focus on building features instead of wrestling with complex database infrastructure. It’s a powerful shortcut to achieving a highly available data tier with a fraction of the effort. These services are crucial for implementing a solid deployment strategy, and you can see how this all connects to a better user experience in our guide to zero-downtime deployment.

By combining these proven patterns, you can create a multi-layered defence against downtime, building a system that is resilient from the ground up.

Achieving High Availability on AWS and Azure

Knowing the theory behind cloud high availability is a great start, but the real magic happens when you apply those principles using the tools from top cloud providers. This is where Amazon Web Services (AWS) and Microsoft Azure come in. They both offer a powerful lineup of services specifically designed to help you build resilient and fault-tolerant systems.

Think of this section as the bridge between theory and practice. We’re going to look at the specific services each platform provides and see how they map to the high availability design patterns we’ve already covered. This isn’t just a list of features; it’s a practical guide to help you make smart architectural choices on either platform.

Core Building Blocks for Resilience

Both AWS and Azure are architected around a simple but powerful idea: physical and logical isolation. To keep a single point of failure from taking down your entire application, they spread their infrastructure across the globe. Getting to grips with their terminology is the first step.

- AWS Regions and Availability Zones: An AWS Region is a large, separate geographical area, like Mumbai or London. Critically, each Region contains multiple, isolated locations called Availability Zones (AZs). Think of an AZ as a distinct data centre with its own power, cooling, and network. Spreading your application across multiple AZs within one Region is the most common and effective way to achieve high availability.

- Azure Geographies and Regions: Azure follows a similar model. A Geography is a discrete market, such as India or the United Kingdom, that keeps your data within certain borders. Within these Geographies, you’ll find one or more Regions. An Azure Region is a set of data centres deployed close together to ensure low latency. Just like with AWS, the best practice is to deploy your resources across multiple locations to protect against any localised failures.

By using these foundational structures, you can design an application that can withstand the failure of an entire data centre without your users ever noticing.

Implementing Load Balancing and Failover

As we’ve established, spreading traffic effectively is vital for both performance and availability. Luckily, both platforms offer sophisticated load balancing services that are the heart of any high availability strategy.

AWS Elastic Load Balancing (ELB) comes in a few flavours, but the Application Load Balancer (ALB) is the go-to for most web traffic. It’s smart, operating at the application layer to route requests based on the content itself. More importantly, it works hand-in-hand with Auto Scaling groups to automatically add or remove servers based on real-time demand and health checks. This ensures you always have just the right amount of capacity online.

On the other side, Azure Load Balancer works at the transport layer, offering raw speed and low latency. For more advanced, application-aware routing, Azure Application Gateway is the direct equivalent to AWS’s ALB. For global reach, Azure Traffic Manager is the perfect tool. It directs users to the best endpoint based on performance, their location, or a weighted distribution, making it essential for multi-region setups.

A key principle of the AWS Well-Architected Framework is to “stop guessing capacity.” Using services like Auto Scaling and managed load balancers allows your architecture to respond to load automatically, a core tenet of building for reliability.

The choice between these services really boils down to your specific needs whether you require simple traffic distribution or complex, content-based routing rules across the globe.

A Comparative Look at Key Services

To build a truly complete and highly available system, you’ll need more than just load balancers and virtual machines. Here’s a quick comparison of how AWS and Azure handle other critical components.

| High Availability Goal | AWS Service Solution | Azure Service Solution |

|---|---|---|

| Server Redundancy | Auto Scaling Groups across AZs | Virtual Machine Scale Sets with Availability Sets/Zones |

| Global Traffic Management | Amazon Route 53 (DNS Failover) | Azure Traffic Manager |

| Managed Relational Database | Amazon RDS (Multi-AZ) | Azure SQL Database (Geo-Replication) |

| Managed NoSQL Database | Amazon DynamoDB (Global Tables) | Azure Cosmos DB (Multi-region Writes) |

This table shows how both platforms provide very similar, competitive services. The final decision often depends on your team’s existing skills, specific feature needs, or how well a service integrates with the rest of your tech stack.

For businesses targeting the Indian market, the placement of these cloud resources is especially important. Low latency and high availability are non-negotiable for serving a massive user base with unique local demands. India-optimised cloud providers solve this by locating their data centres within the country, ensuring faster page loads and a smoother user experience for sectors like e-commerce. These providers often guarantee uptime levels over 99.9%, which is critical for business continuity. This local-first approach minimises risks from network lag or cross-border data transfer issues. You can find more details about the benefits of local cloud hosting in India at Bluehost.com.

Ultimately, both AWS and Azure offer a complete toolkit for designing and implementing a rock-solid cloud high availability strategy. By understanding their core services and how they align with resilience patterns, your engineering teams can build systems that meet even the most demanding uptime requirements.

Distinguishing High Availability from Disaster Recovery

It’s a common mistake to lump cloud high availability (HA) and disaster recovery (DR) together. While both are crucial for keeping your business running smoothly, they tackle completely different types of problems on very different schedules. Getting this distinction right is the first step toward building a genuinely resilient operation.

I find it helps to think of it like this: high availability is like having a spare tyre in your car. A flat tyre is a pretty common, localised issue. You just pull over, swap it out in a few minutes, and you’re back on your way with hardly any disruption. HA is built for these everyday, component-level failures a single server giving up, a database instance becoming unresponsive, or a network switch glitching.

Disaster recovery, on the other hand, is your full-blown car insurance policy. It won’t help you with a simple flat tyre. You need it for a catastrophic event, like your entire car being written off in a major accident. DR is your master plan to bring your whole application or data centre back from the dead after a massive outage, such as a fire destroying a facility or a region-wide cloud service failure.

To put it simply, HA and DR are two sides of the business continuity coin, but they address very different scenarios. The following table breaks down their core differences.

High Availability vs Disaster Recovery a Comparison

| Aspect | High Availability (HA) | Disaster Recovery (DR) |

|---|---|---|

| Primary Goal | To prevent downtime by automatically handling component failures. | To recover from downtime after a major incident has occurred. |

| Scope | Localised, single points of failure (e.g., one server, a database). | Widespread, catastrophic events (e.g., entire data centre, region). |

| Response Time | Instantaneous and automatic; ideally seamless for the end-user. | Measured in minutes, hours, or even days; a planned recovery process. |

| Typical Strategy | Redundancy, failover clusters, load balancing. | Data backups, replication to a secondary site, recovery plans. |

As you can see, their objectives and methods are fundamentally different. One is proactive and automated for small problems, while the other is a planned, reactive process for large-scale disasters.

Key Metrics and Mindset

The primary goal of high availability is to prevent downtime altogether. It relies on automated systems, such as load balancers and redundant components, to ensure a service never blips offline because of a single fault. The switch from a failing part to a healthy one is designed to be so fast it’s completely invisible to your users.

Disaster recovery’s goal is to recover from downtime. It accepts that a major event has already knocked you offline and focuses on the “what now?” The whole point is to get the system back online within a pre-agreed timeframe. This process is rarely instant and involves restoring data and infrastructure, often in a completely different geographical location.

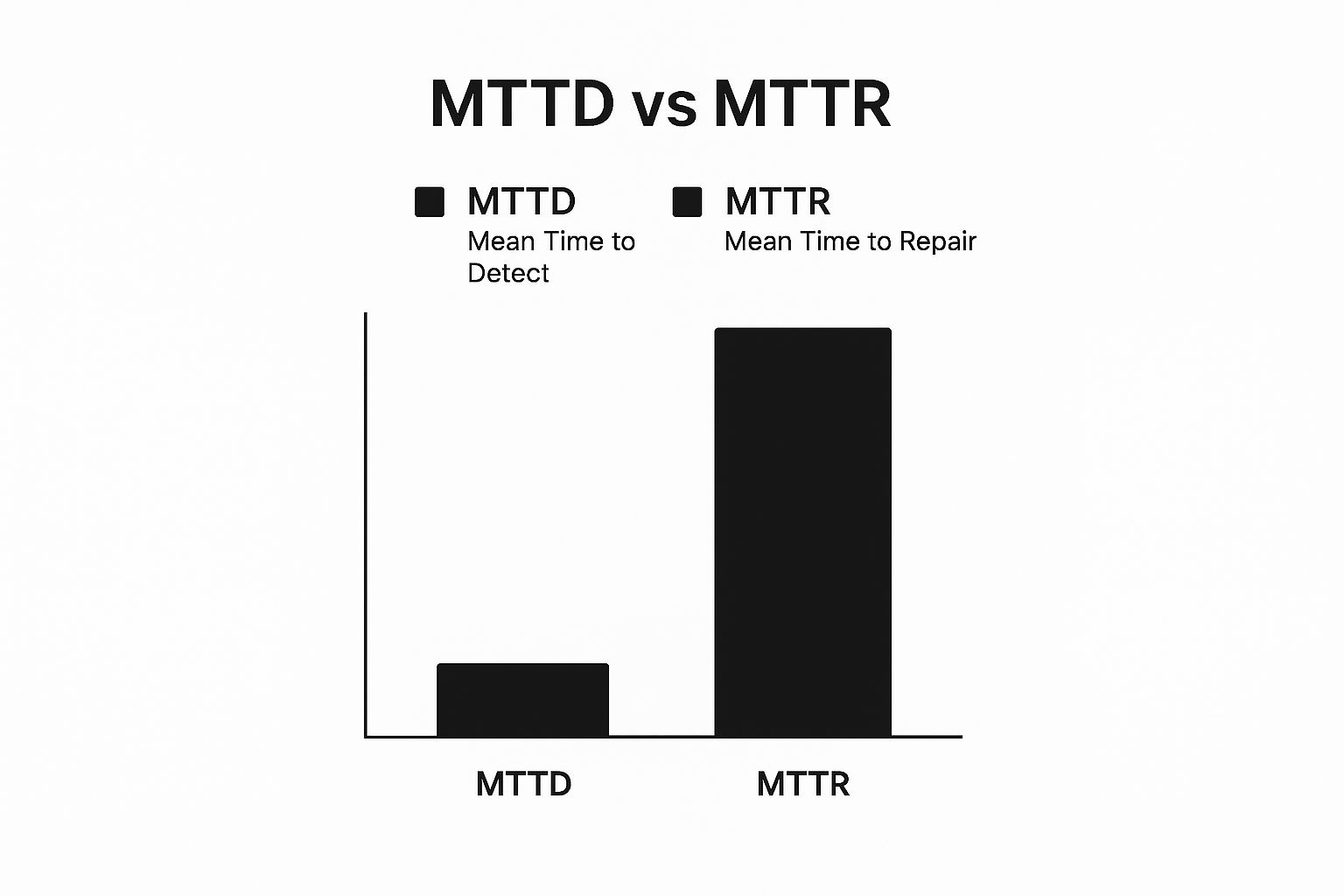

This image visualises two critical metrics in system reliability: Mean Time to Detect (MTTD) and Mean Time to Repair (MTTR). These are central to both HA and DR planning.

The graphic really brings home the point that high availability strives for near-zero detection and repair times through smart automation. In contrast, disaster recovery processes, by their very nature, involve longer timelines for both detecting the full scope of the disaster and repairing it.

Introducing RTO and RPO

When we start planning for disaster recovery, the conversation quickly turns to two essential metrics:

- Recovery Time Objective (RTO): This is the absolute maximum time your application can be offline after a disaster hits before it causes serious harm to the business. Your RTO might be a few minutes for a critical payment system or several hours for an internal reporting tool.

- Recovery Point Objective (RPO): This defines the maximum acceptable amount of data loss, measured in time. If your RPO is one hour, it means that in a worst-case scenario, you could lose up to an hour’s worth of data created just before the incident.

High availability operates with an RTO and RPO of near-zero for component failures. Disaster recovery strategies, however, involve carefully balancing the costs of achieving lower RTO and RPO against the business impact of a major outage.

Ultimately, you don’t choose between HA and DR; a truly robust strategy requires both. You need high availability to handle the daily hiccups and a solid disaster recovery plan to prepare for that “once-in-a-decade” catastrophe.

To see how these concepts translate into practical solutions, take a look at our case study on achieving high availability and cost optimisation. It provides real-world examples of how to build a framework that protects your business from disruptions of all sizes.

Best Practices for Maintaining Uptime

Getting to a state of cloud high availability isn’t a one-and-done project. It’s an ongoing discipline, a commitment to resilience that demands constant vigilance, rigorous testing, and frankly, a culture that prizes reliability above all else.

It’s one thing to design a redundant system on a whiteboard, but it’s another thing entirely to ensure it actually works when disaster strikes. You have to move past hoping your failover mechanisms will work and start actively proving they will. These aren’t just suggestions; they’re the core practices that separate the truly resilient from the merely hopeful.

Intentionally Break Things to Build Strength

How can you be sure your system will survive a real-world failure? By causing one yourself, on your own terms. This is the heart of chaos engineering and planned “Game Days.” Instead of passively waiting for an outage, you proactively inject failures into a controlled environment to watch how your system responds.

What does this look like in practice?

- You might terminate a random server to see if your load balancer and auto-scaling group even notice.

- You could deliberately block network access to your primary database to confirm the failover to a standby replica is as seamless as you designed it to be.

- You could even simulate an entire availability zone going offline to truly test your multi-AZ strategy.

The point of chaos engineering isn’t to create chaos; it’s to uncover weaknesses before they become customer-facing problems. Think of it as a fire drill for your architecture. You practice for the emergency so that when a real one hits, muscle memory takes over.

Make Monitoring Your First Line of Defence

You can’t fix what you can’t see. This might sound obvious, but it’s a fundamental truth. Robust monitoring and alerting are absolutely non-negotiable for keeping services online. Your monitoring platform is your system’s nervous system, giving you a real-time view of its health and flagging strange behaviour long before your users do.

Good monitoring goes far beyond just checking CPU and memory usage. You need to be tracking application-level metrics that directly reflect the user experience, like transaction times, API error rates, and login failures. When a critical metric crosses a threshold you’ve defined, an alert should automatically fire off to the right team, letting them jump on it immediately.

Automate Everything with Infrastructure as Code

At the end of the day, human error is still one of the biggest culprits behind major outages. A single, manual change pushed in a hurry can easily topple an otherwise solid system. This is precisely why Infrastructure as Code (IaC) has become a cornerstone of high availability.

Using tools like Terraform or AWS CloudFormation, you define your entire infrastructure in version-controlled configuration files. This simple shift in process makes every deployment consistent, repeatable, and peer-reviewed, slashing the risk of a clumsy manual mistake.

Better yet, when you need to spin up a new environment for testing or recover from a catastrophic failure, you can do so automatically and perfectly in minutes. It’s the ultimate safety net for your operations.

Frequently Asked Questions

When you start digging into cloud high availability, a lot of practical questions pop up. People often wonder about the real costs, what’s actually possible, and how all the jargon fits together. Let’s tackle some of the most common queries we hear from engineers and business leaders.

How Much Does Cloud High Availability Cost?

There’s no single price tag; the cost really hinges on how resilient you need your system to be. A simple setup with a few backup servers in the same data centre will be much cheaper than a full-blown multi-region architecture designed to withstand a major regional disaster. The main expense comes from running duplicate resources.

The beauty of the cloud, though, is its pay-as-you-go model. This means you can control these costs quite effectively. The trick is to weigh the investment against the potential cost of downtime for your specific application—think lost revenue and damage to your reputation. It’s as much a business strategy decision as it is a technical one.

Can I Achieve 100% Uptime with the Cloud?

Chasing a perfect 100% uptime is a bit like chasing a unicorn. It’s a theoretical ideal, not something you can realistically achieve. Even the biggest cloud providers like AWS offer Service Level Agreements (SLAs) that guarantee incredibly high uptime, like 99.99% (“four nines”) or 99.999% (“five nines”), but they still account for a tiny sliver of potential downtime.

Instead of aiming for an impossible number, the real goal is to build systems that can bounce back from failure so quickly that your users never even notice. A well-designed system makes any interruption practically invisible, which is a much more valuable and achievable objective.

Is High Availability the Same as Fault Tolerance?

It’s a subtle but important difference. Think of it this way:

Fault tolerance is the ability of a system to keep running without a single hiccup even when a part of it fails. It means zero interruption from a single point of failure.

High availability is the bigger picture. It’s about making sure your application is up and running to meet a certain uptime goal (like 99.99%). You often use fault-tolerant components to achieve high availability. A highly available system might have a tiny, almost unnoticeable blip during a failover, whereas a truly fault-tolerant one wouldn’t.

Essentially, fault tolerance is one of the key tools in your toolbox for building a highly available system.

At Signiance Technologies, we specialise in designing and implementing robust cloud architectures that deliver exceptional uptime and performance. Our experts can help you build a resilient, cost-effective infrastructure tailored to your business needs. Unlock the full potential of the cloud with us.