Beyond The Big Three: Who’s Actually Worth Considering

While the cloud provider comparison often revolves around AWS, Azure, and Google Cloud, a deeper analysis reveals a vibrant ecosystem of specialised and regional contenders. These alternative providers aren’t just smaller versions of the giants; they are carving out specific market segments by focusing on distinct advantages, from aggressive pricing models to localised compliance and unique service offerings. For organisations with needs that don’t perfectly match the hyperscalers’ roadmaps, these players offer significant value.

Regional Champions And Specialised Players

One of the most notable trends is the emergence of regional powerhouses. Alibaba Cloud, for instance, holds a commanding position in the Asia-Pacific market, building on its deep integration with the region’s e-commerce and digital payment networks. It has become the go-to choice for businesses targeting substantial growth in China and Southeast Asia. In Europe, providers like OVHcloud and Scaleway are gaining ground by prioritising data sovereignty and GDPR compliance, a major consideration for many EU-based organisations. Their focus on transparent pricing and local data centres directly counters the regulatory and privacy concerns that can arise when using US-based providers.

Beyond geography, specialisation serves as a key point of difference.

- Oracle Cloud Infrastructure (OCI) has established a strong reputation for its high-performance computing and enterprise-grade database services, appealing to businesses with existing Oracle workloads looking for a direct path to the cloud.

- DigitalOcean and Linode (now part of Akamai) have cultivated a dedicated following among developers and startups. They offer straightforward, developer-centric platforms with predictable pricing and excellent documentation, making them ideal for less complex applications and individual projects.

- IBM Cloud remains a formidable competitor in the enterprise sector, particularly for companies prioritising hybrid cloud strategies and the integration of on-premises infrastructure.

The Evolving Indian Market

In the Indian cloud market, the competition is intense as leading providers work to deliver the most scalable and secure solutions. In 2025, major players including AWS, Microsoft Azure, Google Cloud, IBM Cloud, and Oracle Cloud Infrastructure are sharpening their focus on the particular needs of Indian businesses. Their offerings range from cost-effective platforms for emerging startups to powerful, resilient systems for large enterprises. You can discover more about the top cloud service providers in India and see how they are tailoring their services for the local market. This regional competition creates an environment that is highly beneficial for customers.

Opting for a provider outside the main three isn’t about accepting a compromise; it’s about making a precise choice. A multi-cloud strategy frequently includes these specialised providers to optimise for cost, performance, or compliance on a per-workload basis. By looking beyond the most prominent names in your cloud provider comparison, you can find solutions that are better aligned with your specific business objectives and technical requirements.

Performance Reality Check: What Actually Matters

While marketing materials often highlight impressive performance figures, the true test of a cloud provider is how its infrastructure performs under the strain of real-world operations. Raw compute speed represents only one piece of the puzzle. The critical factors that dictate application responsiveness and user satisfaction are network latency, data throughput, and architectural resilience. A meaningful cloud provider comparison must go beyond surface-level metrics to analyse which platforms deliver consistent results when it counts.

The core of a provider’s performance capability is its global infrastructure. The quantity of regions and Availability Zones (AZs) has a direct impact on your ability to deploy services closer to your end-users, which is essential for reducing latency. For instance, a company targeting customers across India and Southeast Asia would gain a distinct advantage by choosing a provider with multiple, well-connected data centres in that geography. This architectural depth also builds resilience. A properly configured multi-AZ deployment ensures that if one data centre experiences an outage, your services can fail over to another within the same region, maintaining continuous operation.
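The resilience benefit of a multi-AZ deployment can be put into rough numbers. The sketch below assumes that every zone has the same availability and that zones fail independently, which is an optimistic simplification because real outages are sometimes correlated. The 99.9% input is purely illustrative and is not a published figure for any provider.

```python
def combined_availability(zone_availability: float, zones: int) -> float:
    """Probability that at least one of `zones` identical, independent zones is up."""
    if not 0.0 <= zone_availability <= 1.0:
        raise ValueError("zone_availability must be between 0 and 1")
    if zones < 1:
        raise ValueError("zones must be at least 1")
    # The service is unavailable only when every zone is down at the same time.
    return 1.0 - (1.0 - zone_availability) ** zones


if __name__ == "__main__":
    # Illustrative input: each zone is assumed to be available 99.9% of the time.
    for zones in (1, 2, 3):
        print(zones, f"{combined_availability(0.999, zones):.7f}")
```

Under these assumptions each extra zone multiplies the probability of a full outage by the single-zone failure rate, which is why a second zone matters far more than small differences in per-zone reliability.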

Architecture, Latency, and Edge Computing

A fundamental bottleneck for performance is the physical distance data must travel. While all major providers operate extensive global networks, their strategic differences become clear when examining their edge capabilities. Edge locations are compact infrastructure deployments designed to cache content and execute code closer to users, significantly reducing response times.

- AWS draws on an expansive network of over 100 AZs and hundreds of edge locations, which serve its CloudFront CDN, and offers services like AWS Outposts for hybrid cloud solutions.

- Microsoft Azure differentiates itself with a strong enterprise orientation, offering over 60 regions and robust hybrid capabilities through Azure Arc, which extends Azure management across any infrastructure.

- Google Cloud Platform (GCP), drawing on its experience managing a global network for Google Search, excels in data analytics and low-latency networking, supported by a growing footprint of regions and edge points of presence.

Consider an e-commerce platform during a major promotional event. Its ability to manage sudden traffic surges depends less on theoretical CPU limits and more on the provider’s load balancing efficiency, database performance under pressure, and the network’s capacity to serve static content quickly from the edge. Providers with more mature and geographically distributed infrastructure generally manage these unpredictable workloads with greater stability.

To help you visualise these architectural differences, the table below provides a side-by-side analysis of the core infrastructure and service level agreements for the leading providers.

| Provider | Global Regions | Availability Zones | Edge Locations | Network Performance | Uptime SLA |
|---|---|---|---|---|---|
| AWS | 33 | 105+ | 600+ | High-performance global backbone with custom silicon | 99.99% for most core services |
| Azure | 60+ | Varies per region | 190+ | Extensive global network with deep enterprise integrations | 99.99% for most core services |
| GCP | 40 | 121 | 180+ | Premium Tier network built on Google’s private fibre | 99.99% for most core services |

This data highlights that while all providers offer robust SLAs, their infrastructure scale and network strategies differ. The sheer number of regions from Azure appeals to enterprises seeking widespread presence, while the scale of AWS’s AZs and edge network provides fine-grained control for global applications.
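To put the SLA column in practical terms, an uptime percentage can be converted into a downtime budget. The following is a minimal sketch; the 30-day period is an assumption chosen for round numbers, and each provider’s SLA defines its own measurement window and exclusions.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime an uptime SLA permits over a period of `days`."""
    if not 0.0 <= sla_percent <= 100.0:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    for sla in (99.9, 99.95, 99.99):
        minutes = allowed_downtime_minutes(sla)
        print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

At 99.99%, the budget works out to a little over four minutes in a 30-day period, which is a useful yardstick when deciding whether a workload really needs that tier.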

Situational Performance Recommendations

Selecting a provider based on performance means matching their architectural strengths to your specific application requirements. For a startup developing a globally distributed mobile app, a provider with an extensive and high-performing edge network like AWS or GCP is crucial. In contrast, an enterprise processing large datasets for machine learning would prioritise a provider offering specialised hardware and high-throughput internal networking, an area where Google Cloud Platform often has an advantage.

Ultimately, the most effective cloud provider comparison looks past advertised benchmarks and scrutinises the underlying architectural reality. By assessing the distribution of regions, the resilience model of availability zones, and the strategic placement of edge networks, you can make a well-informed decision that ensures your applications perform reliably for your target users.

The True Cost Of Cloud: Beyond Surface Pricing

Pricing transparency separates smart cloud decisions from expensive mistakes. A thorough cloud provider comparison must go beyond advertised rates to uncover complex billing structures and hidden costs that can seriously affect your budget. By analysing realistic cost scenarios, you can see how different usage patterns influence expenses across providers, from small development environments to large-scale enterprise applications.

Dissecting Hidden Costs and Billing Models

While compute resources often represent the largest part of a cloud bill, focusing only on hourly rates is a common mistake. True cloud costs are a blend of compute, storage, data transfer fees, and charges for supporting services like load balancers and monitoring tools. Data egress fees, the cost of moving data out of a provider’s network, are particularly known for causing budget overruns. A provider might offer a low-cost virtual machine, but if your application frequently sends large amounts of data to users, the egress charges can easily erase any initial savings.
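A quick calculation shows how egress can outweigh a cheaper virtual machine. This is a sketch under stated assumptions: both providers are made up, every rate is a hypothetical placeholder rather than a real price, and the model ignores storage, support, and tiered egress pricing.

```python
HOURS_PER_MONTH = 730  # common approximation for one month of continuous use


def monthly_cost(vm_usd_per_hour, egress_gb, egress_usd_per_gb, free_egress_gb=0.0):
    """Compute plus egress cost for one always-on VM over a month."""
    compute = vm_usd_per_hour * HOURS_PER_MONTH
    # Only traffic above the free allowance is billed.
    billable_gb = max(0.0, egress_gb - free_egress_gb)
    return compute + billable_gb * egress_usd_per_gb


# Hypothetical rates for two made-up providers; replace with real quotes.
cheap_vm = monthly_cost(vm_usd_per_hour=0.04, egress_gb=5000, egress_usd_per_gb=0.09)
cheap_egress = monthly_cost(vm_usd_per_hour=0.05, egress_gb=5000, egress_usd_per_gb=0.01)

print(f"Lower VM rate, higher egress: ${cheap_vm:.2f}")
print(f"Higher VM rate, lower egress: ${cheap_egress:.2f}")
```

With these made-up inputs, the provider with the cheaper VM ends up costing several times more once 5 TB of outbound traffic is included, which is the pattern the paragraph above warns about.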

Similarly, storage pricing is tiered, with each level offering different performance and cost implications. Hot storage, for data you access frequently, is more expensive than cold storage meant for archival. Placing data in the wrong tier can lead to significant and avoidable expenses. This is especially relevant in India’s fast-growing market. For instance, the India cloud computing market generated $17,881.7 million in 2024, with Software as a Service (SaaS) making up 70.76% of this amount. As this market grows, a detailed understanding of pricing is vital for profitability. You can find more details on the growth of cloud computing in India.

To better understand how these costs break down, it’s useful to compare the pricing structures of major providers for common services. The table below offers a side-by-side look at how each platform approaches billing for compute, storage, and data transfer.

| Service Type | AWS | Azure | Google Cloud | Oracle Cloud | Cost Factors |
|---|---|---|---|---|---|
| Compute | Per-second billing for EC2 instances. Discounts via Savings Plans, Reserved Instances, and Spot Instances. | Per-second billing for Virtual Machines. Discounts via Reservations and Spot Virtual Machines. | Per-second billing with Sustained Use Discounts applied automatically. Preemptible VMs for big savings. | Per-second billing. Offers Flexible VM shapes for precise resource allocation. | Instance type, vCPUs, RAM, OS, region, and commitment term (1-3 years) heavily influence the final cost. |
| Storage | S3 Tiers (Standard, Intelligent-Tiering, Glacier) with different costs for storage and retrieval. | Blob Storage Tiers (Hot, Cool, Archive) with varying prices for access and transactions. | Cloud Storage classes (Standard, Nearline, Coldline, Archive) with automated lifecycle rules. | Object Storage tiers (Standard, Infrequent Access, Archive). Lower retrieval fees than competitors. | Amount of data stored, frequency of access, number of read/write operations, and data redundancy options. |
| Data Transfer | Egress (outbound) data transfer is tiered and priced per GB. Inbound is free. Data transfer between regions is charged. | Outbound data transfer is priced per GB based on zones. Inbound is free. Inter-region transfer costs apply. | Egress traffic is priced per GB, with premium and standard tiers. Inbound is free. | Very generous free tier (10 TB/month). Significantly lower egress fees compared to the top three providers. | The volume of data transferred out of the cloud provider’s network is the main cost driver. Regional transfers also add to the bill. |

This comparison shows that while the big three (AWS, Azure, and Google Cloud) have similar pricing philosophies, Oracle Cloud stands out with its more generous free tier and lower data egress costs. This can be a major advantage for applications that are data-intensive.

Predictability vs. Variable Costs

Another key factor in any cloud provider comparison is the predictability of your monthly bill. Some providers, like DigitalOcean, offer simple, flat-rate pricing, which is ideal for startups and teams needing a straightforward budget. The hyperscalers present more complex models with several discount options:

- Reserved Instances (RIs): You commit to a specific instance type for a 1- or 3-year term to get a substantial discount.

- Savings Plans: You commit to a certain amount of hourly spend for a term, which offers more flexibility than RIs.

- Spot Instances/Preemptible VMs: You can use spare cloud capacity at discounts of up to 90%, but the provider can take back the instance with little warning.

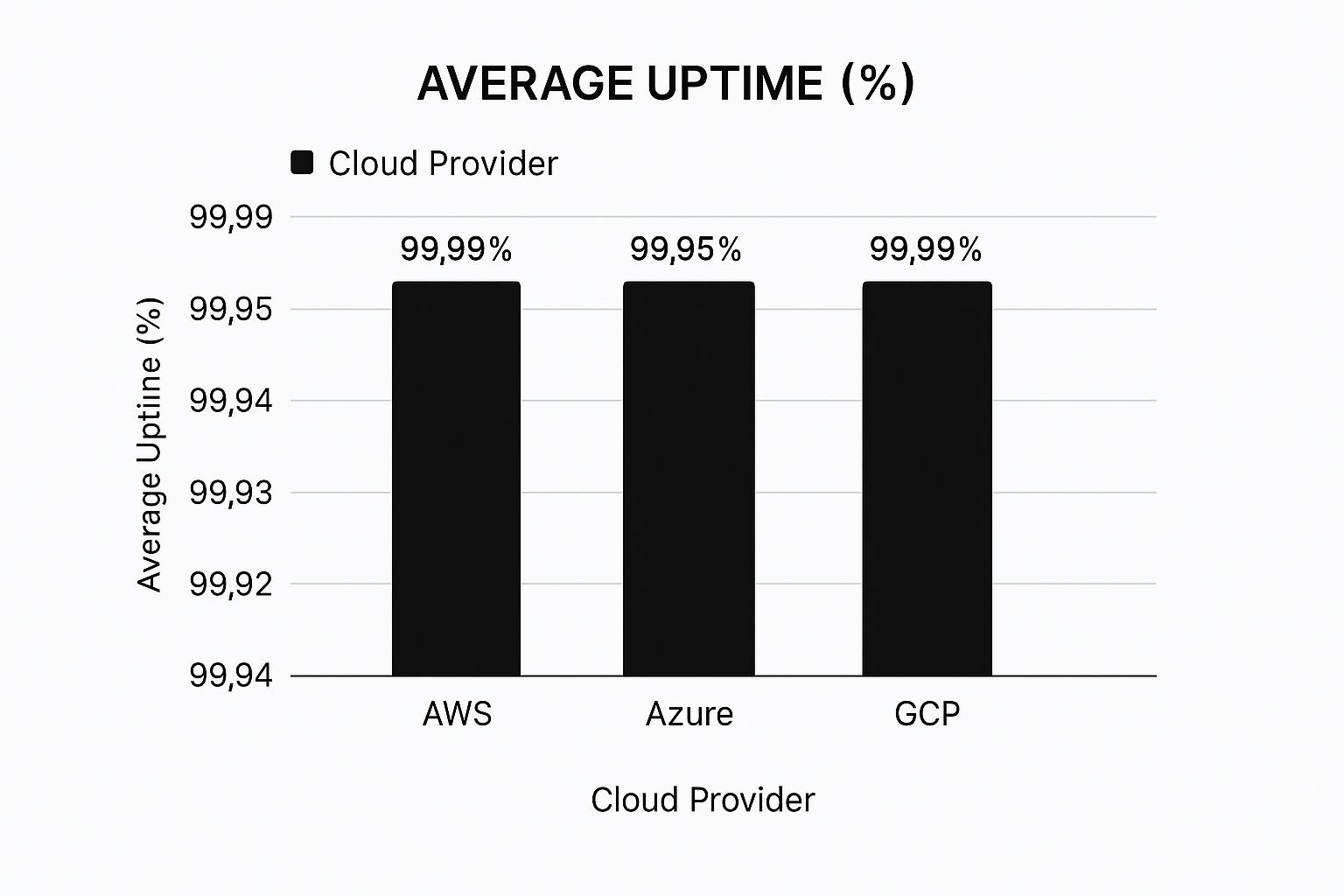

The infographic below illustrates the average uptime percentages for the top providers, which is an important factor when considering the value you get for your money.

The data indicates that while all major platforms provide high reliability, AWS and GCP show slightly higher average uptime. This can be a deciding factor for mission-critical workloads where any downtime has a direct financial impact.

Selecting the right pricing model depends on your workload’s characteristics. A stable, predictable application is an excellent fit for Reserved Instances, securing large savings. In contrast, a flexible, fault-tolerant workload like batch processing can achieve major cost reductions with Spot Instances. However, using Spot Instances effectively requires strong automation to manage potential interruptions. A successful cost strategy often combines these models, matching the right plan to each workload to find the best balance of cost, performance, and reliability.
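The matching of pricing model to workload can be sketched as a small comparison. Everything numeric here is an assumption for illustration: the hourly rate, the discount levels, and the `rework_fraction` parameter (a hypothetical allowance for work repeated after Spot interruptions) are not taken from any provider’s price list, and Savings Plans are left out for brevity.

```python
HOURS_PER_MONTH = 730


def on_demand(rate, utilisation):
    """Pay the full rate, but only for the hours actually used."""
    return rate * HOURS_PER_MONTH * utilisation


def reserved(rate, discount):
    """Pay the discounted rate for every hour of the month, used or not."""
    return rate * (1 - discount) * HOURS_PER_MONTH


def spot(rate, discount, utilisation, rework_fraction=0.0):
    """Pay a discounted rate for used hours, plus extra hours to redo interrupted work."""
    return rate * (1 - discount) * HOURS_PER_MONTH * utilisation * (1 + rework_fraction)


def cheapest(rate, utilisation, interruptible,
             reserved_discount=0.40, spot_discount=0.70, rework_fraction=0.10):
    """Return the name of the lowest-cost option for a workload profile."""
    options = {
        "on-demand": on_demand(rate, utilisation),
        "reserved": reserved(rate, reserved_discount),
    }
    # Spot capacity only qualifies when the workload tolerates interruptions.
    if interruptible:
        options["spot"] = spot(rate, spot_discount, utilisation, rework_fraction)
    return min(options, key=options.get)


if __name__ == "__main__":
    print(cheapest(rate=0.10, utilisation=1.0, interruptible=False))  # steady service
    print(cheapest(rate=0.10, utilisation=0.3, interruptible=True))   # batch processing
    print(cheapest(rate=0.10, utilisation=0.3, interruptible=False))  # occasional use
```

In this simplified model a reservation only pays off when utilisation exceeds one minus the discount (60% with the assumed 40% discount), which is why it suits stable workloads and not occasional ones.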

Security Implementation: Where Providers Actually Differ

Security marketing often presents a uniform message, with every provider claiming robust and comprehensive protection. However, a detailed cloud provider comparison shows that the practical application of security controls, incident response protocols, and compliance frameworks varies considerably. Understanding these subtle differences is essential, as they directly impact your organisation’s risk profile and day-to-day operational security. Beyond the marketing slogans, the true distinctions are found in the architecture of their security services and the degree of granular control given to customers.

The shared responsibility model is the foundation of cloud security, but the way providers fulfil their part of this agreement is where they diverge. This model states that while the provider secures the core infrastructure, the customer is responsible for securing anything they place in the cloud. The key differentiator becomes the suite of tools and services each provider offers to help customers meet their obligations effectively.

Identity, Access, and Network Controls

Identity and Access Management (IAM) is a central security pillar, yet each provider’s approach has distinct practical consequences for users.

- AWS IAM is recognised for its powerful and granular controls, allowing for highly specific policy creation. This strength can also be a weakness, as its complexity can lead to misconfigurations if not managed with expertise.

- Azure Active Directory (Azure AD), now known as Microsoft Entra ID, provides deep integration with on-premises Active Directory and Microsoft 365. This makes it a natural fit for enterprises already invested in the Microsoft ecosystem.

- Google Cloud IAM is frequently commended for its straightforward, project-based resource structure. This can make permission management simpler for teams organised around particular projects.
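To illustrate what granular control looks like in practice, the sketch below builds a narrowly scoped AWS IAM policy document as a Python dictionary and runs a simple check for wildcard grants, one common source of the misconfigurations mentioned above. The bucket name and prefix are hypothetical, and the `broad_grants` helper is an illustrative lint written for this example, not part of any AWS tooling.

```python
import json


def read_only_policy(bucket, prefix):
    """Build an IAM policy document granting read access to one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOnePrefix",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }


def _as_list(value):
    # IAM accepts either a single value or a list for these fields.
    return value if isinstance(value, list) else [value]


def broad_grants(policy):
    """Return warnings for Allow statements that use wildcard actions or resources."""
    warnings = []
    for statement in _as_list(policy["Statement"]):
        if statement.get("Effect") != "Allow":
            continue
        for action in _as_list(statement.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                warnings.append(f"wildcard action: {action}")
        for resource in _as_list(statement.get("Resource", [])):
            if resource == "*":
                warnings.append("wildcard resource: *")
    return warnings


# Hypothetical bucket and prefix, used only for illustration.
policy = read_only_policy("example-reports-bucket", "monthly/")
print(json.dumps(policy, indent=2))
print(broad_grants(policy))
```

The narrow policy produces no warnings, while a statement such as `"Action": "s3:*"` on `"Resource": "*"` would be flagged on both counts.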

Network security architecture also involves trade-offs. For instance, Amazon’s Virtual Private Cloud (VPC) delivers strong workload isolation, but establishing complex networking setups can demand considerable knowledge. In contrast, Google Cloud’s global VPC simplifies multi-region networking by default, a significant benefit for globally distributed applications. However, this convenience may not provide the precise regional control that some organisations need for specific compliance mandates.

Compliance and Data Sovereignty

For businesses governed by strict regulatory frameworks like GDPR, HIPAA, or local data protection laws in India, compliance is a critical requirement. Although all major providers hold an extensive list of certifications, their scope and application differ. A provider might be SOC 2 compliant, but this doesn’t guarantee every service they offer is covered by that certification. You must verify that the specific services you intend to use are included in the relevant compliance reports.
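The scope check described above amounts to a set difference. In this minimal sketch the service names and the in-scope list are hypothetical placeholders; in practice the in-scope list would be transcribed from the provider’s published documentation for the specific certification.

```python
def out_of_scope(planned_services, certified_services):
    """Return planned services that the compliance report does not cover."""
    return sorted(set(planned_services) - set(certified_services))


# Hypothetical inputs for illustration only.
planned = ["object-storage", "managed-sql", "new-ai-service"]
in_scope_for_soc2 = ["object-storage", "managed-sql", "virtual-machines"]

gaps = out_of_scope(planned, in_scope_for_soc2)
print(gaps)
```

Any service that appears in the result needs either a compensating control or a different service choice before it handles regulated data.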

Data sovereignty, the principle that data is subject to the laws of the country where it is stored, is another crucial factor. Providers with local data centre regions in India, including AWS, Azure, and GCP, present a direct way to meet these requirements. Yet, how they handle data replication, backup, and service administration across borders can still have legal implications. A thorough cloud security assessment is necessary to analyse how a provider’s architecture aligns with your specific compliance and data sovereignty needs. This deeper analysis moves beyond ticking boxes on a compliance checklist to fully understanding the operational realities of securing your data on their platform.

Service Ecosystems: What Actually Adds Value

A detailed comparison of cloud providers must look beyond core infrastructure to evaluate the breadth, depth, and integration quality of their service ecosystems. While compute and storage are the foundation, the real business advantage often emerges from higher-level services like AI/ML platforms, managed databases, and tools that boost developer productivity. The maturity of these ecosystems is what separates a cohesive platform from a mere collection of separate services.

The quality of service integration is a significant differentiator. A provider might list dozens of services, but if they don’t communicate effectively, developers are forced to build custom bridges, which defeats a key benefit of the cloud. For instance, AWS has created a deeply interconnected ecosystem where services like Lambda, S3, and DynamoDB are designed to function together with minimal friction. This tight integration speeds up development and lessens operational overhead, making it a powerful option for organisations creating complex, event-driven systems.

Innovation vs. Feature Parity

When assessing ecosystems, it is important to distinguish between genuine innovation and simple feature matching. Some providers are pioneers in specific areas, while others often follow their lead.

- Google Cloud Platform (GCP) has long been a frontrunner in data analytics and machine learning, drawing on its company’s internal expertise. Services such as BigQuery and Vertex AI frequently set the industry standard, driving new ways for businesses to process and analyse information.

- Microsoft Azure, with its deep history in enterprise software, is excellent at creating a unified experience for businesses already invested in Microsoft products. The integration between Azure services, Microsoft 365, and the Power Platform presents a very attractive ecosystem for corporate digital projects.

- AWS demonstrates its maturity through a vast catalogue of over 200 services, offering an unmatched selection. While this huge range can seem daunting, it guarantees that a tool is available for nearly any conceivable use case, from the Internet of Things (IoT) to quantum computing.

This ecosystem-level view is especially important for high-growth markets. In India, the cloud computing market is expanding quickly, fuelled by digital initiatives across many sectors. By 2025, the public cloud market in India is expected to reach $15.37 billion, with Infrastructure as a Service (IaaS) accounting for the largest share. As companies expand, their dependence on a provider’s integrated services for functions like AI and data management increases significantly. You can explore more about the projected growth of India’s public cloud market.

Situational Recommendations and Vendor Lock-In

The choice of ecosystem has direct consequences for vendor lock-in. Adopting a provider’s specialised database or AI service can create strong dependencies. This is not necessarily a bad thing if the service provides considerable value, but it is a strategic decision. For startups focused on rapid growth, an all-in-one ecosystem from AWS or GCP can reduce time-to-market. In contrast, a large enterprise might take a “best-of-breed” approach, using a provider like Snowflake for data warehousing while running compute tasks on Azure.

The crucial point is to consciously select an ecosystem that aligns with your long-term business objectives. This involves understanding the trade-off between the convenience of a single integrated platform and the flexibility of a multi-vendor strategy.

Real-World Scenarios: Matching Providers To Your Needs

A theoretical comparison of cloud providers only gains meaning when applied to real business challenges. The “best” provider is not a fixed title; it’s a decision that depends entirely on your specific workload, growth plans, and technical stack. Examining practical implementation scenarios helps shift the conversation from feature lists to tangible business outcomes. By analysing these situations, you can arrive at a more informed choice that fits your operational reality.

This situational analysis is crucial for avoiding common missteps. A startup’s priorities, such as rapid development and cost-effective scaling, are fundamentally different from those of a large enterprise focused on migrating legacy systems with minimal disruption. These distinct needs highlight why a one-size-fits-all recommendation is rarely helpful. Let’s explore some common business scenarios to see which providers are better suited for specific challenges.

Scenario 1: The Fast-Scaling Startup

A startup developing a consumer-facing mobile application requires a platform that facilitates quick iteration, manages unpredictable traffic spikes, and offers a strong set of managed services to reduce operational load. The primary objective is to direct engineering efforts towards product innovation, not infrastructure management.

- Top Contenders: AWS and Google Cloud Platform (GCP).

- Analysis: AWS is a frequent choice for startups because of its vast portfolio of services and dominant market position. Offerings like AWS Lambda for serverless computing, Amazon S3 for object storage, and various managed databases enable a small team to construct a highly scalable architecture without managing physical servers. The extensive documentation and active community support are also significant advantages for teams learning as they expand.

- Situational Recommendation: GCP presents a very compelling alternative, particularly for startups concentrated on data analytics and AI. Its developer-centric experience, robust Kubernetes support through GKE, and powerful tools like BigQuery can provide a significant advantage. For a startup prioritising simple, predictable billing in its initial phases, a provider like DigitalOcean offers a straightforward platform that can serve as a cost-effective starting point before a potential migration to a major provider later on.

Scenario 2: The Enterprise Migrating Legacy Systems

An established company with considerable on-premises infrastructure, including Microsoft-based applications and Oracle databases, is executing a phased cloud migration. The main goals are to preserve business continuity, ensure smooth integration with current systems, and make use of the existing team’s skills.

- Top Contenders: Microsoft Azure and Oracle Cloud Infrastructure (OCI).

- Analysis: Microsoft Azure is the clear frontrunner for organisations deeply integrated with the Microsoft ecosystem. Azure’s hybrid cloud features, especially through Azure Arc, are built to manage both on-premises and cloud resources from a unified control plane. The native integration with Microsoft Entra ID (formerly Azure AD) and Microsoft 365 simplifies identity management, lowering the learning curve for IT personnel.

- Situational Recommendation: For organisations where critical workloads depend on Oracle databases, OCI offers a strong value proposition. It is engineered for high-performance computing and provides notable cost and performance benefits for running Oracle software. OCI’s very low data egress fees, with 10 TB free per month, also make it an attractive choice for data-intensive applications. A multi-cloud strategy often makes sense here, where an enterprise might use Azure for its Microsoft applications and OCI for its specialised database needs.

Scenario 3: High-Performance Computing and Big Data Analytics

A research institution must process enormous datasets and execute complex computational simulations. The key requirements include access to specialised hardware like GPUs, high-throughput networking for data pipelines, and scalable, cost-effective storage for petabytes of information.

- Top Contenders: Google Cloud Platform and AWS.

- Analysis: GCP has established a solid reputation in this domain, drawing on Google’s internal experience with managing immense datasets. Its high-performance network and leading data analytics services like BigQuery offer a powerful platform for large-scale data processing. GCP’s work in AI and machine learning, supported by custom hardware like Tensor Processing Units (TPUs), gives it a distinct advantage for complex analytical workloads.

- Situational Recommendation: AWS is also a formidable contender, providing a broad selection of GPU-powered instances and services like AWS Batch for managing large-scale computing jobs. For workloads that require aggressive cost optimisation, using AWS Spot Instances can lower compute costs by up to 90%, though this necessitates designing applications for fault tolerance. The decision frequently depends on the specific tools and network architecture that align best with the research team’s workflow.
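Designing for fault tolerance on interruptible capacity usually comes down to saving progress so that a replacement instance can resume. The sketch below shows one generic pattern, file-based checkpointing, and is not tied to any provider API: the `should_stop` callback is a hypothetical stand-in for however your environment signals an impending interruption, and a real job would keep the checkpoint on durable shared storage rather than local disk.

```python
import json
import os
import tempfile


def run_batch(items, checkpoint_path, process, should_stop=lambda: False):
    """Process items in order, persisting progress so a restart resumes where it stopped.

    Returns True when every item has been processed, False if stopped early.
    """
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_index"]

    for index in range(start, len(items)):
        if should_stop():  # stand-in for an interruption notice
            return False
        process(items[index])
        # Write to a temporary file and rename it, so an interruption cannot
        # leave a half-written checkpoint behind.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"next_index": index + 1}, f)
        os.replace(tmp_path, checkpoint_path)
    return True


if __name__ == "__main__":
    done = []
    with tempfile.TemporaryDirectory() as workdir:
        checkpoint = os.path.join(workdir, "progress.json")
        # The first run is "interrupted" after four items; the second run resumes.
        run_batch(list(range(10)), checkpoint, done.append,
                  should_stop=lambda: len(done) >= 4)
        run_batch(list(range(10)), checkpoint, done.append)
    print(done)
```

An interruption between processing an item and writing the checkpoint causes that item to be processed again on restart, so `process` should be safe to repeat.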

Your Decision Framework: Making The Right Choice

Selecting a cloud provider demands more than a simple feature-for-feature checklist. It requires a structured evaluation process that translates your organisation’s technical needs and business objectives into clear, actionable criteria. This approach ensures your final choice is grounded in a deep understanding of your specific requirements, not just marketing claims or generic advice. It’s about prioritising what truly matters for your long-term success, from raw performance to the subtleties of vendor support and contract terms.

Structuring Your Evaluation

The initial step is to convert broad goals into specific, measurable questions. Instead of just asking, “Which provider is cheapest?” you should ask, “Which provider offers the most predictable cost model for our specific workload profile over the next two years?”

Here are key questions to build your evaluation around:

- Workload Alignment: Does the provider’s core strength align with our primary use case? Consider enterprise integration for Azure, data analytics for Google Cloud, or service breadth for AWS.

- Cost Predictability: Beyond raw compute rates, how will data egress fees, storage transaction costs, and support plans impact our total cost of ownership (TCO)?

- Skill Set Compatibility: Does our team have the necessary skills to manage this platform effectively, or will a steep learning curve slow down critical projects?

- Vendor Relationship: What level of technical support is included? What is the provider’s reputation for responding to issues and partnering with customers for long-term success?
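One way to turn these questions into a comparable result is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-to-5 scores, and the provider labels are all hypothetical, and your own evaluation would supply them from workshops and testing.

```python
def weighted_scores(weights, scores):
    """Combine per-criterion scores into one weighted total per provider."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    totals = {}
    for provider, by_criterion in scores.items():
        missing = set(weights) - set(by_criterion)
        if missing:
            raise ValueError(f"{provider} is missing scores for: {sorted(missing)}")
        totals[provider] = sum(weights[c] * by_criterion[c] for c in weights)
    return totals


# Hypothetical weights reflecting how much each question matters to one team.
weights = {
    "workload_alignment": 0.4,
    "cost_predictability": 0.3,
    "skill_set": 0.2,
    "vendor_relationship": 0.1,
}

# Hypothetical scores on a 1 (poor) to 5 (excellent) scale.
scores = {
    "Provider A": {"workload_alignment": 4, "cost_predictability": 3,
                   "skill_set": 5, "vendor_relationship": 3},
    "Provider B": {"workload_alignment": 5, "cost_predictability": 4,
                   "skill_set": 2, "vendor_relationship": 4},
}

totals = weighted_scores(weights, scores)
for provider, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{provider}: {total:.2f}")
```

Agreeing on the weights before scoring any provider helps keep the result from being tuned towards a preferred answer.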

Building a solid strategy for your cloud journey is an excellent starting point. Our guide on creating a Cloud Adoption Framework can help you establish the foundational pillars for this process.

Meaningful Proof-of-Concept (PoC) Testing

A well-designed Proof-of-Concept (PoC) is the most effective method for validating a provider’s capabilities against your actual needs. To make a PoC meaningful, focus on testing a representative slice of your most critical or demanding workload. For example, if your application is data-intensive, your PoC should simulate realistic data processing and transfer scenarios, not just test basic virtual machine performance.

Establish clear success metrics before you begin. These should be tied to business outcomes, such as:

- Achieving a target transaction processing time under simulated peak load.

- Confirming that deployment automation scripts run without significant changes.

- Validating that security controls can be configured to meet your specific compliance requirements.
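A success metric such as the first one above is easiest to enforce when it is written as an automated check. The sketch below uses the nearest-rank method to compute a percentile from measured latencies; the choice of the 95th percentile, the sample values, and the 200 ms target are assumptions for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_target(latencies_ms, target_ms, pct=95):
    """True when the chosen percentile of measured latencies is within the target."""
    return percentile(latencies_ms, pct) <= target_ms


if __name__ == "__main__":
    # Hypothetical measurements from a load test, in milliseconds.
    measured = [110, 120, 125, 130, 140, 150, 155, 160, 180, 450]
    print(percentile(measured, 95), meets_target(measured, target_ms=200))
```

Judging the PoC on a high percentile instead of the average keeps a handful of very slow requests from being hidden by many fast ones.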

This hands-on testing also offers an invaluable opportunity to interact with the provider’s billing and cost management tools.

The AWS Pricing Calculator, for example, helps in estimating costs for specific configurations.

Tools like this are essential for modelling different scenarios and understanding the cost implications of architectural choices before you commit.

Avoiding Common Selection Mistakes

One of the most frequent errors is underestimating the importance of contract terms and vendor lock-in. Pay close attention to data egress policies, as high fees can make a future migration prohibitively expensive. Another mistake is choosing a provider based on a single feature, only to find its broader ecosystem is a poor fit for your team. Your final decision should be a balanced one, weighing technical merit, cost-effectiveness, and the quality of the partnership you are entering.

Building a flexible cloud strategy is key. By carefully evaluating providers through a structured framework and rigorous testing, you can make a choice that supports your business today and adapts to your needs tomorrow.
