Streamlining Your Development with Continuous Integration

Continuous integration (CI) best practices are crucial for faster development cycles, higher quality code, and more frequent, reliable releases. This listicle presents eight continuous integration best practices to optimize your development pipeline. By implementing these practices, your team can improve efficiency and achieve key business goals. Learn how to maintain a single source repository, automate builds, implement self-testing builds, commit daily, maintain build speed, test in production-like environments, simplify access to executables, and ensure build transparency.

1. Maintain a Single Source Repository

Maintaining a single source repository is a cornerstone of effective continuous integration (CI) and, by extension, successful software development. This practice involves consolidating all code, configuration files, scripts, and other related assets into one version control system (VCS). This VCS then serves as the definitive source of truth for the entire project. This centralized approach ensures all team members, whether they’re developers, testers, or operations personnel, work from the same codebase. This shared foundation drastically reduces integration conflicts, maintains consistency across the development environment, and fosters seamless collaboration.

A single source repository, often called a “monorepo” in its purest form, lives in a version control system such as Git, SVN, or Mercurial. Branching strategies, such as GitFlow or trunk-based development, are crucial for managing parallel development efforts within the repository. This setup gives all team members visibility into the complete codebase, fostering transparency and shared understanding. Access control and permissions built into the VCS or its hosting platform add further security and control over the codebase.

This approach deserves its place as a top continuous integration best practice due to several key benefits. It provides a single source of truth, simplifying the tracking of changes and versioning. This, in turn, facilitates easier code reviews and streamlines collaboration, particularly valuable for distributed teams. Moreover, a single source repository enables more reliable build and deployment processes, laying the groundwork for robust CI/CD pipelines.

Features and Benefits:

- Centralized Version Control: Using established systems like Git ensures efficient code management and history tracking.

- Branching Strategies: Implementing strategies like GitFlow allows for organized parallel development and feature isolation.

- Complete Codebase Visibility: All team members have access to the entire project, fostering collaboration and understanding.

- Integrated Access Control: Permissions management within the VCS enhances security and ensures controlled access to the codebase.

- Simplified Tracking and Versioning: Changes are easily tracked and managed, leading to a clear development history.

- Easier Code Reviews and Collaboration: A shared codebase simplifies the review process and encourages collaboration among team members.

- Reliable Build and Deployment Processes: Consistent codebase leads to more dependable and predictable build and deployment pipelines.

Pros and Cons:

Pros:

- Provides a single source of truth for all code

- Simplifies tracking of changes and versioning

- Facilitates easier code reviews and collaboration

- Enables more reliable build and deployment processes

Cons:

- May become unwieldy for very large projects

- Requires careful management of branching strategies

- Can create bottlenecks with many developers working simultaneously

- Initial setup and migration can be complex

Examples of Successful Implementation:

Industry giants like Google, Microsoft, and Facebook leverage the power of single source repositories. Google maintains a massive monorepo containing billions of lines of code. Microsoft transitioned to a unified repository for Windows development, streamlining their development process. Facebook also uses a large monorepo for a significant portion of their codebase.

Actionable Tips for Implementation:

- Implement Clear Branching Policies: Establish well-defined branching strategies appropriate for your team size and project complexity.

- Consider Alternatives for Massive Projects: If a complete monorepo isn’t feasible, explore using Git submodules or subtrees to manage dependencies and modularize your codebase.

- Secure Your Repository: Set up appropriate access controls and permissions to protect your code and intellectual property.

- Manage Large Files Effectively: Consider using tools like Git LFS (Large File Storage) for handling large binary files, preventing repository bloat.

- Document Everything: Clearly document your repository structure and contribution guidelines to ensure smooth onboarding and consistent code contributions.

When and Why to Use this Approach:

This approach is particularly beneficial for fast-moving development teams, startups, and projects that require high levels of integration and collaboration. It’s highly recommended for teams practicing continuous integration and continuous delivery (CI/CD), as it provides a stable and consistent foundation for automated build and deployment pipelines. While it can present challenges for extremely large projects, the benefits of a single source repository often outweigh the complexities, particularly when coupled with well-defined branching strategies and robust tooling.

2. Automate the Build Process

A cornerstone of continuous integration best practices is automating the build process. This involves transitioning from manual execution of build steps to a scripted or declarative approach. Instead of developers manually compiling code, running tests, and packaging applications, these tasks are handled by automated systems. This ensures builds are reproducible, consistent, and can be triggered automatically whenever code changes are committed, or on a scheduled basis. This consistency is crucial for continuous integration, enabling faster feedback cycles and quicker identification of integration issues.

Automated build processes typically share several features. Script-based or declarative build configurations give you flexibility in how you define the pipeline. Dependency management and resolution mechanisms ensure that all required libraries and components are fetched and integrated correctly. The process culminates in automatically generated artifacts, which can be anything from compiled binaries and deployment packages to container images. Automation also keeps the build environment consistent, so builds behave predictably across different environments. Finally, many build tools support parallel execution, which can significantly reduce build times and improve resource utilization.

This practice deserves its place on the list of continuous integration best practices because it significantly improves efficiency and reliability. Manual builds are prone to human error and inconsistencies, whereas automation eliminates these risks, leading to more stable and predictable deployments.
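At its simplest, a scripted build is an ordered list of commands that stops at the first failure. The following is a minimal sketch of that idea, not a substitute for a real build tool. The step names and the placeholder commands in `EXAMPLE_STEPS` are assumptions made for illustration.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple

Step = Tuple[str, Sequence[str]]  # (step name, command to execute)


def run_pipeline(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run each step in order and stop at the first non-zero exit code.

    Returns (succeeded, names_of_steps_that_were_started).
    """
    started: List[str] = []
    for name, command in steps:
        started.append(name)
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            print(f"step '{name}' failed with exit code {result.returncode}")
            return False, started
    return True, started


# Hypothetical steps: each placeholder only invokes the Python interpreter.
# A real project would call its compiler, test runner, and packager here.
EXAMPLE_STEPS: List[Step] = [
    ("compile", [sys.executable, "-c", "print('compiling')"]),
    ("test", [sys.executable, "-c", "print('testing')"]),
    ("package", [sys.executable, "-c", "print('packaging')"]),
]
```

Calling `run_pipeline(EXAMPLE_STEPS)` runs the three placeholder steps in order. Because the function stops at the first failing step, a broken compile never reaches packaging, which is the behavior a CI server relies on.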

Examples of Successful Implementation:

- Netflix: Employs extensive build automation to enable thousands of deployments per day, showcasing the scalability and robustness of automated build systems.

- Google (Bazel): Utilizes its powerful Bazel build system to manage its enormous codebase, demonstrating the ability to handle complex projects with numerous dependencies.

- Amazon: Leverages automated build systems to manage its diverse microservices architecture, highlighting the flexibility and adaptability of these systems.

Pros:

- Eliminates manual build errors and inconsistencies

- Saves developer time and increases productivity

- Creates reproducible builds across different environments

- Enables faster feedback cycles

- Facilitates better resource utilization through parallel processing

Cons:

- Initial setup requires effort and expertise

- Build scripts require maintenance as the project evolves

- Complex builds may have long execution times

- Tools and plugins may have compatibility issues

Tips for Implementing Automated Builds:

- Version Control: Keep build scripts in version control alongside your code. This allows for tracking changes and reverting to previous versions if necessary.

- Appropriate Tools: Use build tools appropriate for your technology stack (e.g., Maven or Gradle for Java, npm or Yarn for JavaScript).

- Containerization: Containerize build environments for consistency and portability across different platforms. Tools like Docker are highly recommended.

- Caching: Implement caching strategies to speed up builds by reusing previously built artifacts.

- Parallelism: Break large builds into smaller, parallel steps where possible to take advantage of multi-core processors and reduce build times.

- Documentation: Document build requirements and dependencies clearly for easier maintenance and troubleshooting.
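The caching tip above usually comes down to deriving a key from everything that can affect a build's output and reusing the stored artifact when the key matches. Here is a minimal sketch of that idea. `BuildCache` is a hypothetical in-memory stand-in for a shared cache, and `compile_fn` is an assumed callback that represents the real build step.

```python
import hashlib
from typing import Callable, Dict


def cache_key(inputs: Dict[str, bytes], tool_version: str) -> str:
    """Derive a deterministic key from file contents and the tool version."""
    digest = hashlib.sha256()
    digest.update(tool_version.encode("utf-8"))
    for path in sorted(inputs):  # sorted so dict ordering never changes the key
        digest.update(b"\0" + path.encode("utf-8") + b"\0")
        digest.update(hashlib.sha256(inputs[path]).digest())
    return digest.hexdigest()


class BuildCache:
    """In-memory stand-in for a shared artifact cache (hypothetical)."""

    def __init__(self) -> None:
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def build(
        self,
        inputs: Dict[str, bytes],
        tool_version: str,
        compile_fn: Callable[[Dict[str, bytes]], bytes],
    ) -> bytes:
        """Return a cached artifact when the inputs are unchanged."""
        key = cache_key(inputs, tool_version)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        artifact = compile_fn(inputs)  # only runs on a cache miss
        self._store[key] = artifact
        return artifact
```

Including the tool version in the key is a deliberate choice: upgrading the compiler invalidates old artifacts even when no source file changed.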

Popular Tools & Influencers:

- Jenkins: Open-source automation server widely used for continuous integration and continuous delivery.

- Travis CI: Continuous integration service provider that integrates seamlessly with GitHub.

- CircleCI: Continuous integration/continuous delivery platform offering cloud-based and self-hosted solutions.

- GitHub Actions: Workflow automation tool integrated directly into GitHub, enabling streamlined build and deployment processes.

- Jez Humble and David Farley: Co-authors of “Continuous Delivery,” a seminal work on continuous delivery practices.

By implementing these continuous integration best practices, particularly automating your build process, organizations of any size or industry can significantly improve their software development lifecycle, accelerating time to market and delivering higher-quality software.

3. Make Builds Self-Testing

A cornerstone of effective continuous integration (CI) is the practice of making builds self-testing. This means integrating automated tests directly into your build process. Whenever new code is committed and a build is triggered, a comprehensive suite of tests automatically runs, verifying code functionality, integration points, and checking for regressions. This continuous validation of code quality prevents defects from propagating to later stages of development or, worse, into production. It allows developers to catch issues early, when they are easier and less expensive to fix. This is crucial for maintaining a rapid and reliable development lifecycle, a key element of successful continuous integration best practices.

Self-testing builds incorporate various levels of testing, ensuring comprehensive coverage. These levels typically include:

- Unit Tests: These test individual components or functions in isolation to ensure they work correctly.

- Integration Tests: These verify the interaction between different modules and services, ensuring they function correctly together.

- System Tests: These test the entire system as a whole, simulating real-world scenarios.

- UI Tests: These focus on the user interface, ensuring that the application behaves as expected from the user’s perspective.

The build process generates reports and metrics based on the test results, providing valuable insights into code quality and potential issues. Notifications are automatically sent if the build fails due to failing tests, alerting the development team to take immediate action. Code coverage analysis is often included to measure how much of the codebase is actually being tested.
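The link between tests and build status can be shown with a short sketch: the build step runs the suite and turns the result into an exit code that the CI server treats as pass or fail. The example uses Python's standard `unittest` module. `apply_discount` and `DiscountTests` are hypothetical names invented for illustration.

```python
import io
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    def test_applies_percentage(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


def build_exit_code(test_case: type) -> int:
    """Run a TestCase class; return 0 if every test passed, otherwise 1."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_case)
    # Capture the runner's report in memory instead of writing to stderr.
    result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    return 0 if result.wasSuccessful() else 1
```

A build script would end with something like `sys.exit(build_exit_code(DiscountTests))`, so that any failing test marks the whole build as failed.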

Pros of Self-Testing Builds:

- Immediate Identification of Regressions and Integration Issues: Bugs are caught early, significantly reducing debugging time and effort.

- Reduced Manual Testing Burden: Automation frees up testers to focus on exploratory testing and other critical tasks.

- Increased Confidence in Code Changes: Developers can deploy code with greater confidence, knowing that it has passed a comprehensive suite of tests.

- Living Documentation Through Test Cases: Test suites act as living documentation, providing a clear picture of the system’s behavior.

- Improved Code Design: The need for testability often leads to better code design and modularity.

Cons of Self-Testing Builds:

- Upfront Investment in Test Automation: Creating and maintaining automated tests requires significant initial effort.

- Increased Build Times: Running extensive test suites can add to the overall build time.

- Flaky Tests: Tests that sometimes pass and sometimes fail (“flaky tests”) can cause false build failures and erode trust in the CI process.

- Maintenance Overhead: Test suites need to be maintained and updated as the codebase evolves.
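One rough way to surface flaky tests is to look for tests that both passed and failed against the same commit, since the code did not change between those runs. The sketch below assumes a run history of `(test name, commit id, passed)` tuples. That record shape is an assumption, not the format of any particular CI tool.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Run = Tuple[str, str, bool]  # (test name, commit id, passed)


def find_flaky_tests(runs: Iterable[Run]) -> Set[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    # Two distinct outcomes for identical code point to nondeterminism.
    return {test for (test, _), seen in outcomes.items() if len(seen) == 2}
```

A test that fails consistently on one commit and passes on another is not flagged, because that pattern is what a genuine regression or fix looks like.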

Examples of Successful Implementation:

- Google reportedly runs over 150 million tests daily as part of their CI/CD pipeline.

- Microsoft’s shift to test-driven development for Windows development demonstrates the value of self-testing builds at scale.

- Spotify’s robust testing strategy enables them to achieve over 200 production deployments per day.

Tips for Implementing Self-Testing Builds:

- Prioritize Test Speed: Optimize tests for speed to maintain fast feedback cycles.

- Implement a Testing Pyramid: Focus on having more fast-running unit tests and fewer slower UI tests.

- Utilize Test Parallelization: Run tests in parallel to reduce overall build time.

- Create Dedicated Test Environments: Replicate production environments as closely as possible for accurate testing.

- Implement Test Data Management Strategies: Manage test data effectively to ensure consistent and reliable test results.

- Monitor Test Metrics: Track key metrics like code coverage, execution time, and failure rates to identify areas for improvement.

Key Figures and Tools:

The principles of self-testing builds are deeply rooted in the work of figures like Kent Beck (creator of Test-Driven Development) and Martin Fowler (advocate for Continuous Integration testing). Tools like JUnit (unit testing framework), Selenium (web UI testing framework), and Cucumber (behavior-driven development tool) have played crucial roles in facilitating automated testing.

Self-testing builds are an essential best practice for continuous integration, offering significant advantages for startups, enterprise IT departments, cloud architects, DevOps teams, and business decision-makers alike. By automating the testing process, organizations can improve code quality, accelerate development cycles, and deliver software with greater confidence. The initial investment in test automation pays dividends in the long run through reduced bugs, faster time to market, and increased customer satisfaction.

4. Everyone Commits to the Mainline Every Day

This continuous integration best practice, often referred to as trunk-based development, emphasizes integrating code changes into the main branch (typically ‘main’ or ‘trunk’) at least once per day. This seemingly simple act has profound implications for development workflows and drastically improves the overall software delivery process. It’s a cornerstone of effective continuous integration and a key differentiator between high-performing development teams and those struggling with integration headaches.

How it Works:

Instead of developers working in isolation on long-lived feature branches, they commit small, incremental changes directly to the mainline. This encourages frequent merging and early detection of integration conflicts. For features that are not yet ready for release, techniques like feature toggles (also known as feature flags) are used to hide the incomplete functionality from end-users. For larger, more disruptive changes, the branch by abstraction pattern can be employed to incrementally refactor and integrate the new functionality while maintaining a working mainline.
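A feature toggle can be as small as a lookup that defaults to "off". The sketch below is a minimal in-memory illustration. `FeatureToggles`, `checkout_total`, and the `bulk_discount` flag are hypothetical names, and a production system would typically load flags from configuration or a flag service.

```python
from typing import Dict, Optional, Sequence


class FeatureToggles:
    """Minimal in-memory toggle registry; unknown flags default to off."""

    def __init__(self, flags: Optional[Dict[str, bool]] = None) -> None:
        self._flags = dict(flags or {})

    def is_enabled(self, name: str) -> bool:
        return self._flags.get(name, False)


def checkout_total(prices: Sequence[float], toggles: FeatureToggles) -> float:
    """Hypothetical code path with an unfinished feature behind a toggle."""
    total = sum(prices)
    # The discount code is merged to the mainline but stays hidden
    # from users until the flag is switched on.
    if toggles.is_enabled("bulk_discount") and len(prices) >= 3:
        total *= 0.9
    return round(total, 2)
```

With an empty `FeatureToggles()`, `checkout_total` behaves exactly as it did before the new code was merged, which is what keeps the mainline releasable while the feature is still in progress.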

Examples of Successful Implementation:

Industry giants like Facebook, Google, and Etsy have demonstrated the power of daily mainline integration. Facebook, for instance, sees thousands of commits to their main branch every day, showcasing the scalability of this approach. Google employs trunk-based development across its massive codebase, and Etsy practices continuous deployment directly from their trunk to production, emphasizing the stability and releasability achieved through this practice.

Actionable Tips:

- Pre-Commit Hooks: Implement pre-commit hooks to automatically run linters, formatters, and basic tests before every commit. This catches simple errors early and prevents them from reaching the mainline.

- Feature Toggles: Use feature toggles extensively. This allows you to merge unfinished features into the mainline without impacting users. You can then activate the feature when it’s ready.

- Pull-Based Development and Code Reviews: Submit changes through small, short-lived pull requests so that review does not delay daily integration. Review each change before it merges into the mainline to ensure code quality and catch potential issues.

- Branch Policies: Enforce branch policies in your version control system (like GitHub, GitLab, or Bitbucket) that require passing tests before a merge is allowed. This helps maintain a stable and functional main branch.

- Pair Programming: For particularly complex changes, consider pair programming. This fosters collaboration and knowledge sharing, leading to higher quality code and fewer integration problems.

- Small, Deliverable Increments: Break down large features into smaller, independently deliverable increments. This allows for more frequent integration and reduces the risk of large, complex merges.

When and Why to Use This Approach:

This practice is highly beneficial for most development teams, particularly those practicing continuous integration and continuous delivery. It’s especially valuable for:

- Startups and Early-Stage Companies: Rapid iteration and quick feedback loops are crucial in early stages, and daily mainline integration facilitates this.

- Enterprise IT Departments: For large organizations, this approach can significantly improve code quality, reduce integration complexity, and speed up delivery.

- Cloud Architects and Developers: Cloud environments are inherently dynamic, and frequent integration ensures compatibility and reduces deployment risks.

- DevOps and Infrastructure Teams: This practice aligns perfectly with DevOps principles of automation, collaboration, and continuous improvement.

- Business Decision-Makers and CTOs: Daily mainline integration leads to faster time-to-market, reduced development costs, and increased business agility.

Pros and Cons:

Pros:

- Reduces merge conflicts and integration headaches.

- Provides early visibility into integration issues.

- Promotes smaller, more manageable code changes.

- Encourages continuous collaboration among team members.

- Maintains a constantly releasable main branch.

Cons:

- May be challenging for teams used to long-lived feature branches.

- Requires disciplined use of feature toggles for incomplete work.

- Can be difficult with interdependent changes across teams.

- Requires strong automated testing to maintain quality.

Why this Item Deserves its Place in the List:

Committing to the mainline daily is a fundamental continuous integration best practice. It addresses the core problem of integration hell by encouraging frequent, small merges, and continuous collaboration. By adhering to this practice, teams can significantly improve code quality, accelerate delivery, and reduce the overall cost and complexity of software development. This practice is crucial for any team striving to embrace the benefits of a truly agile and efficient development process.

5. Keep the Build Fast

Maintaining fast builds is a cornerstone of effective continuous integration (CI), making it a critical best practice. Quick builds provide the rapid feedback developers need to catch and correct issues early, minimizing disruptions and maintaining a productive flow state. A sluggish build process, on the other hand, discourages frequent integration, leading to larger, more complex integrations and slowing down the entire development cycle. This is crucial for any team practicing continuous integration, from startups in India to established enterprise IT departments.

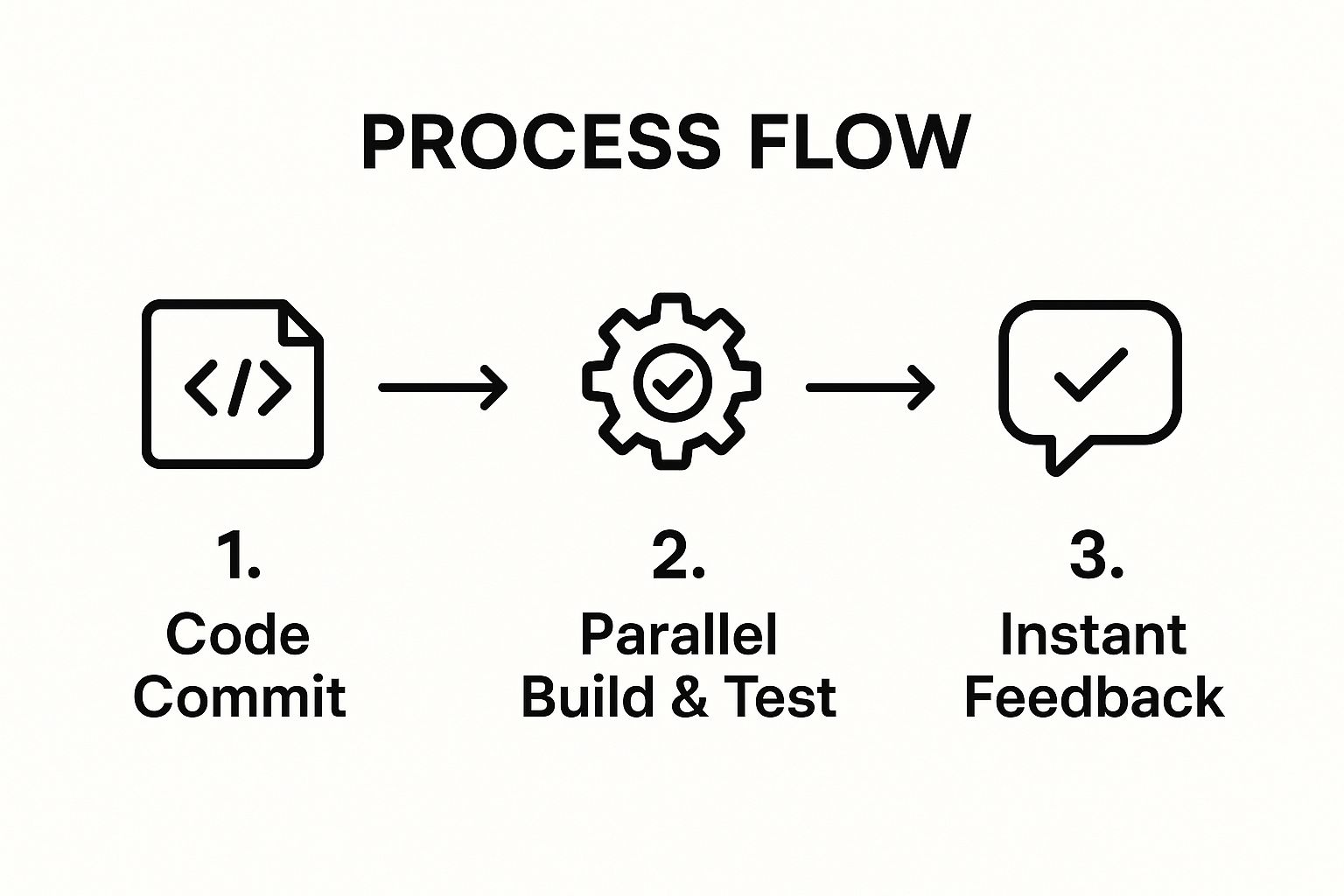

The infographic illustrates the process of optimizing build times within a CI/CD pipeline. The process starts with “Code Changes” and proceeds through “Build Trigger,” “Build Execution,” and “Test Execution,” finally culminating in “Deployment.” Each step highlights potential bottlenecks and corresponding optimization strategies, such as caching, parallelization, and incremental builds, underscoring the importance of streamlining each stage for optimal speed and efficiency. As the visualization clearly shows, optimizing each stage contributes to faster overall build times, which in turn reduces delays and ensures faster feedback loops.

Fast builds come from combining several optimization techniques: incremental builds that process only changed components, build parallelization and distributed execution across multiple machines, test suite optimization with selective test execution, and multi-stage build pipelines that run the quickest checks first. Together, these techniques shorten the feedback loop for every change.

This practice deserves its place in the list of continuous integration best practices because it directly impacts developer productivity and the overall efficiency of the development process. The benefits of fast builds are numerous: faster feedback to developers, encouragement of more frequent commits and integrations, reduced context switching for developers waiting for builds, increased team velocity and productivity, and a greater number of iterations and improvements achievable each day.

However, achieving and maintaining fast builds can also present challenges. It can require a significant engineering investment, potentially leading to trade-offs between build speed and the thoroughness of testing. Ongoing maintenance is necessary as the codebase grows, and additional infrastructure costs might be incurred to support distributed builds or caching mechanisms.

Despite these potential drawbacks, the advantages often outweigh the costs, especially for growing companies and enterprises. Examples of successful implementations highlight the value of investing in build speed. Google’s build system, for example, processes changes in under 10 minutes despite billions of lines of code, a testament to the power of dedicated build optimization. Facebook’s Buck build system, designed for their massive monorepo, provides another example of speed optimization at scale. Shopify, too, realized significant gains, reducing their build times from 40 minutes to under 10 minutes.

For companies in India and beyond, here are some actionable tips for keeping your builds fast:

- Separate fast tests (unit) from slower tests (integration, UI): This allows for quicker feedback on code changes.

- Implement tiered build pipelines with progressive validation: Start with quick checks and progress to more comprehensive tests later.

- Use build caching and incremental compilation: Avoid redundant work by caching build artifacts and compiling only changed code.

- Optimize test selection to run only relevant tests for changes: This significantly reduces test execution time.

- Consider distributed build systems like Bazel or Buck: Distribute the build workload across multiple machines for faster execution.

- Monitor build times and investigate increases promptly: Address any performance regressions immediately.

- Dedicate engineering time specifically to build optimization: Make build speed a priority and allocate resources accordingly.
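The test-selection tip above can be sketched as a lookup from changed files to the tests that exercise them, with a conservative fallback to the full suite when a change is not covered by the map. The contents of `TEST_MAP` are hypothetical. Real tools usually derive this mapping from the build graph or from coverage data.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical mapping from source files to the tests that exercise them.
TEST_MAP: Dict[str, Set[str]] = {
    "billing/invoice.py": {"tests/test_invoice.py", "tests/test_checkout.py"},
    "billing/tax.py": {"tests/test_tax.py"},
    "search/index.py": {"tests/test_search.py"},
}


def select_tests(
    changed_files: Iterable[str], test_map: Dict[str, Set[str]]
) -> List[str]:
    """Return the tests affected by a change.

    If any changed file is missing from the map, fall back to running
    every known test rather than risk skipping a relevant one.
    """
    all_tests: Set[str] = set().union(*test_map.values())
    selected: Set[str] = set()
    for path in changed_files:
        if path not in test_map:
            return sorted(all_tests)
        selected |= test_map[path]
    return sorted(selected)
```

The fallback trades speed for safety: an unmapped file such as a shared configuration file could affect anything, so the sketch runs the whole suite in that case.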

Thought leaders like Mike Cohn (advocate for fast feedback cycles), creators of build systems like Gradle (focused on build performance) and Bazel (Google’s open-source build tool), pioneers at Microsoft (in distributed build systems), and microservices advocate Sam Newman (emphasizing simplifying build complexity) have all popularized the importance of fast builds. By prioritizing build speed, organizations can create a more efficient and productive development environment, resulting in higher quality software delivered faster.

6. Test in a Production-like Environment

Testing in a production-like environment is a crucial continuous integration best practice that significantly reduces the risk of deployment failures and improves the reliability of software releases. This approach involves creating a testing environment that closely mirrors the actual production environment, replicating infrastructure, data patterns, network configurations, system dependencies, and even security policies. By doing so, you can validate that your application behaves consistently when deployed and catch environment-specific issues early in the development cycle, long before they impact your users. This is particularly important for continuous integration as it allows for rapid and reliable deployments.

How it Works:

The core principle is to minimize the differences between testing and production. This means using similar hardware, software versions, network topologies, and data sets. The closer the resemblance, the more accurate your testing results will be and the higher your confidence in a successful deployment. This can be achieved through various techniques like Infrastructure-as-Code, containerization, and data masking.
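One lightweight way to keep the two environments close is to compare their declared configuration and report every difference. The sketch below assumes each environment can be summarized as a flat dictionary of settings. The keys and values in the usage note are invented for illustration.

```python
from typing import Any, Dict, Tuple


def config_drift(
    production: Dict[str, Any], staging: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (production_value, staging_value)} for every mismatch.

    A key that is missing on one side is reported with None on that side.
    """
    drift: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(set(production) | set(staging)):
        prod_value = production.get(key)
        stage_value = staging.get(key)
        if prod_value != stage_value:
            drift[key] = (prod_value, stage_value)
    return drift
```

For example, comparing `{"postgres": "15.4", "tls": True}` with `{"postgres": "14.9"}` reports both the database version mismatch and the missing TLS setting. A CI job could fail whenever the returned dictionary is not empty.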

Features of a Production-like Environment:

- Infrastructure-as-Code (IaC): Tools like Terraform, CloudFormation, or Ansible allow you to define your infrastructure in code, ensuring consistent environment provisioning across testing, staging, and production.

- Containerization: Docker and Kubernetes enable packaging applications and their dependencies into portable containers, guaranteeing consistent execution across different environments.

- Production Data Sampling or Simulation: Using sanitized or simulated production data provides realistic test scenarios and helps uncover data-related bugs.

- Network Condition Replication: Simulate network latency, bandwidth limitations, and even network failures to test application resilience.

- Similar Scaling and Performance Characteristics: The test environment should have similar scaling capabilities to production to identify potential performance bottlenecks under load.

- Realistic Security Configurations: Implementing security policies and access controls mirroring production helps uncover vulnerabilities early on.

Why This Approach Matters:

This best practice directly addresses the pervasive “works on my machine” problem by eliminating the discrepancies between development, testing, and production environments. It also allows teams to validate non-functional requirements like performance, security, and scalability much earlier in the development lifecycle. This reduces firefighting during deployments and fosters a culture of proactive problem-solving.

Pros:

- Identifies environment-specific issues before production: Catching bugs early saves time and resources.

- Increases confidence in deployment success: Thorough testing in a realistic environment minimizes deployment anxieties.

- Helps validate non-functional requirements (performance, security): Ensures your application meets performance and security standards.

- Reduces “works on my machine” problems: Standardizes environments across the development pipeline.

- Better prepares teams for real-world operational challenges: Provides opportunities to practice incident response and troubleshooting in a safe environment.

Cons:

- Can be expensive to maintain duplicate infrastructure: Replicating production infrastructure can be costly, especially for complex setups.

- Requires sophisticated infrastructure automation: Managing and synchronizing environments requires robust automation tools and expertise.

- May introduce data privacy concerns with production-like data: Care must be taken to anonymize or mask sensitive data to comply with privacy regulations.

- Complex to keep synchronized with production changes: Maintaining parity between the production and test environments requires ongoing effort.

Examples of Successful Implementation:

- Netflix’s Simian Army: This suite of tools tests the resilience of Netflix’s infrastructure in production-like conditions by simulating various failure scenarios.

- Amazon’s extensive pre-production testing environments: Amazon utilizes rigorous pre-production testing environments to ensure the reliability of its vast e-commerce platform.

- Spinnaker continuous delivery platform: Originally built at Netflix and extended in collaboration with Google, Spinnaker facilitates consistent environment deployments across multiple cloud providers.

Actionable Tips:

- Use IaC tools: Leverage tools like Terraform, CloudFormation, or Ansible for consistent infrastructure provisioning.

- Containerize applications: Use Docker and Kubernetes for environment consistency and portability.

- Implement data masking/anonymization: Protect sensitive data while maintaining realistic test scenarios.

- Perform chaos engineering: Introduce controlled disruptions to test system resilience (e.g., using tools like Chaos Monkey).

- Automate environment provisioning and teardown: Streamline environment management and reduce manual effort.

- Consider ephemeral environments for pull requests or feature branches: Create temporary environments for testing specific code changes.

- Test database migrations and schema changes in production-like conditions: Validate database changes before deploying to production.
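The data masking tip above can be illustrated with deterministic pseudonymization: each sensitive value is replaced by a keyed hash, so the same input always yields the same token and relationships between records survive. This is a minimal sketch, not a compliance-grade anonymization scheme. The record fields, the `example.test` domain, and the secret are assumptions.

```python
import hashlib
import hmac


def mask_email(email: str, secret: bytes) -> str:
    """Replace an email address with a stable pseudonym.

    The same address always maps to the same token, so joins across
    tables still line up, but the original value is not kept.
    """
    normalized = email.strip().lower().encode("utf-8")
    token = hmac.new(secret, normalized, hashlib.sha256).hexdigest()[:16]
    return f"user_{token}@example.test"


def mask_record(record: dict, secret: bytes) -> dict:
    """Return a copy of a hypothetical customer record with PII masked."""
    masked = dict(record)  # copy so the source record is left untouched
    masked["email"] = mask_email(record["email"], secret)
    masked["name"] = "REDACTED"
    return masked
```

Using a keyed hash (HMAC) rather than a plain hash means the tokens cannot be reproduced by someone who knows a customer's address but not the secret.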

Who This Benefits:

This best practice is highly relevant for various stakeholders including:

- Startups and early-stage companies looking to build robust and scalable systems.

- Enterprise IT departments managing complex applications and infrastructure.

- Cloud architects and developers responsible for designing and deploying cloud-native applications.

- DevOps and infrastructure teams tasked with automating and optimizing the software delivery pipeline.

- Business decision-makers and CTOs concerned with application reliability and minimizing downtime.

By embracing the “Test in a Production-like Environment” best practice, organizations in India and globally can significantly improve their continuous integration processes, enhance software quality, and increase confidence in their releases. This approach fosters a proactive and preventative approach to software development, reducing the risk of costly production failures and empowering teams to deliver high-quality software at speed.

7. Make it Easy for Anyone to Get the Latest Executable

A crucial continuous integration best practice is ensuring easy access to the latest executable for all stakeholders. This involves setting up a robust and accessible artifact repository where build artifacts are stored, versioned, and easily retrievable. Streamlining access to executables facilitates smoother testing, simplified demonstrations, and consistent deployments, ultimately contributing to a more efficient and reliable CI/CD pipeline.

How it Works:

An artifact repository acts as a central hub for all your build outputs. When your CI pipeline completes a build, it publishes the resulting executable (along with other artifacts like libraries or documentation) to this repository. The repository assigns a unique version number to the build and stores metadata associated with it, such as the commit ID, branch information, and test results. Team members can then easily access and download the specific version they need through a user-friendly interface, ensuring everyone works with consistent and verified binaries.
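The metadata described above can be pictured as a small record published alongside each binary. The sketch below is only an illustration. `ArtifactRecord` and its field names are assumptions, and real artifact repositories define their own schemas. A content checksum is added so a downloaded binary can be verified against the record.

```python
import hashlib
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ArtifactRecord:
    """Hypothetical metadata stored next to a build artifact."""

    name: str
    version: str
    commit_id: str
    branch: str
    tests_passed: bool
    sha256: str


def describe_artifact(
    name: str,
    version: str,
    commit_id: str,
    branch: str,
    tests_passed: bool,
    content: bytes,
) -> ArtifactRecord:
    """Build the metadata record, including a checksum of the binary."""
    checksum = hashlib.sha256(content).hexdigest()
    return ArtifactRecord(name, version, commit_id, branch, tests_passed, checksum)


def verify(record: ArtifactRecord, content: bytes) -> bool:
    """Check that downloaded bytes match the published checksum."""
    return hashlib.sha256(content).hexdigest() == record.sha256
```

`asdict(record)` turns the record into a plain dictionary, which is convenient when the pipeline needs to publish the metadata as JSON.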

Examples of Successful Implementation:

- Large organizations like Microsoft and Google maintain extensive internal artifact repositories that serve thousands of developers, enabling them to easily share and access the latest builds of various software components.

- Spotify’s sophisticated release system, heavily reliant on artifact management, allows developers to deploy to production with confidence, leveraging automated versioning and deployment pipelines tied into their artifact repositories.

When and Why to Use This Approach:

Implementing an artifact repository should be a priority from the early stages of any software project. It becomes increasingly crucial as the project grows, the team expands, and the number of builds increases. This approach is particularly valuable in the following scenarios:

- Distributed Teams: Ensures consistent access to the latest executables regardless of location.

- Microservice Architectures: Simplifies management and deployment of numerous interconnected services.

- Frequent Releases: Facilitates rapid iteration and deployment of new features and bug fixes.

- Compliance Requirements: Provides a clear audit trail of build artifacts and their associated metadata.

Actionable Tips for Readers:

- Implement Semantic Versioning: Use a clear and consistent versioning scheme (e.g., major.minor.patch) for easy identification of builds.

- Automate Artifact Publishing: Integrate artifact publishing into your CI pipeline to ensure every successful build is automatically stored in the repository.

- Add Comprehensive Metadata: Include relevant information like commit IDs, branch info, and test results with each build artifact for better traceability and debugging.

- Create Self-Service Portals: Develop user-friendly interfaces or APIs to allow team members to easily search, browse, and download artifacts.

- Consider Release Channels: Establish different release channels (e.g., stable, beta, nightly) within the repository to cater to different testing and deployment needs.

- Implement Artifact Validation and Scanning: Integrate security scanning tools to identify vulnerabilities in your artifacts before they are deployed.

- Document the Process: Clearly document the process for accessing and using build artifacts from the repository.
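To make the versioning and release-channel tips concrete, here is a small Python sketch. It handles only the `major.minor.patch` core plus an optional pre-release suffix, and the mapping from suffix to channel (`nightly`, `beta`, `stable`) is an assumption made for illustration; your team may define channels differently.

```python
import re
from typing import NamedTuple, Optional

# Matches "major.minor.patch" with an optional "-prerelease" suffix.
_VERSION_PATTERN = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.-]+))?$")


class Version(NamedTuple):
    major: int
    minor: int
    patch: int
    prerelease: Optional[str] = None


def parse_version(text: str) -> Version:
    match = _VERSION_PATTERN.match(text)
    if match is None:
        raise ValueError(f"not a major.minor.patch version: {text!r}")
    major, minor, patch, prerelease = match.groups()
    return Version(int(major), int(minor), int(patch), prerelease)


def bump(version: Version, part: str) -> Version:
    """Return the next release version; lower-order parts reset to zero."""
    if part == "major":
        return Version(version.major + 1, 0, 0)
    if part == "minor":
        return Version(version.major, version.minor + 1, 0)
    if part == "patch":
        return Version(version.major, version.minor, version.patch + 1)
    raise ValueError(f"unknown version part: {part!r}")


def channel_for(version: Version) -> str:
    """Assumed convention: no suffix is stable, 'nightly...' is nightly, anything else is beta."""
    if version.prerelease is None:
        return "stable"
    if version.prerelease.startswith("nightly"):
        return "nightly"
    return "beta"
```

With this convention, a pipeline could publish `2.0.0-nightly.20240101` to the nightly channel automatically and promote `2.0.0` to stable only after testing.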

Pros:

- Ensures consistent access to verified build artifacts.

- Simplifies deployment and testing processes.

- Provides historical versioning for rollbacks.

- Reduces “works on my machine” issues.

- Enables non-technical stakeholders to access latest versions.

Cons:

- Requires management of artifact storage and retention.

- May need governance policies for compliance and security.

- Can consume significant storage for large applications.

- Needs proper versioning discipline.

Popularized By:

JFrog Artifactory, Sonatype Nexus, Docker Hub, GitHub Packages, and Maven Central are examples of widely used artifact repository platforms that can help you implement this continuous integration best practice. Choosing the right platform depends on your specific needs and the technology stack you’re using.

This practice deserves its place in the list of continuous integration best practices because it addresses a fundamental challenge in software development – ensuring that everyone involved has access to the correct, verified version of the software. By investing in a robust artifact management system, you can significantly improve your development workflow, reduce integration issues, and accelerate the delivery of high-quality software.

8. Ensure Transparent Build Process with Visible Results

A crucial best practice in continuous integration (CI) is ensuring a transparent build process with readily visible results. Transparency lets teams monitor build health, identify and resolve issues quickly, and share responsibility for code quality. Making build status, test results, and other key metrics easily accessible to all stakeholders improves development workflows and helps deliver higher-quality software faster. This matters especially for startups and enterprise IT departments in India looking to scale their development efforts and stay competitive. Cloud architects, developers, and DevOps and infrastructure teams gain insight into the health and efficiency of the CI/CD pipeline, while business decision-makers and CTOs get a clear overview that supports decisions based on real-time data.

How it Works:

Transparency in CI/CD involves implementing tools and practices that make various aspects of the build process readily observable. This includes:

- Real-time build status dashboards: Dashboards offer an at-a-glance view of the current state of builds, highlighting successes, failures, and ongoing processes.

- Comprehensive build logs and artifacts: Detailed logs provide a record of each step in the build process, making it easier to pinpoint the cause of failures. Access to build artifacts allows for thorough examination of the generated outputs.

- Automated notifications for build events: Notifications through email, chat platforms, or dedicated monitoring tools alert teams to critical events like build failures or successful deployments.

- Historical build performance metrics: Tracking metrics like build time, success rate, and test coverage over time provides valuable insights for process optimization.

- Test coverage and quality reports: Visibility into test results, including code coverage and the details of failed tests, helps ensure code quality and identify areas for improvement.

- Integration with communication platforms: Connecting the CI/CD pipeline with platforms like Slack or Microsoft Teams facilitates seamless communication and collaboration around build events.
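As a rough illustration of the historical metrics mentioned above, the following Python sketch computes a success rate, average build time, and current status from a list of past builds. The `BuildResult` record and `summarize` function are hypothetical; real CI tools expose this data through their own APIs and dashboards.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class BuildResult:
    build_id: int
    succeeded: bool
    duration_seconds: float


def summarize(history):
    """Summarize builds ordered oldest to newest into dashboard-style metrics."""
    if not history:
        raise ValueError("no builds to summarize")
    return {
        "total_builds": len(history),
        "success_rate": sum(1 for b in history if b.succeeded) / len(history),
        "mean_duration_seconds": mean(b.duration_seconds for b in history),
        # The most recent build determines what a wallboard would show right now.
        "current_status": "passing" if history[-1].succeeded else "failing",
    }
```

Feeding `summarize` a sliding window (for example, the most recent 50 builds) instead of the full history keeps the numbers responsive to recent changes.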

Examples of Successful Implementation:

- Imagine a team in Bengaluru using a large wall-mounted screen displaying the build status of their various projects, much like Atlassian’s wallboards. This allows everyone, from developers to product managers, to instantly understand the current state of development.

- A startup in Hyderabad could implement a system similar to Amazon’s internal deployment dashboards, providing all engineering teams with real-time visibility into the deployment pipeline, fostering a culture of shared responsibility.

- Similarly, a development team in Mumbai could leverage tools akin to Spotify’s squad health monitoring and build transparency tools to gain a deep understanding of their team’s performance and identify bottlenecks in the CI/CD process.

Actionable Tips:

- Install build monitors in team areas: Highly visible displays showcasing build status create shared awareness and prompt faster responses to issues.

- Integrate build status with chat platforms: Real-time notifications in Slack or Microsoft Teams keep the team informed without requiring them to actively monitor dashboards.

- Create custom dashboards for different stakeholders: Tailor dashboards to display the most relevant information for each audience, from detailed technical metrics for developers to high-level summaries for management.

- Implement notification systems with appropriate urgency levels: Prioritize notifications based on severity to avoid alert fatigue and ensure that critical issues receive immediate attention.

- Capture and visualize trends over time: Tracking historical data provides valuable insights into build performance and helps identify areas for optimization.

- Make build failures highly visible but blameless: Focus on identifying and resolving issues rather than assigning blame, fostering a culture of continuous improvement.

- Ensure security for sensitive build information: Implement appropriate access controls to protect confidential data exposed through the build process.
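The urgency-level tip can be sketched as a small routing table in Python. The rules here are assumptions for illustration only: a failure on a protected branch such as `main` is treated as critical, a failure elsewhere as a warning, and a success as informational. The channel names (`dashboard`, `chat`, `pager`) are placeholders, not real integrations.

```python
from enum import IntEnum


class Urgency(IntEnum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


# Hypothetical channels: higher urgency reaches more intrusive channels.
ROUTES = {
    Urgency.INFO: ["dashboard"],
    Urgency.WARNING: ["dashboard", "chat"],
    Urgency.CRITICAL: ["dashboard", "chat", "pager"],
}


def classify(branch, succeeded, protected_branches=("main",)):
    """Assign an urgency level to a finished build (assumed rules)."""
    if succeeded:
        return Urgency.INFO
    if branch in protected_branches:
        return Urgency.CRITICAL
    return Urgency.WARNING


def route(branch, succeeded):
    """Return the channels that should be notified for this build event."""
    return ROUTES[classify(branch, succeeded)]
```

Keeping successful builds on the dashboard only, and reserving the pager for mainline failures, is one way to limit alert fatigue while still making critical failures hard to miss.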

Pros:

- Creates shared awareness of build health.

- Enables quick identification of build failures.

- Promotes collective ownership of code quality.

- Builds trust through transparency.

- Provides historical context for troubleshooting.

Cons:

- Information overload without proper organization.

- Can expose sensitive information if not properly secured.

- May require cultural shift for teams not used to transparency.

- Dashboard maintenance adds overhead.

Popularized By: Tools like Jenkins, Travis CI, TeamCity, GitHub Actions, and GitLab CI/CD, along with methodologies like Extreme Programming, have significantly popularized the concept of transparent build processes and information radiators.

By embracing transparency in your continuous integration process, you can create a more efficient, collaborative, and ultimately more successful development environment. This best practice is not just a technical implementation but also a cultural shift that empowers teams and drives continuous improvement.

8 Key Continuous Integration Best Practices Comparison

| Practice | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Maintain a Single Source Repository | Medium to High: Requires careful branching policy and migration | Moderate: Needs version control system setup and access controls | Centralized codebase, reduced integration conflicts | Large/mature teams needing consistency | Single source of truth, easier collaboration |
| Automate the Build Process | Medium: Initial scripting and tool setup needed | Moderate: Build servers and tooling | Reproducible, consistent builds and faster feedback | Projects with frequent code changes | Eliminates manual errors, saves developer time |
| Make Builds Self-Testing | High: Requires extensive automated test development | High: Test infrastructure and maintenance | Immediate defect detection and continuous quality validation | Teams practicing CI/CD and Test-Driven Development | Early regression detection, better code quality |
| Everyone Commits to the Mainline Every Day | Medium: Cultural change and tooling required | Moderate: Strong testing and merge tools | Frequent integration, fewer merge conflicts | Agile teams focusing on continuous integration | Smaller, manageable changes, early conflict discovery |
| Keep the Build Fast | High: Engineering effort for optimization | High: Infrastructure for parallel/distributed builds | Rapid feedback enabling faster development cycles | Large or fast-moving projects needing quick iteration | Increased productivity and team velocity |
| Test in a Production-like Environment | High: Complex environment replication | High: Duplicate or containerized infra | Early detection of environment-specific issues | Applications with critical non-functional requirements | Increased deployment confidence, fewer surprises |
| Make it Easy for Anyone to Get Latest Executable | Medium: Requires artifact repo setup and governance | Moderate: Storage and management systems | Streamlined access to builds and simplified deployment | Teams needing easy artifact distribution | Consistent artifact access, reduces environment issues |
| Ensure Transparent Build Process with Visible Results | Medium: Dashboard and notification integration | Moderate: Monitoring tools and communication integration | Improved build visibility and accountability | Teams prioritizing transparency and quality culture | Quick issue identification, shared ownership |

Elevating Your CI/CD Game

Mastering continuous integration best practices is crucial for any organization aiming to streamline its development process. We’ve covered eight key practices in this article: maintaining a single source repository, automating builds, making builds self-testing, committing to the mainline daily, keeping the build fast, testing in a production-like environment, providing easy access to the latest executable, and keeping the build process transparent. Each plays a role in achieving faster release cycles and higher-quality code. Implemented effectively, these practices improve team productivity and reduce time to market, which is especially important for startups and enterprise IT departments in India looking to stay competitive. Remember, these best practices are adaptable, so tailor them to your specific project requirements and team dynamics.

If you’re looking to delve deeper into optimizing your CI/CD pipeline, we recommend checking out this comprehensive guide: 12 Proven Continuous Integration Best Practices That Drive Development Success. This resource from Mergify provides additional insights and practical tips to further enhance your CI/CD workflows.

By embracing and implementing these continuous integration best practices, you empower your teams to develop and deploy software more efficiently and reliably. This translates to not just better code, but a more agile and responsive organization, ready to meet the demands of today’s dynamic market. Ready to take your CI/CD implementation to the next level? Signiance Technologies specializes in helping organizations like yours optimize their cloud and DevOps strategies for maximum impact. Visit Signiance Technologies today to explore how we can help you achieve your development goals.