Generative AI is all about creating something new. It learns the patterns, styles, and structures in massive amounts of existing data, be it text, images, code, or music, and then uses that knowledge to produce original content that can feel impressively human. This isn’t just about analysing data; it’s a major leap forward into machine-assisted creation and smart automation.

What Is Generative AI, Really?

Let’s cut through the noise and get to what generative AI truly is. Don’t think of it as some magical, unknowable box. A better analogy is a brilliant apprentice who has spent years studying a colossal library of human work. The key here isn’t rote memorisation; it’s about spotting the deep, underlying patterns.

This apprentice doesn’t just look at thousands of paintings and learn to copy them. It learns the very essence of what makes a painting feel like a Van Gogh versus a Monet: the brush strokes, the colour palettes, the composition. Armed with that understanding, it can then generate a brand-new image in either artist’s style. This ability to synthesise knowledge and produce something novel is what sets it apart.

The Engine of Creation

At its core, generative AI is a content creation engine. This single function is incredibly flexible and has far-reaching implications across different fields. Think about what this means in practice:

- For Writers and Marketers: It can act as a tireless creative partner, drafting emails, outlining blog posts, or generating a dozen social media captions in seconds.

- For Developers: It’s like having a pair-programmer on call 24/7. It can suggest code snippets, write entire functions, and even help hunt down bugs, speeding up the whole development process.

- For Designers: It can take a simple text prompt and turn it into a unique logo, concept art for a video game, or marketing visuals.

This is precisely why generative AI has become so central to modern business and tech strategies. It directly tackles the relentless demand for fresh, high-quality content and efficient problem-solving. By handling the initial, often repetitive parts of the creative workflow, it frees up human experts to focus on the big picture: strategy, refinement, and truly complex challenges.

Generative AI isn’t here to replace human creativity; it’s here to amplify it. It offers a starting point, a first draft, or an unexpected perspective, letting creators explore ideas faster than they ever could before.

More Than Just an Algorithm

It’s crucial to see this technology as more than just a clever piece of code. It marks a fundamental change in how we work with computers. For decades, software has been a passive tool. You give it a direct command (click a button, run a process) and it executes a predefined task.

Generative AI, on the other hand, is collaborative. You give it a prompt, a goal, or a rough idea, and it actively works with you to build the final result. This dynamic, back-and-forth interaction turns the machine into a creative partner. This shift is what makes generative AI so powerful, paving the way for us to explore its core architectures and its ever-growing list of real-world uses.

How Core Generative AI Models Work

To really get what makes generative AI tick, you have to pop the bonnet and look at the engines driving it all. While the field gets complicated fast, a handful of core models do most of the heavy lifting. Each one has its own way of “thinking” and creating, which makes it a better fit for some jobs than others.

The easiest way to think about these models is with a few simple analogies.

- Generative Adversarial Networks (GANs) are like an artist and a critic locked in a fierce competition.

- Variational Autoencoders (VAEs) act like a skilled summariser, boiling down complex information to its essence and then building it back up.

- Transformers are the language experts, masters at understanding the deep, contextual connections between words.

Let’s unpack these three foundational models to see how they actually work. Getting a feel for this is crucial to understanding how generative AI can churn out everything from photorealistic images to sensible text and even working code.

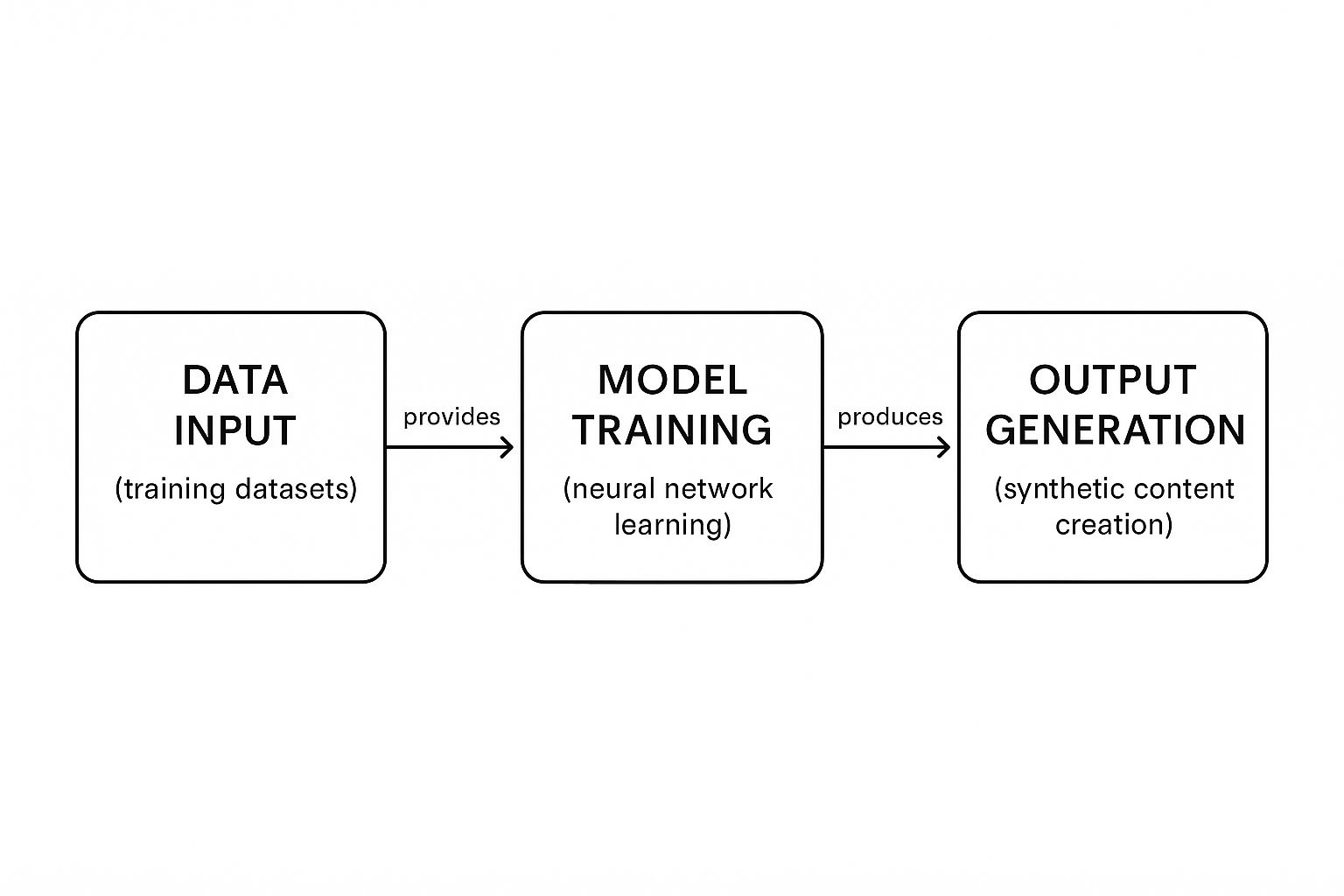

This infographic simplifies the whole process, showing the journey from raw data input and model training all the way to the final generated output.

The key takeaway from the visual is that the quality of what comes out is directly tied to the quality of the data that went in and how well the model was fine-tuned.

Generative Adversarial Networks (GANs): The Competitive Duo

Picture an aspiring forger (the Generator) trying to paint a perfect replica of the Mona Lisa. Standing right next to them is a world-renowned art critic (the Discriminator) who has studied the original for decades and knows every brushstroke.

The forger creates a copy and shows it to the critic. The critic, knowing the real deal inside and out, immediately points out the flaws. “The smile is slightly off,” they might say. The forger takes this feedback, returns to the easel, and tries again.

This cycle of creating and critiquing happens over and over. With each round, the forger gets better at faking it, and the critic gets sharper at spotting the fakes.

This constant competition is what forces both networks to improve relentlessly. Eventually, the Generator gets so good at creating convincing fakes that the Discriminator can no longer reliably tell the difference between the generated image and the real thing.

This back-and-forth struggle is the soul of a GAN. It’s an incredibly effective way to create stunningly realistic outputs, especially when it comes to images.
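For readers who want the maths behind the forger-and-critic story, the standard GAN objective from the original formulation by Goodfellow et al. (2014) is shown below. Here $D(x)$ is the critic’s estimated probability that $x$ is real, $G(z)$ is the forger’s output for a random noise vector $z$, $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the noise distribution:

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The critic pushes $V$ up by scoring real samples close to 1 and fakes close to 0; the forger pushes it down by making $D(G(z))$ approach 1. In practice, many implementations instead train $G$ to maximise $\log D(G(z))$, because that version gives the generator stronger gradients early in training.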

Key Strengths of GANs:

- High-Fidelity Outputs: They are brilliant at producing sharp, detailed, and photorealistic images that are often indistinguishable from actual photographs.

- Creative Content Generation: Beyond photorealism, GANs are used for creative work such as dreaming up new fashion designs or designing video game characters, and, more controversially, for creating deepfakes.

Variational Autoencoders (VAEs): The Efficient Summariser

Now, imagine a VAE as a highly skilled researcher told to summarise a dense, 1,000-page scientific textbook into a single, one-page abstract. To pull this off, the researcher must first read and deeply understand the entire book, compressing all of its core concepts into a compact, essential form. This compression phase is handled by the encoder.

Next, a second researcher is given just that one-page summary and tasked with rewriting the original 1,000-page book from it. The new book won’t be a word-for-word copy, of course, but it will capture the same themes, writing style, and crucial information. This reconstruction phase is done by the decoder.

VAEs work much the same way. They learn a compressed, simplified representation of data known as the latent space. This isn’t just simple file compression; it’s more like an organised map of the data’s features, where similar inputs tend to sit close together. By picking a point on this map, the decoder can generate brand-new data that resembles the training set without being a copy of any single example.
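To make the “map” idea a little more concrete, here is a minimal sketch in plain Python (no ML framework) of two standard VAE ingredients that the analogy glosses over. The encoder outputs a mean and a log-variance for each latent dimension rather than a single point; a latent vector is then drawn with the so-called reparameterisation trick; and a KL-divergence penalty keeps the latent space close to a standard normal distribution, which is what lets randomly picked points decode into plausible data. The function names and numbers are illustrative assumptions; in a real VAE, `mu` and `log_var` come from a trained encoder network and the decoder is another network, omitted here.

```python
import math
import random


def sample_latent(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Reparameterisation trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL(N(mu, sigma^2) || N(0, 1)), summed over the latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one input, using a 2-dimensional latent space.
mu, log_var = [0.8, -0.3], [-1.0, -0.5]
rng = random.Random(0)

z = sample_latent(mu, log_var, rng)  # a point on the "map" near this input
print("z =", z)
print("KL penalty =", kl_to_standard_normal(mu, log_var))

# Generating something new: sample z from the N(0, 1) prior and hand it to the
# decoder network (not shown in this sketch).
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
print("z_new =", z_new)
```

During training, the KL penalty is added to a reconstruction loss; the balance between the two is what keeps the map both accurate and smooth.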

Transformers: The Context Experts

Transformers are the architecture that powers most of the large language models (LLMs) we hear about daily, like OpenAI’s ChatGPT. Their big breakthrough was a mechanism called self-attention.

Think about how you read this sentence: “The crane lifted the heavy cargo onto the ship.” Your brain instantly knows “crane” is a piece of machinery, not the bird. You figure this out from the surrounding words (“lifted,” “heavy cargo,” and “ship”), which provide vital context.

Transformers do something very similar. The attention mechanism lets the model weigh the importance of every other word in a sentence when it’s looking at a single word, no matter how far apart they are. It understands that in the sentence “The robot picked up the ball because it was round,” the word “it” refers to the ball, not the robot.
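The weighting described above is usually implemented as scaled dot-product attention. The sketch below is a simplified, single-head version in plain Python: each token’s query vector is compared with every token’s key vector, the scores are divided by √d and passed through a softmax so they sum to 1, and each token’s output is the correspondingly weighted mix of value vectors. The tiny hand-written vectors are made-up assumptions for illustration; in a real Transformer, queries, keys, and values come from learned projections of token embeddings, and many attention heads run in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this token's query and every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one component at a time.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up tokens with 2-dimensional vectors. In self-attention,
# queries, keys, and values all come from the same tokens.
tokens = ["robot", "ball", "it"]
vecs = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]
outputs, weights = attention(vecs, vecs, vecs)
for tok, w in zip(tokens, weights):
    print(tok, [round(x, 2) for x in w])
```

In this toy example the vector for “it” was chosen by hand to be most similar to “ball”, so the row of weights for “it” peaks at “ball”, with its own position a close second. A trained model learns such similarities from data instead of having them hand-set.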

This uncanny ability to grasp long-range dependencies and subtle contextual clues is what makes Transformers so dominant for any task involving sequential data.

- Language Understanding: They’re masters of translation, summarisation, and question-answering because they capture so many of the nuances of language.

- Code Generation: They can understand the syntax and logic of programming languages, making them great assistants for writing and debugging code.

By mastering context, Transformers can generate text that isn’t just grammatically correct but is also coherent and logically consistent over long stretches. This has made them the absolute cornerstone of modern generative AI.

Comparing Core Generative AI Architectures

With these three models in mind, it’s helpful to see them side-by-side. Each has a distinct approach and shines in different areas. This table breaks down their core concepts and best-fit applications to help you quickly tell them apart.

| Model Architecture | Core Concept Analogy | Primary Strength | Common Use Cases |
|---|---|---|---|
| Generative Adversarial Network (GAN) | An artist and a critic in competition. | Creating highly realistic, high-fidelity images. | Image generation, style transfer, deepfakes, data augmentation. |
| Variational Autoencoder (VAE) | A summariser and a re-constructor. | Learning compressed data representations. | Image generation (often softer/blurrier than GANs), anomaly detection, data compression. |
| Transformer | A language expert who understands context. | Grasping long-range relationships in data. | Natural language processing (NLP), translation, text summarisation, code generation. |

Ultimately, choosing the right architecture depends entirely on the problem you’re trying to solve. Need a photorealistic face for a virtual avatar? A GAN is likely your best bet. Need to build a chatbot that can hold a sensible conversation? A Transformer-based model is the way to go. Understanding these differences is the first step toward applying generative AI effectively.

Generative AI’s Growing Role in India

Generative AI might be making waves globally, but its impact in India is something else entirely. The country isn’t just adopting this technology; it’s rapidly becoming a critical hub for AI innovation, talent, and development. This isn’t some far-off prediction. It’s happening right now, fundamentally changing how industries operate and unlocking enormous commercial value.

The financial figures tell a story of explosive growth. India’s generative AI market is projected to reach about USD 1.18 billion in 2025. From there, it’s expected to grow at a compound annual growth rate (CAGR) of around 37.01% between 2025 and 2031, putting the market on track to hit nearly USD 7.81 billion by 2031. Such a steep climb highlights the incredible demand for AI-driven solutions across India’s most important economic sectors. For a deeper dive into these numbers, you can explore this detailed Statista analysis on India’s AI market.
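The projection is ordinary compound growth: future value = starting value × (1 + CAGR)^years. A quick check in Python, using only the figures quoted above and six compounding years from 2025 to 2031, shows that they are consistent with each other:

```python
# Figures quoted above, in USD billions; six compounding years from 2025 to 2031.
start_value = 1.18
cagr = 0.3701
years = 2031 - 2025

# Forward check: grow the 2025 value at the quoted CAGR.
projected = start_value * (1 + cagr) ** years
print(f"Projected 2031 market: USD {projected:.2f} billion")

# Reverse check: the growth rate implied by going from 1.18 to 7.81 in six years.
implied_cagr = (7.81 / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {implied_cagr:.2%}")
```

Both checks agree with the quoted figures to within rounding, which is a useful sanity test whenever a market forecast quotes a start value, an end value, and a growth rate together.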

Key Sectors Driving Adoption

This incredible momentum isn’t just happening on its own. It’s being actively driven by specific industries that see a clear competitive edge in automation, smarter processes, and deeper personalisation. A few sectors, in particular, are leading this charge.

Here are three areas where generative AI is already making a significant mark:

- IT and Business Process Outsourcing (BPO): Long the backbone of India’s tech story, the IT sector is now using generative AI to automate routine coding, speed up software testing, and build more sophisticated solutions for clients. In the BPO space, it powers intelligent chatbots and streamlined customer support.

- Healthcare and Pharmaceuticals: The stakes here are especially high. AI is helping to accelerate drug discovery, make sense of complex clinical trial data, and support personalised treatment plans for patients. By speeding up the research pipeline, it has the potential to improve health outcomes.

- Banking, Financial Services, and Insurance (BFSI): This sector is using AI to get smarter and faster. Think advanced fraud detection that works in real-time, personalised financial advice for millions, automated risk assessments, and much smoother customer onboarding.

These industries are effectively becoming the testing grounds where the real-world value of generative AI is proven daily.

The Forces Fuelling the Boom

So, what’s behind this rapid uptake? It’s a powerful combination of factors that have created the perfect environment for innovation. We’re seeing grassroots energy, strategic government backing, and major corporate investment all coming together.

India’s unique position is defined by its massive pool of skilled tech talent, a dynamic startup culture eager to experiment, and strategic government initiatives like ‘Make in India’ and ‘Digital India’. This combination is turning the nation into a global powerhouse for AI development.

This thriving ecosystem gets another boost from major investments by both Indian corporations and global tech leaders who see the immense potential. By setting up AI research centres and partnering with local startups, they are putting fuel on the fire, speeding up the creation and rollout of new AI tools.

The takeaway is simple: India isn’t just a passive user of generative AI; it’s an active participant in shaping its future. For any business, this represents a golden opportunity to connect with a market that is not only ready for smart solutions but is actively demanding them.

Practical Business and DevOps Use Cases

Let’s move from the theory to the real world. Generative AI isn’t just an interesting experiment anymore; it’s a practical tool that businesses and technical teams are using right now to get faster, smarter, and more efficient.

The real magic happens when this technology automates creative and analytical work that, until recently, only humans could do. For businesses, this opens up new doors for personalised customer engagement and rapid content creation. For technical teams like DevOps, it’s about getting rid of repetitive tasks and speeding up how they solve complex problems. Let’s look at how this plays out in both worlds.

Driving Business Growth and Efficiency

In departments like marketing, sales, and strategy, generative AI is becoming a powerful co-worker. It’s helping teams keep up with the constant demand for fresh content and make sense of complicated data, leading to results you can actually measure.

These tools are tackling common business headaches head-on, from breaking through creative blocks in the marketing team to making expensive market research cycles faster and cheaper.

Here are a few concrete examples:

- Automated Content Creation: Imagine getting a first draft of a blog post, a social media update, or an email campaign done in minutes. This frees up human writers to do what they do best: refine the message, focus on strategy, and inject the company’s unique voice.

- Hyper-Personalised Customer Service: AI can fuel chatbots that give customers instant, relevant answers. It can also draft personalised follow-up emails and support scripts, creating a much better customer experience that can scale effortlessly.

- Intelligent Market Research: Instead of a person manually reading through thousands of customer reviews, generative AI can analyse all that unstructured data at once. It pulls out the key themes, gauges customer sentiment, and spots emerging trends, delivering a neat summary.

By taking care of the initial, time-sucking parts of these tasks, generative AI lets teams move from routine production to high-value strategic work. The idea isn’t to replace people, but to make them better at their jobs.

To explore how these ideas could work for your own company, you can find out more about custom generative AI services designed to fit your specific business needs.

Accelerating the DevOps Lifecycle

For anyone in DevOps or software development, speed and accuracy are the name of the game. Generative AI is quickly becoming an essential partner in the software development lifecycle (SDLC), helping engineers write better code faster and keep systems stable.

This isn’t some separate tool; it plugs right into a developer’s existing workflow, almost like an intelligent coding assistant. It cuts down on manual effort and mental strain, which means teams can ship new features more quickly and resolve problems faster.

Here’s how DevOps teams are using it:

- Code Generation and Completion: When integrated into a code editor, AI can suggest code snippets, write entire functions based on a simple comment, or even translate code between programming languages. This gives development a serious speed boost.

- Automated Test Case Generation: Writing good tests is crucial but often a grind. AI can look at a function and automatically generate candidate tests, including tricky edge cases a human might miss. Once reviewed by an engineer, these tests can meaningfully improve code quality.

- Smart Incident Report Summaries: When a system fails, engineers get buried in logs and alerts. Generative AI can sift through this mountain of data and produce a clear, human-readable summary that points toward the likely cause, helping teams resolve incidents much faster.
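Much of the value in incident summaries comes from shrinking thousands of log lines into something a model (or a person) can actually read. The sketch below shows one plausible pre-processing step under clearly hypothetical assumptions: log lines follow a made-up `timestamp LEVEL service: message` format, digits are masked so repeated errors group together, and the resulting digest is wrapped in a prompt string. The call to a language model is deliberately left out, since it depends entirely on the provider or SDK you use.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> <LEVEL> <service>: <message>"
LOG_PATTERN = re.compile(r"^(\S+) (ERROR|WARN|INFO) (\S+): (.*)$")


def condense_logs(lines: list[str], top_n: int = 5) -> list[tuple[str, int]]:
    """Group ERROR/WARN lines by service and message shape; return the most frequent."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that don't fit the assumed format
        _, level, service, message = match.groups()
        if level == "INFO":
            continue
        # Mask numbers (IDs, ports, durations) so near-identical errors collapse together.
        shape = re.sub(r"\d+", "<N>", message)
        counts[f"{level} {service}: {shape}"] += 1
    return counts.most_common(top_n)


def build_incident_prompt(lines: list[str]) -> str:
    """Build a prompt asking an LLM for a summary; sending it is out of scope here."""
    digest = "\n".join(f"{n}x {sig}" for sig, n in condense_logs(lines))
    return (
        "Summarise this incident for an on-call engineer and suggest the most likely cause.\n"
        "Recurring log signatures (count, level, service, message):\n" + digest
    )


logs = [
    "2025-01-01T10:00:01 ERROR payments: timeout after 3000 ms calling db-7",
    "2025-01-01T10:00:02 ERROR payments: timeout after 3012 ms calling db-7",
    "2025-01-01T10:00:02 INFO web: request served",
    "2025-01-01T10:00:03 WARN web: retrying upstream call 2",
]
print(build_incident_prompt(logs))
```

Condensing before prompting keeps the input small and puts the most frequent failure pattern first, which makes it easier for both the model and the reviewing engineer to spot the likely cause.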

By automating these fundamental yet repetitive jobs, generative AI frees up developers to focus on what really matters: architecture, innovation, and solving unique engineering puzzles. This doesn’t just make them more productive; it makes their jobs more interesting.

Navigating the Hurdles of Enterprise Adoption

While the buzz around generative AI is undeniable, bringing it into a large organisation is rarely a simple plug-and-play exercise. Many businesses, especially here in India, are discovering that the road from a proof-of-concept to a full-blown, integrated system is paved with some serious obstacles. Getting real about these challenges is the first step to creating a strategy that actually works.

Despite all the hype, a 2025 survey from EY revealed a telling statistic: only 36% of Indian enterprises have actually set aside a dedicated budget for generative AI. This tells us that most companies are still dipping their toes in the water, trying to figure out where this powerful tech really fits into their operations. This gap between global excitement and the more cautious approach in India highlights some unique local hurdles we need to tackle head-on. You can dig into the specifics in the full EY report on generative AI adoption in India.

The Main Roadblocks to Watch Out For

Time and again, we see a few key issues that consistently pump the brakes on generative AI projects. These aren’t just tech problems; they’re deeply rooted in people, company policies, and existing processes. Spotting them early can save a world of headaches down the line.

Here are the most common blockers we see Indian companies run into:

- Complex Data Privacy Rules: Working with data, particularly sensitive customer information, is a minefield. India’s data protection regime, anchored by the Digital Personal Data Protection Act, 2023, is stringent, and ensuring your AI solution is compliant from day one is a huge legal and technical undertaking.

- The AI Talent Gap: Let’s be honest: finding people who truly understand this stuff is tough. The demand for skilled data scientists and ML engineers who can build, manage, and refine these complex models is soaring, but the supply hasn’t caught up. This makes hiring both competitive and expensive.

- Infrastructure That Isn’t Ready: Generative AI is hungry for computing power. Running it effectively requires robust, scalable infrastructure, usually GPU capacity in the cloud. Many organisations realise too late that their current IT infrastructure just can’t handle the strain, creating a massive technical gap.

A great generative AI strategy isn’t just about a clever algorithm. It stands on three pillars: a solid foundation of clean data, the right people with the right skills, and a scalable infrastructure that won’t buckle under pressure.

Forging a Realistic Path Forward

Getting past these obstacles isn’t about finding a magic bullet; it’s about smart, strategic planning from the top down. It all begins with securing a proper budget and building a compelling business case that everyone can get behind. From there, you have to plan for those essential infrastructure upgrades. In many ways, this process mirrors other big technology shifts, a topic we explore in our guide to common cloud migration challenges.

Ultimately, adopting generative AI successfully is so much more than just buying a new piece of software. It demands a frank look at where your organisation stands today and a clear, actionable plan to fill the gaps in talent, data governance, and technology. By anticipating these challenges, you can pave a much smoother road to unlocking the real, tangible value of generative AI.

A Practical Framework for Integrating Generative AI

To get real value from generative AI, you need more than just exciting tech; you need a smart, deliberate game plan. Successful integration isn’t about a single “aha!” moment. It’s about a methodical, business-first approach that balances big ambitions with practical, responsible steps.

Too many projects fizzle out because they start with the solution (the shiny new AI) instead of the problem. The best way forward is to first identify a specific, high-value business challenge. Are you trying to slash customer support response times? Or maybe you need to speed up content creation for the marketing team? Pinpointing a clear goal from the get-go ensures your AI initiative is built to deliver real, measurable results.

Start with Data and People

Once you know what you’re trying to fix, your attention has to turn to two fundamental pillars: your data and your team. A generative AI model is only as good as the information it learns from. If you train it on messy, irrelevant, or biased data, you should expect flawed outputs. It’s that simple.

That’s why building a high-quality, relevant dataset is non-negotiable. This isn’t a quick job; it requires careful curation and cleaning to make sure the information truly reflects the context of your business problem. Just as important is having a “human in the loop” from day one. This isn’t just about catching mistakes. It’s about providing critical oversight, refining the AI’s outputs, and making sure its behaviour aligns with your company’s ethical standards and brand voice.
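The curation step can start very simply. Below is a minimal sketch, assuming a dataset of plain-text examples held in memory: it normalises whitespace, drops empty or very short records, and removes exact duplicates (ignoring case). The function name and the `min_chars` threshold are illustrative assumptions; real pipelines usually add near-duplicate detection, personal-data scrubbing, and bias checks on top of basics like these.

```python
def clean_dataset(records: list[str], min_chars: int = 20) -> list[str]:
    """Normalise whitespace, drop near-empty records, and remove exact duplicates."""
    seen: set[str] = set()
    cleaned = []
    for text in records:
        normalised = " ".join(text.split())  # collapse runs of spaces, tabs, and newlines
        if len(normalised) < min_chars:
            continue  # treated as noise in this sketch
        key = normalised.lower()
        if key in seen:
            continue  # duplicate (ignoring case) of a record we already kept
        seen.add(key)
        cleaned.append(normalised)
    return cleaned


raw = [
    "Refund requests must be approved within 5 working days.",
    "refund requests must be   approved within 5 working days.",
    "   ",
    "N/A",
    "Premium customers get a dedicated account manager.",
]
print(clean_dataset(raw))
```

Keeping the first occurrence of each record preserves the original ordering, which makes it easier for a human reviewer to trace cleaned records back to their source.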

A successful generative AI integration is really a partnership between human expertise and machine capability. The human provides the context, judgement, and ethical guardrails, while the AI provides the scale and speed. This collaboration is the key to building trust and ensuring quality.

Build for Scale and Transparency

Thinking too small is a classic pitfall. A pilot project might run perfectly on a limited dataset, but you have to plan for scalability right from the start. This means building your solution on a flexible cloud infrastructure that can handle the growing computational demands and data volumes when you eventually move from a small test to full-blown production.

Transparency is another cornerstone of a responsible AI framework. Your team needs to understand, at least at a high level, how the model is reaching its conclusions. This “explainability” is vital for debugging, refining performance, and building trust with everyone involved, from stakeholders to end-users. Without it, the AI is just a “black box,” which makes it incredibly difficult to manage and improve over time.

A solid integration plan always includes these essential steps:

- Define a Clear Business Case: Find a specific, measurable problem that generative AI is genuinely suited to solve.

- Curate High-Quality Data: Assemble and clean a dataset that is directly relevant to that business problem.

- Keep Humans in Command: Put processes in place for human review and oversight to guide the model and validate its work.

- Plan for Future Growth: Build your system on a scalable architecture that can support wider adoption down the road.

- Foster a Learning Culture: Encourage experimentation and create feedback loops so your teams can learn, adapt, and continuously improve the model.

By following this practical framework, your organisation can move beyond just playing with the technology and start integrating generative AI as a reliable, value-driving part of your everyday operations.

Your Generative AI Questions, Answered

As generative AI makes its way into more and more of our daily tools, it’s completely normal to have questions. This is powerful stuff, and with that power comes a fair bit of curiosity and even some scepticism. Let’s break down a few of the most common questions to get a clearer picture of what this technology is all about.

Is it Genuinely Creative, or Just a Fancy Copier?

This is probably the biggest question on everyone’s mind. The short answer? It’s much more sophisticated than just copying. These models don’t just find and repeat something they’ve seen before. Instead, they learn the underlying patterns, styles, and rules from the vast amounts of data they’re trained on.

Think of it like a chef who has spent years studying French cuisine. They don’t just replicate classic recipes; they understand the fundamental techniques, flavour profiles, and principles. Armed with that knowledge, they can create an entirely new dish that is distinctly French, yet completely original. Generative AI operates on a similar principle, using its “knowledge” to generate work that is genuinely new.

How Do You Make Sure the AI’s Output is Safe and Accurate?

This is a critical piece of the puzzle. You can’t just let an AI run wild, especially in a business setting. The gold standard for ensuring quality and safety is what we call a “human-in-the-loop” approach. It simply means that a person reviews and signs off on the AI’s output before it goes live.

A human reviewer brings context, nuanced judgement, and a sense of ethics to the table, things that AI models just don’t have yet. This step is non-negotiable for fact-checking, spotting subtle bias, and ensuring the final product reflects your brand’s standards and values.

Beyond that, a couple of other practices are essential:

- Start with Good Data: The quality of the output is directly tied to the quality of the input. Using high-quality, diverse, and carefully curated data for training is the first line of defence against bias and errors.

- Build in Safety Filters: We can also implement technical guardrails that act like safety nets, automatically catching and blocking harmful or inappropriate content before it ever reaches users.
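Here is a minimal sketch of how a safety filter and human-in-the-loop review can fit together, with every name hypothetical: a generated draft first passes a simple keyword-based filter, and anything that survives is queued for a human reviewer instead of being published automatically. The blocklist is only a stand-in; production systems typically rely on trained moderation classifiers or a model provider’s moderation service.

```python
from dataclasses import dataclass, field

# Hypothetical blocklist; real systems use trained moderation models instead.
BLOCKED_TERMS = {"guaranteed returns", "miracle cure"}


@dataclass
class ReviewQueue:
    pending: list[str] = field(default_factory=list)
    published: list[str] = field(default_factory=list)
    rejected: list[str] = field(default_factory=list)

    def submit(self, draft: str) -> str:
        """Run the automatic safety filter first; surviving drafts wait for a human."""
        lowered = draft.lower()
        if any(term in lowered for term in BLOCKED_TERMS):
            self.rejected.append(draft)
            return "blocked by filter"
        self.pending.append(draft)
        return "awaiting human review"

    def review(self, draft: str, approved: bool) -> None:
        """A human reviewer makes the final call on each pending draft."""
        self.pending.remove(draft)
        (self.published if approved else self.rejected).append(draft)


queue = ReviewQueue()
print(queue.submit("Our new plan offers guaranteed returns!"))
print(queue.submit("Our new plan lowers fees for small accounts."))
queue.review("Our new plan lowers fees for small accounts.", approved=True)
print(queue.published)
```

The design choice worth noting is that nothing moves to `published` without an explicit human decision; the automatic filter can only reject, never approve.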

Is Generative AI Going to Take Everyone’s Jobs?

It’s easy to see why this is a concern. While generative AI will certainly automate a lot of tasks, it’s far more likely to change jobs rather than eliminate them entirely. Think of it as a collaborator (a very powerful one) that enhances what people can do, rather than replacing them.

The real shift will be in how we work. By letting the AI handle the repetitive, time-consuming tasks, professionals are freed up to focus on the things humans do best: strategic thinking, creative problem-solving, and building client relationships. This means job roles will evolve, and new skills centred on working alongside AI will become incredibly valuable.

Interestingly, while businesses across India are embracing AI, public familiarity is still catching up. A 2025 Google-Kantar study highlighted this gap, revealing that about 60% of Indians surveyed were unfamiliar with AI, and only 31% had actually used a generative AI tool. You can learn more about these findings on the AI awareness gap in India.

Ready to see how these advanced technologies can directly benefit your business? At Signiance Technologies, we build powerful cloud solutions that drive growth and efficiency. Explore our services at https://signiance.com.