Unlocking Business Potential with Hybrid Cloud

Want to optimize your IT infrastructure for flexibility, scalability, and cost efficiency? This listicle details eight key hybrid cloud architectures, including cloud bursting, multi-cloud federation, and data gravity patterns, so you can make informed decisions about your cloud strategy. Understand how these architectures help businesses in India improve disaster recovery, optimize resource utilization, and gain a competitive edge. Let’s explore these patterns.

1. Cloud Bursting Pattern

The Cloud Bursting Pattern is a powerful hybrid cloud architecture that allows organizations to maintain the majority of their computing infrastructure on-premises or in a private cloud while leveraging the scalability of a public cloud during periods of peak demand. This approach offers a compelling blend of cost-effectiveness, security, and flexibility, making it an attractive option for businesses of all sizes, particularly those experiencing fluctuating workloads. Essentially, the system operates with a baseline capacity locally and “bursts” into the public cloud when additional resources are required, providing elastic scalability without the need for constant over-provisioning. This ensures that companies only pay for the extra computing power they need, when they need it.

This hybrid cloud architecture hinges on the seamless integration and orchestration between the private and public cloud environments. Automatic workload scaling is a key feature, dynamically allocating resources based on pre-defined demand thresholds. When the on-premises infrastructure approaches its capacity limit, the system automatically shifts a portion of the workload to the public cloud, ensuring uninterrupted service delivery even during peak periods. This dynamic scaling is complemented by robust failover and failback mechanisms, which guarantee business continuity in case of unforeseen outages in either environment. The system can seamlessly transfer workloads back to the private cloud once the demand subsides, optimizing resource utilization and minimizing public cloud expenditure.
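The threshold-based scaling described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any provider's API: `BurstPolicy`, `Placement`, and `plan_capacity` are hypothetical names, and the 80% threshold and public capacity cap are placeholder values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BurstPolicy:
    """Hypothetical policy values; tune them for your own workload."""
    burst_threshold: float = 0.8     # fraction of private capacity used before bursting
    max_public_units: float = 500.0  # cost guardrail: cap on public cloud capacity


@dataclass(frozen=True)
class Placement:
    private: float   # demand served on-premises or in the private cloud
    public: float    # demand burst to the public cloud
    unserved: float  # demand beyond the cost guardrail


def plan_capacity(demand: float, private_capacity: float,
                  policy: BurstPolicy) -> Placement:
    """Split demand between private and public capacity.

    Demand stays private until it reaches the burst threshold; only the
    overflow goes to the public cloud, and never more than the policy cap.
    When demand subsides, the public share returns to zero (failback).
    """
    private_limit = private_capacity * policy.burst_threshold
    private = min(demand, private_limit)
    overflow = demand - private
    public = min(overflow, policy.max_public_units)
    return Placement(private, public, overflow - public)
```

With a private capacity of 1,000 units and the default policy, a demand of 500 stays entirely private, while a demand of 1,000 keeps 800 units private and bursts 200 to the public cloud.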

The Cloud Bursting Pattern offers several distinct advantages. Cost-effective scaling eliminates the need for investing in expensive hardware that may sit idle during off-peak periods. Maintaining core systems on-premises or in a private cloud provides enhanced security control over sensitive data and workloads, addressing data sovereignty and compliance requirements. Furthermore, the public cloud component acts as a built-in disaster recovery solution, offering redundancy and resilience in case of local infrastructure failures.

However, implementing a Cloud Bursting Pattern also presents certain challenges. Configuring the network for seamless communication between the private and public cloud can be complex, potentially introducing latency issues that need careful consideration. Data transfer costs associated with moving workloads to and from the public cloud can also accumulate, necessitating careful planning and cost management. Furthermore, successful implementation requires sophisticated monitoring and orchestration tools to manage the dynamic scaling and workload distribution effectively. Finally, ensuring application compatibility across both environments can be a hurdle, requiring modifications or cloud-native development approaches.

Several real-world scenarios illustrate where the Cloud Bursting Pattern fits. Netflix is frequently cited as an example of elastic scaling during peak viewing hours, although its infrastructure is largely cloud-based rather than a classic on-premises burst setup. Financial institutions leverage cloud bursting to handle the increased load on their trading platforms during periods of market volatility. E-commerce platforms in India, which see heavy traffic during festive sales such as Diwali and shopping events such as Flipkart’s Big Billion Days, benefit significantly from cloud bursting when handling massive spikes in online transactions. Even healthcare systems can leverage this pattern to manage data processing during seasonal events like flu outbreaks.

For organizations considering a Cloud Bursting Pattern, several tips contribute to a successful deployment. Robust monitoring is crucial for predicting burst requirements accurately and preventing performance bottlenecks. Designing applications with cloud-native principles, such as containerization and microservices, enhances portability and interoperability across environments. Clear cost thresholds and scaling policies help control public cloud expenditure and optimize resource utilization. Regularly testing burst scenarios is essential to ensure the reliability and resilience of the hybrid cloud architecture.

Supported by cloud providers and tooling vendors such as AWS, Azure, VMware vCloud, and Flexera (which acquired RightScale), the Cloud Bursting Pattern has become a valuable tool for organizations seeking a cost-effective and scalable hybrid cloud solution. It earns its place in any discussion of hybrid cloud architectures because it addresses fluctuating workloads while maintaining security and control. It is particularly relevant for organizations in India experiencing rapid digital transformation and increasing demands on their IT infrastructure.

2. Multi-Cloud Federation Pattern

The Multi-Cloud Federation Pattern represents a sophisticated approach to hybrid cloud architectures, enabling organizations to distribute workloads across multiple public cloud providers while maintaining unified management and orchestration. This pattern is a significant step up from simply using multiple clouds independently, as it focuses on creating a cohesive, interconnected environment across different providers. It’s a powerful way to leverage the best-of-breed services from various vendors while mitigating the risks of vendor lock-in. This interconnectedness is achieved through a central management plane that offers a single point of control for resources, policies, and operations across the entire federated cloud infrastructure.

In essence, a multi-cloud federation extends the hybrid model: instead of pairing a single on-premises environment with a single public cloud, it treats multiple public clouds as one distributed pool of infrastructure. This architecture offers numerous benefits, including increased flexibility, resilience, and cost optimization. It allows businesses to choose the most suitable cloud platform for specific workloads based on factors like performance, cost, geographic availability, and regulatory compliance. For example, an organization might leverage AWS for its compute power, Azure for its enterprise-grade services, and Google Cloud for its data analytics capabilities, all within a single, unified management framework. This approach is particularly relevant in India, given the growing presence of multiple cloud providers and the increasing demand for flexible and scalable cloud solutions.
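Choosing a platform per workload can be expressed as a simple filter-then-rank step. The sketch below is illustrative only: the `Provider` and `Workload` types, their fields, and the provider names in the usage note are hypothetical, and a real placement engine would weigh many more factors (performance, egress cost, existing commitments).

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Provider:
    name: str
    regions: frozenset         # regions where the provider can run the workload
    hourly_cost: float         # illustrative unit cost
    certifications: frozenset  # compliance attestations the provider holds


@dataclass(frozen=True)
class Workload:
    name: str
    region: str
    required_certifications: frozenset


def choose_provider(workload: Workload,
                    providers: Iterable[Provider]) -> Optional[Provider]:
    """Return the cheapest provider that satisfies the hard constraints.

    Region and compliance are treated as hard filters; cost is the
    tie-breaker among the providers that remain.
    """
    eligible = [
        p for p in providers
        if workload.region in p.regions
        and workload.required_certifications <= p.certifications
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda p: p.hourly_cost)
```

For instance, given a hypothetical `provider-a` that is cheap but lacks a required certification and a `provider-b` that is pricier but compliant, `choose_provider` returns `provider-b`; if no provider serves the requested region, it returns `None`.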

The Multi-Cloud Federation Pattern offers key features such as a unified management plane, enabling consistent control across all providers. Cross-cloud workload orchestration and scheduling tools allow for automated deployment and management of applications across the federated infrastructure. Federated identity and access management ensures secure access to resources across different clouds, while centralized monitoring and compliance reporting provides a consolidated view of the entire environment. Provider-agnostic application deployment, often facilitated by containerization technologies, ensures application portability and simplifies the process of moving workloads between different cloud environments.

Several companies have successfully implemented multi-cloud federation strategies. Spotify, for instance, utilizes AWS, Google Cloud, and Azure for various services, leveraging the strengths of each provider. Capital One employs a multi-cloud strategy across AWS and Microsoft Azure, while Dropbox combines AWS with custom infrastructure in a hybrid approach. BMW Group also utilizes a multi-cloud implementation to support its global operations. These examples demonstrate the viability and effectiveness of this approach for diverse organizational needs.

This pattern is especially beneficial for organizations seeking to avoid vendor lock-in, maximize the benefits of various cloud services, enhance disaster recovery and business continuity, and improve their negotiating power with cloud vendors. The geographic distribution capabilities are also attractive, especially for businesses operating across multiple regions within India.

However, it’s crucial to acknowledge the inherent complexities. Managing a federated multi-cloud environment requires specialized expertise and robust tooling. Networking and data transfer costs can be higher compared to single-cloud deployments, and security and compliance requirements become more intricate due to the integration of multiple platforms. Increased complexity in management and operations is another significant consideration.

For organizations considering this approach, several tips can ease the transition. Starting with containerized applications enhances portability, while infrastructure as code ensures consistent deployments across different cloud providers. Leveraging cloud-agnostic monitoring and management tools simplifies oversight and control. Establishing clear data governance and migration strategies is crucial for maintaining data integrity and minimizing disruption. Platforms like Google Anthos, VMware Tanzu, Red Hat OpenShift, and HashiCorp Terraform are popular choices for building and managing multi-cloud federated environments.

While the Multi-Cloud Federation Pattern presents some challenges, its benefits in terms of flexibility, resilience, and cost optimization make it a compelling option for organizations looking to maximize the potential of hybrid cloud architectures. It’s particularly relevant for businesses operating in India’s rapidly evolving cloud landscape, providing the adaptability needed to thrive in a competitive market.

3. Data Gravity Pattern

The Data Gravity Pattern is a crucial consideration when designing hybrid cloud architectures, especially for organizations dealing with large datasets. This pattern recognizes the inherent “gravity” of data, dictating that compute resources should be placed as close as possible to where the data resides. This minimizes costly and time-consuming data movement across networks, leading to significant performance gains and reduced latency. This approach is particularly relevant for startups and early-stage companies looking to scale efficiently, enterprise IT departments managing complex data landscapes, cloud architects and developers building performant applications, DevOps and infrastructure teams optimizing deployments, and business decision-makers and CTOs seeking cost-effective solutions. Its focus on data locality while maintaining hybrid connectivity makes it a powerful tool for optimizing various workloads.

In essence, the Data Gravity Pattern flips the traditional model of moving data to compute. Instead, it brings compute to the data. This is achieved by strategically deploying processing resources, such as analytics engines or application servers, within the same environment (cloud or on-premises) where the data is stored. This co-location significantly reduces the distance data needs to travel, minimizing latency and bandwidth consumption. While prioritizing data locality, the hybrid cloud aspect of this pattern ensures that other functionalities, like centralized management, security services, and disaster recovery, can still leverage the broader capabilities of the cloud.
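The "bring compute to the data" decision can be approximated by asking how much data each candidate location would have to pull in. The following Python sketch assumes dataset sizes and locations are already known (for example, from a data catalog); the function names and site labels are hypothetical.

```python
from typing import Dict, List


def gb_to_move(site: str, dataset_sizes_gb: Dict[str, float],
               dataset_sites: Dict[str, str]) -> float:
    """Total gigabytes that must be transferred if compute runs at `site`."""
    return sum(
        size for name, size in dataset_sizes_gb.items()
        if dataset_sites[name] != site
    )


def choose_compute_site(dataset_sizes_gb: Dict[str, float],
                        dataset_sites: Dict[str, str],
                        candidate_sites: List[str]) -> str:
    """Pick the candidate site that minimizes data movement."""
    return min(
        candidate_sites,
        key=lambda site: gb_to_move(site, dataset_sizes_gb, dataset_sites),
    )
```

If 5,000 GB of sensor logs sit at a factory edge location and 20 GB of reference tables sit in a public cloud, running the job at the edge moves only 20 GB, so the edge site wins. This deliberately ignores compute price and latency targets, which a fuller model would include.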

Several key features define the Data Gravity Pattern within a hybrid cloud architecture:

- Co-location of compute and storage resources: This is the core principle, ensuring data and processing power reside in close proximity.

- Minimized data movement between environments: Reducing data transfer across the hybrid cloud divide is a primary objective.

- Edge processing capabilities for real-time analytics: Deploying compute resources at the edge, closer to data sources like IoT devices, enables real-time insights.

- Hierarchical data tiering and lifecycle management: Data can be tiered across different storage locations (e.g., hot, warm, cold storage) based on access frequency and business needs, optimizing cost and performance within the hybrid environment.

- Bandwidth-optimized data synchronization: Essential for maintaining data consistency across different locations within the hybrid cloud, employing techniques like change data capture and event-driven architectures.

The benefits of adopting the Data Gravity Pattern are substantial:

- Reduced data transfer costs and latency: Moving less data translates directly into lower network costs and faster processing times.

- Improved application performance: Applications benefit from reduced latency, delivering quicker responses and better user experiences.

- Enhanced data security through reduced movement: Minimizing data transfers reduces the attack surface and simplifies compliance with data security policies.

- Better compliance with data residency requirements: Processing data locally can be essential for adhering to specific regulatory requirements related to data sovereignty.

- Optimized bandwidth utilization: Less strain on network bandwidth frees up resources for other critical operations.

However, implementing the Data Gravity Pattern also presents certain challenges:

- Potential data silos and governance challenges: Distributing compute resources closer to data can lead to data silos if not managed carefully. Robust data governance policies are crucial.

- Complex data consistency management: Ensuring data consistency across different locations within a hybrid cloud environment requires careful planning and implementation of synchronization mechanisms.

- Increased infrastructure management overhead: Managing distributed compute resources across multiple locations can introduce complexities.

- Challenges in maintaining data freshness across locations: Real-time synchronization and data consistency mechanisms are essential to avoid using stale data.

Several real-world examples illustrate the power of the Data Gravity Pattern:

- Manufacturing companies processing IoT sensor data at factory edge locations: Analyzing sensor data locally enables real-time monitoring and control of production processes.

- Content delivery networks placing compute near cached content: Serving content from edge servers closer to users reduces latency and improves delivery speed.

- Financial services keeping trading data processing close to exchanges: Minimizing latency is critical for high-frequency trading, requiring compute resources to be located near the exchanges.

- Healthcare organizations processing medical imaging data locally: Processing large medical images locally improves the speed of diagnosis and treatment planning.

For successful implementation of the Data Gravity Pattern, consider these tips:

- Implement data cataloging to understand data gravity points: Identify where your largest and most frequently accessed datasets reside.

- Use event-driven architectures for efficient data synchronization: Trigger data synchronization based on changes, minimizing unnecessary data transfer.

- Deploy edge computing solutions for real-time processing needs: Leverage edge computing platforms for applications requiring immediate data processing.

- Establish clear data governance policies across locations: Ensure data consistency and compliance across your hybrid cloud environment.
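To make the bandwidth-saving idea behind change-based synchronization concrete, here is a small Python sketch that ships only records whose content differs from what the replica already holds. It uses content fingerprints as a simple stand-in for change data capture, which in practice usually reads a database's change log; `fingerprint` and `changed_records` are hypothetical helper names.

```python
import hashlib
import json
from typing import Dict


def fingerprint(record: dict) -> str:
    """Stable hash of a JSON-serializable record."""
    encoded = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def changed_records(source: Dict[str, dict],
                    replica_fingerprints: Dict[str, str]) -> Dict[str, dict]:
    """Return only the records that are new or modified at the source.

    `replica_fingerprints` maps record keys to the fingerprint the remote
    location last received, so unchanged records are never re-sent.
    """
    return {
        key: record for key, record in source.items()
        if replica_fingerprints.get(key) != fingerprint(record)
    }
```

Only the returned records need to cross the link between environments; the replica then stores the new fingerprints for the next comparison.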


The Data Gravity Pattern has been popularized by thought leaders like Dave McCrory, who coined the term, and is supported by major cloud providers through services like AWS Outposts, Azure Stack, and Google Anthos. These platforms facilitate the deployment and management of on-premises infrastructure that integrates with their respective cloud offerings, making it easier to implement hybrid cloud architectures based on the Data Gravity Pattern. By understanding and leveraging this pattern, organizations can build highly performant, cost-effective, and scalable hybrid cloud solutions.

4. Gateway Aggregation Pattern

The Gateway Aggregation Pattern is a powerful hybrid cloud architecture that simplifies access to services spanning both on-premises and cloud environments. It leverages API gateways to act as a central point of entry for all client requests, abstracting the complexity of the underlying infrastructure. This pattern offers a unified and consistent interface, regardless of where the backend services reside, making it a crucial element in modern hybrid cloud strategies. This approach is particularly valuable for organizations looking to leverage the flexibility of the cloud while maintaining existing on-premises investments.

This pattern works by routing all client requests through a single API gateway. The gateway then determines the appropriate backend service, whether it’s located in a private data center or a public cloud, and forwards the request. The gateway can also perform additional functions such as request transformation, protocol translation, and response aggregation. This centralization simplifies client integration and allows for consistent enforcement of security policies and monitoring across all services.
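The routing and aggregation behavior can be sketched with an in-memory gateway. This is a simplified illustration, not the API of any real gateway product: the `Gateway` class is hypothetical, and backends are plain Python callables standing in for on-premises or cloud services.

```python
from typing import Callable, Dict, List

Backend = Callable[[str], dict]  # takes a request path, returns a response body


class Gateway:
    """Single entry point that hides where each backend actually runs."""

    def __init__(self) -> None:
        self._routes: Dict[str, Backend] = {}

    def register(self, prefix: str, backend: Backend) -> None:
        """Map a path prefix to a backend (on-premises or cloud)."""
        self._routes[prefix] = backend

    def route(self, path: str) -> dict:
        """Forward the request to the backend with the longest matching prefix."""
        matches = [prefix for prefix in self._routes if path.startswith(prefix)]
        if not matches:
            return {"status": 404, "body": None}
        prefix = max(matches, key=len)
        return {"status": 200, "body": self._routes[prefix](path)}

    def aggregate(self, paths: List[str]) -> Dict[str, dict]:
        """Call several backends and return one combined response."""
        return {path: self.route(path) for path in paths}
```

A client calls only `route` or `aggregate`; moving a backend from a private data center to a public cloud means changing one `register` call, with no change on the client side.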

Several organizations have successfully implemented the Gateway Aggregation Pattern to manage their hybrid cloud environments. Netflix, with its Zuul gateway, pioneered this approach for orchestrating microservices across its vast infrastructure. Similarly, Uber uses an API gateway to handle requests directed to both on-premises and cloud-based services. E-commerce platforms utilize this pattern to aggregate inventory information from various sources, including warehouses, third-party vendors, and cloud-based databases. Even traditional sectors like banking are adopting this approach to seamlessly combine core banking systems with modern cloud-based services. These examples demonstrate the versatility and scalability of this pattern across diverse industries.

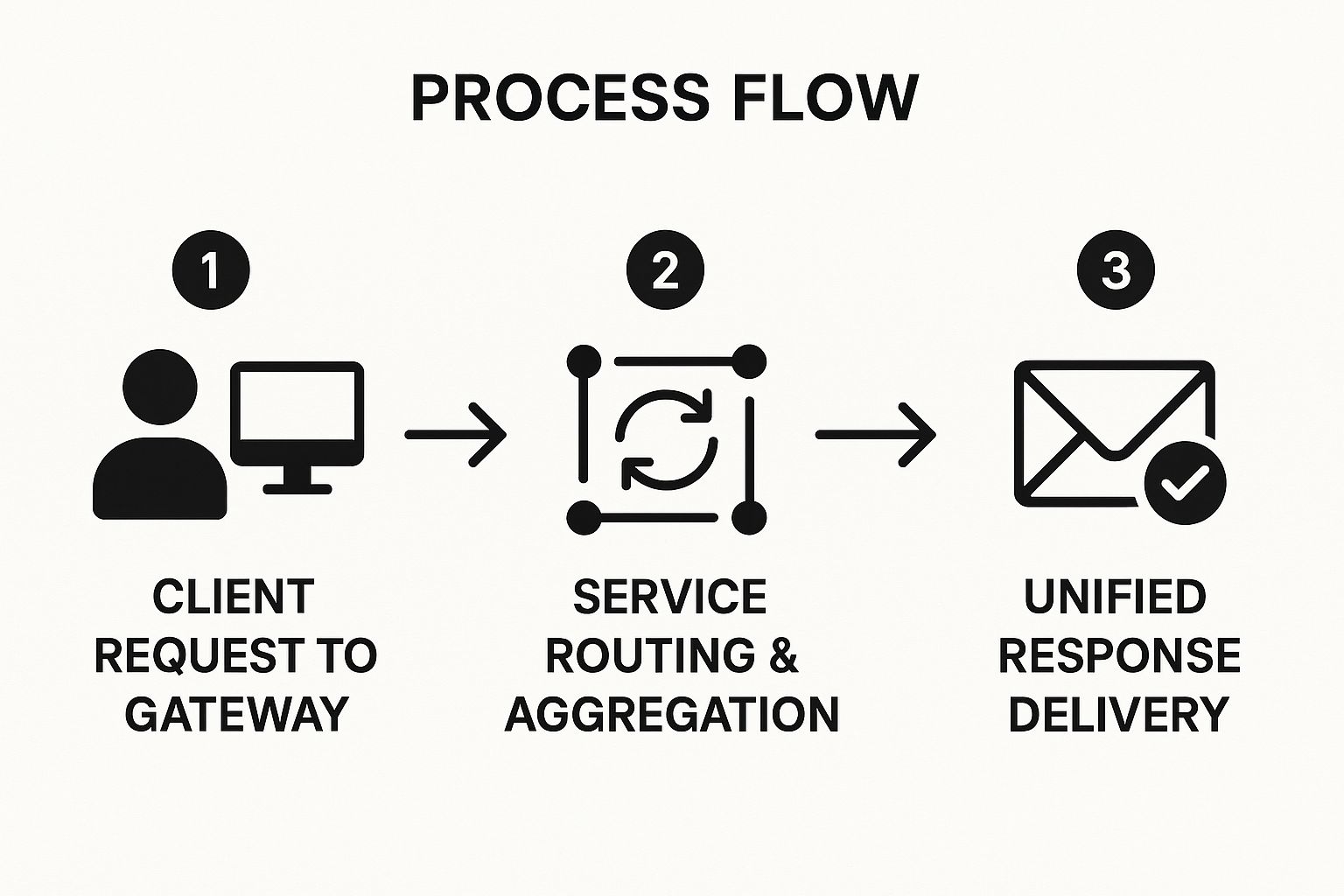

The following infographic illustrates the basic flow of a request within a Gateway Aggregation Pattern architecture:

The infographic depicts the three key steps: the client sends a request to the gateway, the gateway routes and aggregates services, and finally, the gateway delivers a unified response back to the client. This streamlined process underscores the simplicity and efficiency offered by the Gateway Aggregation Pattern.

This pattern offers numerous advantages. It provides a simplified client integration experience through a single endpoint, eliminating the need for clients to interact with multiple services directly. Centralized security and policy enforcement become significantly easier to manage through the gateway. The pattern also reduces client-server communication overhead by optimizing requests and aggregating responses. Furthermore, it facilitates seamless service migration between on-premises and cloud environments without impacting clients. Finally, it enables consistent API versioning and documentation, streamlining development and maintenance.

However, it’s crucial to be aware of the potential downsides. The API gateway introduces a potential single point of failure. While this risk can be mitigated with proper redundancy and failover mechanisms, it remains a consideration. The additional network hop introduced by the gateway can also add latency to request processing. Under heavy load, the gateway can become a performance bottleneck. Lastly, configuring and managing a sophisticated API gateway can introduce complexity.

To effectively implement the Gateway Aggregation Pattern, consider the following tips:

- Implement gateway clustering for high availability and redundancy.

- Leverage caching strategies to improve performance and reduce latency.

- Design for graceful degradation to handle situations where backend services are unavailable.

- Continuously monitor gateway performance metrics and implement circuit breakers to prevent cascading failures.
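A circuit breaker with a fallback is one way to combine the graceful degradation and cascading-failure tips. The Python sketch below is a minimal, single-threaded illustration; the `CircuitBreaker` class, its default thresholds, and the injectable `clock` parameter are assumptions made for this example rather than part of any specific gateway.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Stops calling a failing backend for a cool-down period."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, backend: Callable[[], object],
             fallback: Callable[[], object]) -> object:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                return fallback()   # open: fail fast without touching the backend
            self._opened_at = None  # half-open: allow one trial request
        try:
            result = backend()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            return fallback()       # degrade gracefully, e.g. serve cached data
        self._failures = 0          # success closes the circuit
        return result
```

While the circuit is open, the gateway answers from the fallback (for example, a cached response) instead of queuing requests against a backend that is already struggling.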

The Gateway Aggregation Pattern deserves a place in this list because it provides a robust and scalable solution for managing the complexity of hybrid cloud architectures. Its ability to unify access to disparate services while simplifying client integration and enhancing security makes it an essential pattern for organizations looking to fully realize the potential of hybrid cloud. Popularized by solutions like Netflix Zuul, Amazon API Gateway, Kong, and Ambassador, this pattern has proven its effectiveness in diverse real-world scenarios. By weighing the pros and cons and following the implementation tips above, businesses can use the Gateway Aggregation Pattern to create a more agile, efficient, and secure hybrid cloud infrastructure.

5. Strangler Fig Pattern

The Strangler Fig Pattern is a powerful hybrid cloud architecture strategy that allows organizations to gradually migrate legacy on-premises systems to the cloud, minimizing disruption and risk. Just as a strangler fig gradually envelops and replaces its host tree, this pattern incrementally replaces the functionality of existing applications with new cloud-based services. This approach is particularly valuable within hybrid cloud architectures, allowing companies to leverage cloud benefits while maintaining existing systems until fully transitioned. For startups seeking scalable, cost-effective solutions, enterprise IT departments modernizing legacy systems, and the architects, DevOps teams, and CTOs who plan and run those transitions, the Strangler Fig Pattern offers a controlled and effective migration pathway.

This method works by creating a “facade” layer that intercepts requests to the legacy system. This facade acts as a router, directing traffic to either the existing on-premises application or the new cloud-based services. As more functionality is migrated to the cloud, more traffic is routed to the new services. Eventually, the legacy system becomes redundant and can be safely decommissioned, leaving the new cloud-based system fully in control.

How it Works:

- Identify a Module: Start by identifying a well-defined, non-critical module within the legacy application to migrate.

- Develop in the Cloud: Build the equivalent functionality for the chosen module in the cloud environment, leveraging cloud-native services and technologies.

- Implement the Facade: Create the facade layer to manage traffic routing. This layer intercepts incoming requests and directs them to either the legacy system or the new cloud-based module, depending on the specific function being requested.

- Test and Monitor: Thoroughly test the new cloud-based module and the facade layer to ensure they function correctly and meet performance requirements. Implement robust monitoring to track performance and identify potential issues.

- Gradual Traffic Shifting: Gradually shift traffic from the legacy module to the new cloud-based module. This can be achieved using techniques like feature flags or weighted routing.

- Rollback Capability: Implement a mechanism for rolling back to the legacy system if any issues arise during the migration process.

- Repeat: Repeat the steps above for other modules within the legacy application, gradually migrating more and more functionality to the cloud.
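The facade, gradual traffic shifting, and rollback steps above can be sketched as follows. This is a hedged illustration: `StranglerFacade` and its methods are hypothetical, the legacy and modern systems are represented by plain callables, and the hash-based bucketing is just one simple way to implement weighted routing.

```python
import hashlib
from typing import Callable, Dict

Handler = Callable[[str, dict], dict]  # (feature, payload) -> response


class StranglerFacade:
    """Routes each request to the legacy system or its cloud replacement."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self._legacy = legacy
        self._modern = modern
        self._rollout: Dict[str, int] = {}  # feature -> percent of users on modern

    def set_rollout(self, feature: str, percent: int) -> None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._rollout[feature] = percent

    def rollback(self, feature: str) -> None:
        """Send all traffic for a feature back to the legacy system."""
        self._rollout[feature] = 0

    @staticmethod
    def _bucket(feature: str, user_id: str) -> int:
        # Deterministic bucket in [0, 100) so a user keeps the same route.
        digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def handle(self, feature: str, user_id: str, payload: dict) -> dict:
        percent = self._rollout.get(feature, 0)  # unmigrated features stay legacy
        if self._bucket(feature, user_id) < percent:
            return self._modern(feature, payload)
        return self._legacy(feature, payload)
```

Raising the percentage for a feature shifts more users to the cloud module; calling `rollback` returns every user to the legacy path without a redeploy.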

Successful Implementations:

Several prominent organizations have successfully utilized the Strangler Fig Pattern for their cloud migration initiatives. Monzo Bank leveraged this pattern for their transition from legacy banking systems to a cloud-native architecture, enabling them to scale rapidly and offer innovative financial services. Similarly, The Guardian employed this approach to migrate their monolithic Content Management System (CMS) to a microservices architecture, enhancing flexibility and agility. Walmart and British Airways have also used this pattern for modernizing their legacy systems, demonstrating its efficacy across diverse industries.

Actionable Tips:

- Start Small: Begin with less critical, well-defined components to minimize risk and gain experience.

- Comprehensive Monitoring: Implement detailed monitoring during each phase to detect and address issues promptly.

- Feature Flags: Use feature flags to control traffic routing and enable quick rollbacks if necessary.

- Data Synchronization: Plan for data synchronization between the legacy and new systems to maintain data consistency.

Pros and Cons:

Pros:

- Minimizes migration risks through a phased approach.

- Allows for continuous operation during the migration process.

- Enables learning and adjustment during the transition.

- Reduces the impact on end-users.

- Provides rollback options at each phase.

Cons:

- Can lead to an extended migration timeline and increased costs.

- Introduces complexity in maintaining two systems concurrently.

- Presents potential data consistency challenges.

- Requires careful planning and coordination.

When and Why to Use This Approach:

The Strangler Fig Pattern is particularly suited for:

- Large, complex legacy systems: It allows for a controlled, incremental migration, reducing the risk associated with a “big bang” approach.

- Applications with high uptime requirements: The gradual transition minimizes downtime and disruption to end-users.

- Organizations seeking to adopt cloud-native architectures: It enables the gradual introduction of cloud-native services and technologies.

This pattern provides a robust framework for migrating to a hybrid cloud architecture, maximizing benefits and mitigating risks throughout the transition. Managing the complexity of running legacy and modern systems side by side, and addressing data consistency challenges, is crucial for successful implementation. With careful planning and execution, the Strangler Fig Pattern can pave the way for a successful journey to the cloud.

6. Event-Driven Integration Pattern

In hybrid cloud architectures, seamless integration between on-premises and cloud-based systems is paramount. The Event-Driven Integration Pattern addresses this need by using event streams and messaging for asynchronous communication. It promotes loose coupling, enabling real-time data synchronization and automated business processes across hybrid environments, so businesses can connect existing on-premises infrastructure with the agility and scalability of the cloud.

How it Works:

The core concept revolves around “events” – occurrences signifying a change in state or a noteworthy action within a system. For instance, a new customer registration, a completed order, or a sensor reading could all be represented as events. These events are published onto an event stream, a continuously flowing sequence of data records. Subscribers, which can be various applications or services in either the cloud or on-premises environment, then listen to the stream and react to relevant events. This decoupled architecture means systems don’t need to know about each other directly; they only interact through the event stream.
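The publish and subscribe mechanics can be shown with a tiny in-memory stream. This sketch is a teaching aid under clear assumptions: `EventStream` is a hypothetical class, and it delivers events synchronously in one process, whereas a real broker delivers them asynchronously across the network.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Subscriber = Callable[[dict], None]


class EventStream:
    """Append-only event log with per-type subscribers."""

    def __init__(self) -> None:
        self._log: List[dict] = []
        self._subscribers: Dict[str, List[Subscriber]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Subscriber) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Append the event to the log, notify subscribers, return its offset."""
        event = {"type": event_type, "payload": payload, "offset": len(self._log)}
        self._log.append(event)
        for handler in self._subscribers[event_type]:
            handler(event)
        return event["offset"]
```

The publisher of an `order.completed` event never references the on-premises billing system or the cloud analytics service that subscribe to it; both sides depend only on the stream.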

Benefits for Hybrid Cloud Architectures:

The Event-Driven Integration Pattern offers several key advantages for hybrid cloud setups:

- Loose Coupling: Systems evolve independently without impacting each other. This flexibility is crucial in hybrid environments where on-premises systems might have different update cycles than cloud-based services.

- Real-time Data Synchronization: Event streams facilitate near instantaneous data propagation across the hybrid environment, ensuring consistency and enabling real-time analytics and decision-making.

- Scalability and Fault Tolerance: Event brokers, like Apache Kafka, are inherently distributed and can handle large volumes of events. This scalability is essential for handling peak loads and ensuring system resilience.

- Simplified Integration with Microservices: This pattern aligns perfectly with microservices architectures, allowing individual services to communicate asynchronously through events, fostering independent deployments and scalability.

- Auditing and Monitoring: The immutable nature of events provides a natural audit trail, simplifying debugging and monitoring. Tracking the flow of events offers valuable insights into system behavior and performance.

Examples of Successful Implementation:

Several leading companies have successfully implemented event-driven architectures within their hybrid cloud strategies:

- Airbnb: Utilizes event-driven architecture for booking and payment processing, enabling real-time updates and a seamless user experience across their platform.

- Uber: Leverages real-time event processing for ride matching and tracking, ensuring efficient dispatch and accurate location updates.

- Netflix: Employs an event-driven content recommendation and delivery system, personalizing the user experience and optimizing content distribution.

- Zalando: Their fashion e-commerce platform utilizes event-driven inventory management for real-time stock updates and optimized logistics.

Actionable Tips for Implementation:

- Design Immutable Events: Events should be self-contained and represent a single, factual occurrence. Avoid changing events after they are published.

- Implement Robust Error Handling: Utilize dead-letter queues to handle message delivery or processing failures, ensuring data integrity and system stability.

- Version Your Events: Implement event versioning to maintain backward compatibility as your systems evolve. This ensures that older systems can still process events even after schema changes.

- Monitor Performance: Track event processing latency and throughput to identify bottlenecks and optimize performance. Tools like Prometheus and Grafana can be invaluable for this.
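Three of the tips above (immutable events, versioning, and dead-letter handling) fit into one short Python sketch. The `Event` type, the `SUPPORTED_VERSIONS` set, and the `consume` function are hypothetical names chosen for illustration; a production consumer would rely on the broker's own retry and dead-letter features.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Event:
    event_type: str
    schema_version: int
    payload: tuple  # tuple of (key, value) pairs keeps the payload immutable


SUPPORTED_VERSIONS = {1, 2}  # assumption: versions this consumer understands


def consume(events: Iterable[Event], handler: Callable[[Event], object],
            max_attempts: int = 3) -> Tuple[List[object], List[tuple]]:
    """Process events, parking the ones that cannot be handled.

    Returns (processed results, dead letters). A dead letter is a pair of
    (event, reason) kept for later inspection instead of being dropped.
    """
    processed: List[object] = []
    dead_letters: List[tuple] = []
    for event in events:
        if event.schema_version not in SUPPORTED_VERSIONS:
            dead_letters.append((event, "unsupported schema version"))
            continue
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(event))
                break
            except Exception as exc:
                if attempt == max_attempts:
                    dead_letters.append((event, repr(exc)))
    return processed, dead_letters
```

Events with an unknown schema version or a handler that keeps failing end up in the dead-letter list, so one bad message does not block the rest of the stream.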

Pros and Cons:

While powerful, this pattern has its tradeoffs:

Pros:

- High scalability and fault tolerance

- Real-time processing capabilities

- Loose coupling enabling independent system evolution

- Natural audit trail through event logs

- Supports complex business process orchestration

Cons:

- Eventual consistency challenges

- Complex event ordering and duplicate handling

- Debugging and monitoring complexity

- Potential message delivery and processing failures

Popular Technologies:

Several technologies facilitate implementing event-driven architectures within hybrid cloud environments:

- Apache Kafka: A robust, distributed streaming platform ideal for high-volume event processing.

- Amazon EventBridge: A serverless event bus that simplifies event ingestion and routing within AWS and hybrid environments.

- Azure Event Grid: A fully managed event routing service in Azure, designed for large-scale event delivery.

- Google Cloud Pub/Sub: A scalable messaging service for distributing events within Google Cloud and hybrid setups.

The Event-Driven Integration Pattern is a valuable asset in architecting modern hybrid cloud solutions. Its ability to enable real-time data synchronization, facilitate loose coupling, and support scalable processing makes it an essential consideration for organizations seeking to leverage the full potential of their hybrid infrastructure. By carefully considering the tips and best practices mentioned above, organizations can successfully implement event-driven architectures and reap the benefits of a more agile and responsive hybrid cloud environment.

7. Tiered Storage Pattern

The Tiered Storage Pattern is a crucial component of efficient hybrid cloud architectures, enabling organizations to optimize both storage costs and performance. This pattern leverages the flexibility of a hybrid environment by strategically distributing data across different storage tiers based on access frequency, age, business requirements, and compliance needs. This makes it an essential consideration for startups, enterprises, cloud architects, and anyone looking to maximize the value of their hybrid cloud infrastructure.

Essentially, a tiered storage architecture mimics the way we organize information in our daily lives. Frequently used items are kept close at hand, while less frequently accessed items are stored away. In the cloud context, this translates to placing frequently accessed “hot” data on high-performance storage, either on-premises or in SSD-backed premium cloud storage tiers. Less frequently accessed “warm” data resides in more cost-effective standard cloud storage tiers, often based on HDDs. Finally, rarely accessed “cold” data, such as archives and backups, is stored in the lowest-cost long-term archival storage, such as the archive classes of cloud object storage.

This dynamic allocation of data across different tiers is managed through automated data lifecycle management policies. These policies dictate when and how data moves between tiers based on pre-defined criteria, such as age, access frequency, or specific tags. For example, a policy might automatically move data from hot storage to warm storage after 30 days of inactivity and then to cold storage after 90 days. This automated movement ensures that data is always stored in the most cost-effective tier while maintaining accessibility.
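
The 30-day and 90-day example above can be sketched as a small policy function. This is a simplified illustration, not any provider's lifecycle API: the function names, the tier labels, and the in-memory object catalogue are assumptions, and the thresholds simply mirror the example in the text.

```python
from datetime import date
from typing import Dict, List, Tuple

HOT_TO_WARM_DAYS = 30   # idle days before hot data moves to warm
WARM_TO_COLD_DAYS = 90  # idle days before data moves to cold


def target_tier(last_accessed: date, today: date) -> str:
    """Return the tier an object should live in, based on idle days."""
    idle_days = (today - last_accessed).days
    if idle_days >= WARM_TO_COLD_DAYS:
        return "cold"
    if idle_days >= HOT_TO_WARM_DAYS:
        return "warm"
    return "hot"


def plan_moves(
    objects: Dict[str, Tuple[str, date]], today: date
) -> List[Tuple[str, str, str]]:
    """List (name, current_tier, new_tier) for objects in the wrong tier.

    `objects` maps an object name to (current_tier, last_accessed).
    Recently accessed objects in a colder tier are planned for promotion.
    """
    moves = []
    for name, (current, last_accessed) in objects.items():
        wanted = target_tier(last_accessed, today)
        if wanted != current:
            moves.append((name, current, wanted))
    return moves
```

Separating the decision (`target_tier`) from the plan (`plan_moves`) makes it easy to dry-run a policy against a catalogue before any data is actually moved.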

The benefits of this tiered approach are significant:

- Cost Savings: Perhaps the most compelling advantage is the substantial cost savings achieved by storing data in the appropriate tier. Archiving infrequently accessed data in cheaper storage significantly reduces overall storage expenses.

- Performance Optimization: Keeping frequently accessed data in high-performance storage ensures optimal application performance and responsiveness. This is critical for businesses that rely on real-time data processing and low-latency access.

- Automated Compliance: Tiered storage simplifies compliance with data retention policies by automating the movement and deletion of data based on predefined rules. This is particularly relevant for industries with stringent regulatory requirements, such as finance and healthcare.

- Scalability: A tiered architecture provides inherent scalability, allowing organizations to easily adapt to changing storage needs. As data volumes grow, the architecture can seamlessly accommodate the increased capacity across different tiers.

- Reduced Management Overhead: Automating the data lifecycle management process reduces the administrative burden on IT teams, freeing up resources for more strategic initiatives.

However, there are some potential challenges to consider:

- Retrieval Delays: Retrieving data from archival storage can take anywhere from minutes to hours, because the data often has to be restored from tape or other offline media before it can be read. Factor this in for any data that may need to be retrieved at short notice.

- Policy Management Complexity: Defining and managing data lifecycle policies can be complex, requiring careful planning and ongoing monitoring. Poorly tuned rules trigger unnecessary data movement and the costs that come with it.

- Predicting Data Access Patterns: Accurately predicting future data access patterns can be challenging. Inaccurate predictions can lead to suboptimal data placement and impact performance or cost efficiency.

- Vendor Lock-in: Opting for proprietary tiering solutions can lead to vendor lock-in, making it difficult to migrate to a different platform in the future.

Several examples highlight the effectiveness of tiered storage in hybrid cloud architectures:

- Media Companies: Media companies can store recently released content on high-performance storage for optimal streaming performance, while archiving older content in cheaper storage tiers.

- Healthcare Organizations: Healthcare providers can tier patient records based on access frequency, with frequently accessed records on faster storage and older, less frequently accessed records in archival storage.

- Financial Services: Financial institutions can manage trading data with regulatory retention requirements by automatically moving data to different tiers based on age and compliance needs.

To implement a successful tiered storage strategy, consider the following tips:

- Analyze Data Access Patterns: Thoroughly analyze historical data access patterns to inform the design of your tiering policies.

- Metadata Tracking: Implement metadata tracking to facilitate intelligent tier management and data retrieval.

- Retrieval SLAs: Consider data retrieval service level agreements (SLAs) when designing the tier architecture to ensure timely access to data when needed.

- Regular Review and Optimization: Regularly review and optimize tiering policies to adapt to changing business needs and data access patterns.

Major cloud providers offer mature tiered storage options such as Amazon S3 Intelligent-Tiering, Azure Blob Storage access tiers, and Google Cloud Storage classes, alongside vendor solutions like NetApp FabricPool, demonstrating the widespread adoption and maturity of this hybrid cloud pattern. By carefully weighing the features, benefits, and potential challenges, organizations can use tiered storage to achieve significant cost savings, optimize performance, and strengthen their overall hybrid cloud strategy.

8. Container Orchestration Pattern

The Container Orchestration Pattern is a powerful approach to building hybrid cloud architectures, allowing organizations to seamlessly deploy and manage applications across on-premises infrastructure and cloud environments. This pattern leverages containerization and orchestration platforms, such as Kubernetes, to abstract away the underlying infrastructure complexities and provide a consistent operational experience. It’s becoming increasingly crucial for businesses seeking agility, scalability, and cost optimization in their IT operations. This approach enables portable, scalable applications that can run seamlessly across different infrastructure while maintaining operational consistency, making it a key component of modern hybrid cloud strategies.

At its core, this pattern involves packaging applications and their dependencies into containers, lightweight and portable units of software. These containers are then managed by an orchestration platform, which automates tasks like deployment, scaling, networking, and health monitoring across different environments. This allows developers to focus on building applications rather than managing infrastructure.

The Container Orchestration Pattern offers several key features that make it ideal for hybrid cloud architectures: container-based application packaging and deployment ensures consistency across environments; orchestrated scaling and load balancing allows applications to dynamically adapt to changing demands; service discovery and networking facilitate communication between services across hybrid environments; declarative configuration management simplifies infrastructure provisioning and management; and rolling updates and rollback capabilities enable safe and reliable deployments.
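
Declarative configuration is easiest to picture as a reconciliation step: the operator states the desired replica count per service, and the orchestrator works out what to start or stop. The sketch below is a toy model of that idea, not Kubernetes code; the `reconcile` function and the service names in the test are hypothetical.

```python
from typing import Dict, List, Tuple


def reconcile(
    desired: Dict[str, int], actual: Dict[str, int]
) -> List[Tuple[str, str, int]]:
    """Compare desired and actual replica counts per service.

    Returns a list of (action, service, count) tuples, where action is
    "start" or "stop". Services missing from `desired` are scaled to zero.
    """
    actions = []
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if want > have:
            actions.append(("start", service, want - have))
        elif want < have:
            actions.append(("stop", service, have - want))
    return actions
```

Because the input is the desired end state rather than a list of commands, running the same reconciliation against an on-premises cluster and a cloud cluster converges both to the same shape.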

The advantages of adopting this pattern are numerous. Application portability across diverse environments, from on-premises data centers to public cloud providers, is a major benefit. This flexibility enables businesses to avoid vendor lock-in and choose the best infrastructure for their specific needs. Consistent deployment and operational practices simplify management and reduce the risk of errors. Efficient resource utilization, enabled by the dynamic scaling capabilities of container orchestration, helps optimize costs. Simplified scaling and management allows businesses to quickly respond to changing market demands. Finally, a strong ecosystem and tooling support provides a wealth of resources and expertise.

However, there are also challenges to consider. There’s a learning curve associated with containerization technologies like Docker and Kubernetes. The added layer of container orchestration introduces additional infrastructure complexity. Security considerations for the container runtime environment are crucial. And managing networking in hybrid cloud scenarios can be complex.

Several companies have successfully implemented this pattern, demonstrating its effectiveness in real-world scenarios. Spotify uses containerized microservices across multiple cloud providers for its music streaming platform. ING Bank leverages a container-based digital banking platform for enhanced agility and scalability. The New York Times uses a containerized content management system for efficient content delivery. And Adidas uses container orchestration for its e-commerce platform to handle peak traffic during major sporting events.

To successfully implement the Container Orchestration Pattern, consider the following tips. Use Infrastructure as Code (IaC) for consistent cluster deployment across environments. Implement proper resource limits and monitoring to ensure optimal performance and cost control. Design applications following the 12-factor principles for cloud-native best practices. And establish security scanning and compliance processes to mitigate security risks.
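
As a small example of the resource-limits tip, the sketch below checks a Deployment-style manifest, represented as a plain Python dictionary, for containers that lack CPU or memory limits. The nesting follows the usual Kubernetes Deployment layout (`spec.template.spec.containers[].resources.limits`); the function name and the idea of running it as a pre-deployment check are assumptions made for illustration.

```python
from typing import Any, Dict, List


def containers_missing_limits(manifest: Dict[str, Any]) -> List[str]:
    """Return names of containers without both cpu and memory limits."""
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    missing = []
    for container in pod_spec.get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        if "cpu" not in limits or "memory" not in limits:
            missing.append(container.get("name", "<unnamed>"))
    return missing
```

A check like this could run in a CI pipeline next to image scanning, so that manifests without limits are flagged before they reach any cluster.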

This pattern is particularly beneficial for startups and early-stage companies looking for scalable and cost-effective infrastructure solutions, enterprise IT departments modernizing their legacy applications, cloud architects and developers building hybrid cloud applications, DevOps and infrastructure teams seeking to automate and streamline operations, and business decision-makers and CTOs evaluating strategic technology investments. The Container Orchestration Pattern’s ability to bridge the gap between on-premises and cloud environments makes it a valuable asset in today’s increasingly hybrid IT landscape.

If you’re considering alternative container orchestration platforms, explore the options that might better suit your specific needs. Whichever platform you choose, the Container Orchestration Pattern provides a robust, scalable, and portable way to manage applications across complex hybrid cloud architectures.

Hybrid Cloud Architecture Patterns Comparison

| Architecture Type | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Cloud Bursting Pattern | High – requires complex network, monitoring, orchestration | Moderate to High – hybrid private + public cloud resources | Elastic scalability, cost-optimized utilization | Seasonal spikes, backup, big data workloads | Cost-effective scaling, security control, DR capabilities |
| Multi-Cloud Federation Pattern | Very High – multi-cloud management, orchestration, governance | High – multiple cloud providers and networking | Vendor independence, geographic distribution | Applications using best services from multiple clouds | Avoids vendor lock-in, enhanced DR, centralized control |
| Data Gravity Pattern | Moderate – managing data locality and edge compute | Moderate – distributed compute and storage near data | Low latency, reduced data movement costs | IoT processing, content delivery, financial data local processing | Reduced latency, improved performance, compliance support |
| Gateway Aggregation Pattern | Moderate – API gateway config, routing, security | Moderate – relies on API gateway resources | Simplified client integration, unified interface | Microservices orchestration, hybrid service aggregation | Centralized security, reduced client overhead, ease of migration |
| Strangler Fig Pattern | Moderate to High – phased migration with dual systems | Moderate – legacy + new systems operation | Gradual legacy system replacement | Legacy modernization, incremental migration | Minimizes migration risk, rollback options, continuous ops |
| Event-Driven Integration Pattern | High – event stream design, monitoring, eventual consistency handling | Moderate – messaging infrastructure | Real-time processing, loose coupling | Real-time data sync, business automation | Scalability, fault tolerance, auditability |
| Tiered Storage Pattern | Moderate – automated lifecycle and tier policy management | Variable – depends on storage tiers | Cost savings, performance optimization | Data cost optimization, compliance, archival | Automated cost/performance balance, scalable storage |
| Container Orchestration Pattern | High – containerized deployments, orchestration, networking complexity | Moderate to High – container runtime + orchestration platforms | Portable, scalable deployments | Hybrid cloud apps, microservices, scalable platforms | Portability, consistent operations, efficient scaling |

Embracing the Future of Hybrid Cloud with Signiance

From cloud bursting for handling peak loads to leveraging the tiered storage pattern for cost optimization, this article has explored eight key hybrid cloud architectures that empower businesses to tailor their cloud infrastructure to unique needs. Mastering these patterns and understanding where they fit within your organization is crucial for maximizing the benefits of a hybrid cloud strategy – increased agility, enhanced scalability, improved cost-efficiency, and robust security. Successfully implementing these architectures allows you to strategically position your business for growth and innovation in today’s dynamic digital landscape, especially within the rapidly evolving IN region.

Hybrid cloud architectures are not a one-size-fits-all solution. The right approach depends on your specific business objectives, technical capabilities, and regulatory requirements. Choosing the right pattern or combination of patterns is vital for achieving the desired outcomes, whether you’re a startup looking for flexibility or an enterprise seeking enhanced security and control. The power of hybrid cloud lies in its adaptability, enabling you to optimize your infrastructure for both current and future needs.

Ready to harness the full potential of hybrid cloud architectures and navigate the complexities of cloud implementation? Signiance Technologies specializes in helping businesses like yours design, implement, and manage robust hybrid cloud solutions tailored to your unique requirements. Visit Signiance Technologies today to explore how we can help you build a future-proof cloud strategy.