In today’s fast-paced digital economy, a slow or unavailable application is more than an inconvenience; it can be disastrous for business operations and customer trust. The cornerstone of high performance, scalability, and resilience is the effective distribution of network traffic across your server infrastructure. This is precisely the role of load balancing. However, not all approaches yield the same results. A deep understanding of the various load balancing methods is essential for any organisation aiming to build a robust and efficient system architecture.

From the simple, cyclical nature of Round Robin to the more sophisticated, dynamic logic of Resource-Based algorithms, each method provides a distinct strategy for managing incoming requests. Selecting the appropriate algorithm can noticeably improve user experience, reduce the risk of server overloads, and help keep your services online and responsive, even during unpredictable traffic surges.

This guide is designed to provide clear, actionable insights into seven essential load balancing techniques. We will explore how each one functions, analyse its specific pros and cons, and identify the ideal use cases and environments where it excels. This will empower you to make an informed, strategic decision that aligns perfectly with your infrastructure’s unique demands and business objectives.

1. Round Robin Load Balancing

As one of the most fundamental and widely used load balancing methods, Round Robin operates on a simple, rotational principle. It distributes incoming network or application traffic sequentially across a group of servers. The first request goes to the first server in the list, the second to the second, and so on, cycling back to the first server once it reaches the end of the list.

This method does not analyse server load or capacity; its logic is purely cyclical. Think of it as a dealer distributing cards one by one to a group of players. Each player (server) gets a turn in a fixed, predictable order, ensuring a basic level of distribution. This simplicity is its greatest strength and makes it a default setting in many popular load balancers like NGINX, HAProxy, and AWS Application Load Balancers.

How It Works and When to Use It

The primary advantage of Round Robin is its ease of implementation and minimal computational overhead. Because the load balancer doesn’t need to evaluate server load or connection counts, it can forward traffic extremely quickly. This makes it an ideal choice for environments where the backend servers are homogeneous, that is, they have similar processing power, memory, and network capacity.

However, its major drawback is its lack of intelligence. If one server is struggling with a resource-intensive request, Round Robin will continue sending it new requests, potentially leading to server overload and failure while other servers remain underutilised.
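
The rotation described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular load balancer implements it; the class name `RoundRobinBalancer` and the server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order, ignoring their load."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list endlessly: 1, 2, 3, 1, 2, 3, ...
        self._rotation = cycle(list(servers))

    def next_server(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
print(picks)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Note that nothing in `next_server` looks at how busy a server is, which is exactly the lack of intelligence described above.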

Actionable Tips for Implementation

To make the most of the Round Robin method, consider these practical tips:

- Ensure Server Homogeneity: Use this algorithm when your server pool consists of machines with nearly identical specifications. This minimises the risk of one server becoming a bottleneck.

- Combine with Health Checks: Always implement active health checks. This allows the load balancer to temporarily remove a failed or unresponsive server from the rotation, preventing traffic from being sent to a dead endpoint and improving overall reliability.

- Consider Weighted Round Robin: If your servers have different capacities, use Weighted Round Robin instead. This variation allows you to assign a “weight” to each server, directing a proportionally higher number of requests to more powerful machines.

- Implement Session Persistence: For applications that require users to stay on the same server for a session (e.g., e-commerce shopping carts), you must enable session persistence (sticky sessions). Otherwise, a user’s subsequent requests could land on different servers, leading to a loss of session data.
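
As a rough sketch of the health-check tip, the rotation can simply skip servers that a health checker has marked as down. The class and method names here (`HealthAwareRoundRobin`, `mark_down`, `mark_up`) are hypothetical, and the health checker itself is assumed to exist elsewhere.

```python
class HealthAwareRoundRobin:
    """Round Robin that skips servers currently marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._index = 0

    def mark_down(self, server):
        # Called by a (hypothetical) health checker when a probe fails.
        self._healthy.discard(server)

    def mark_up(self, server):
        # Called when the server passes its probes again.
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, preserving the rotation order.
        for _ in range(len(self._servers)):
            candidate = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


pool = HealthAwareRoundRobin(["app-1", "app-2", "app-3"])
pool.mark_down("app-2")
print([pool.next_server() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```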

2. Weighted Round Robin Load Balancing

Weighted Round Robin builds upon the simplicity of the standard Round Robin method by introducing a critical element of intelligence: server capacity. Instead of treating every server as equal, this algorithm allows administrators to assign a “weight” to each server, typically based on its processing power, memory, or overall capacity. Servers with a higher weight receive a proportionally larger share of incoming traffic.

This method addresses the primary shortcoming of basic Round Robin, which can easily overwhelm less powerful servers in a mixed-capacity environment. Think of it as a more sophisticated dealer who gives more cards to players with a proven ability to handle them. This approach is a standard feature in leading load balancers like NGINX, F5 BIG-IP, and Amazon’s Elastic Load Balancing (ELB) with weighted target groups, making it one of the most practical and widely-used load balancing methods.

How It Works and When to Use It

The logic is straightforward: if Server A has a weight of 5 and Server B has a weight of 1, the load balancer will forward five requests to Server A for every one request it sends to Server B. This ensures that more powerful machines do more work, leading to optimised resource utilisation and preventing weaker servers from becoming performance bottlenecks.

This method is the ideal choice for heterogeneous server environments, where your server pool consists of machines with varying specifications. It provides a significant step up from basic Round Robin without introducing the complexity of dynamic algorithms that constantly monitor server health. It’s perfect when you have predictable differences in server capabilities and want a simple, effective way to balance traffic accordingly.
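
To make the 5:1 example concrete, here is a small sketch of one common way to implement weighting, often called smooth weighted round robin. It is one possible scheme, not necessarily what your load balancer uses, and the class name is hypothetical.

```python
from collections import Counter


class SmoothWeightedRoundRobin:
    """Spreads picks in proportion to each server's weight."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self._weights = dict(weights)
        self._current = {server: 0 for server in weights}
        self._total = sum(weights.values())

    def next_server(self):
        # Every server earns credit equal to its weight on each pick...
        for server, weight in self._weights.items():
            self._current[server] += weight
        # ...and the server with the most credit is chosen and charged the total.
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen


wrr = SmoothWeightedRoundRobin({"server-a": 5, "server-b": 1})
counts = Counter(wrr.next_server() for _ in range(6))
print(counts["server-a"], counts["server-b"])  # 5 1
```

Over any full cycle of six requests, Server A receives five and Server B receives one, matching the ratio described above.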

Actionable Tips for Implementation

To effectively implement Weighted Round Robin, consider these practical strategies:

- Base Weights on Concrete Metrics: Assign weights based on tangible server specifications like CPU cores, RAM, and network bandwidth. A server with double the resources of another could logically start with double the weight.

- Start Conservatively and Adjust: Begin with modest weight differences (e.g., 3:2 instead of 10:1) and monitor server performance closely. Gradual adjustments based on real utilisation data prevent drastic traffic shifts that could cause new issues.

- Regularly Review and Tune Weights: Server performance can change over time due to hardware degradation or software updates. Periodically review your weight assignments and tune them based on current performance metrics to ensure they remain effective.

- Combine with Health Checks: Just like standard Round Robin, you must use this method with active health checks. This ensures that if a high-weight server fails, it is immediately removed from the rotation, preventing a significant portion of your traffic from being sent to a dead endpoint.
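
As a starting point for the first tip, weights can be derived from a hardware metric and reduced to the smallest whole-number ratio. This sketch deliberately uses a single metric (CPU cores) for brevity; the function name and the server names are hypothetical, and in practice you would refine the result with real utilisation data.

```python
from functools import reduce
from math import gcd


def weights_from_cores(cores_by_server):
    """Turn CPU core counts into the smallest equivalent integer weights."""
    divisor = reduce(gcd, cores_by_server.values())
    return {server: cores // divisor for server, cores in cores_by_server.items()}


print(weights_from_cores({"large": 16, "small": 8}))  # {'large': 2, 'small': 1}
```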

3. Least Connections Load Balancing

Moving beyond simple rotation, the Least Connections method is a dynamic and intelligent algorithm that directs traffic based on real-time server load. It forwards new incoming requests to the backend server with the fewest active connections at that moment. This approach is designed to distribute the workload more evenly, preventing any single server from becoming a bottleneck.

This method actively measures how busy each server is, making it a smarter alternative to static methods like Round Robin. Imagine a supermarket checkout where new customers are guided to the cashier with the shortest queue. This ensures that no single cashier is overwhelmed while others are idle, optimising the flow for everyone. This dynamic nature makes it a core feature in load balancers like HAProxy, NGINX (with its least_conn directive), and cloud-native solutions like the AWS Application Load Balancer (which offers a closely related least outstanding requests algorithm).

How It Works and When to Use It

The primary benefit of the Least Connections method is its ability to adapt to varying server loads and request processing times. It is particularly effective for applications where sessions or requests have different durations. For example, if one server is tied up with a long-running database query, the load balancer will intelligently route new, short requests to less busy servers, maintaining overall application responsiveness.

However, it assumes all connections require similar processing power once established. If some connections are far more resource-intensive than others despite being a single connection, imbalances can still occur. It also adds a small amount of computational overhead, as the load balancer must track active connections for every server in the pool.
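
The selection logic can be sketched as a counter per server that goes up when a request starts and down when it finishes. The class and method names below are hypothetical, and a real load balancer would also need to handle concurrency and timeouts.

```python
class LeastConnectionsBalancer:
    """Routes each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self._active = {server: 0 for server in servers}

    def acquire(self):
        # Ties go to the server listed first.
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server):
        # Call when the request or connection has finished.
        if self._active[server] > 0:
            self._active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
slow = lb.acquire()    # long-running request lands on app-1
quick = lb.acquire()   # app-2 now has the fewest connections
lb.release(quick)      # the short request finishes
print(lb.acquire())    # app-2 (app-1 is still busy with the slow request)
```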

Actionable Tips for Implementation

To effectively implement the Least Connections load balancing method, consider these strategies:

- Ideal for Varying Session Lengths: Use this algorithm when your application handles requests that take different amounts of time to complete. This ensures long-running tasks don’t cause new requests to queue up behind them.

- Always Pair with Health Checks: Just like with other methods, you must implement active health checks. This ensures that a server with zero connections due to a failure is not flooded with all new traffic.

- Monitor Connection Patterns: Regularly analyse your connection data to confirm the algorithm is distributing traffic as expected. Unexpected clustering could indicate issues with connection handling or server performance. For those working in containerised environments, this level of monitoring aligns with Kubernetes best practices.

- Tune Connection Timeouts: Configure appropriate server-side and load balancer connection timeouts. This prevents stale or idle connections from being counted, ensuring the “least connections” count accurately reflects the current, active load.

4. Weighted Least Connections Load Balancing

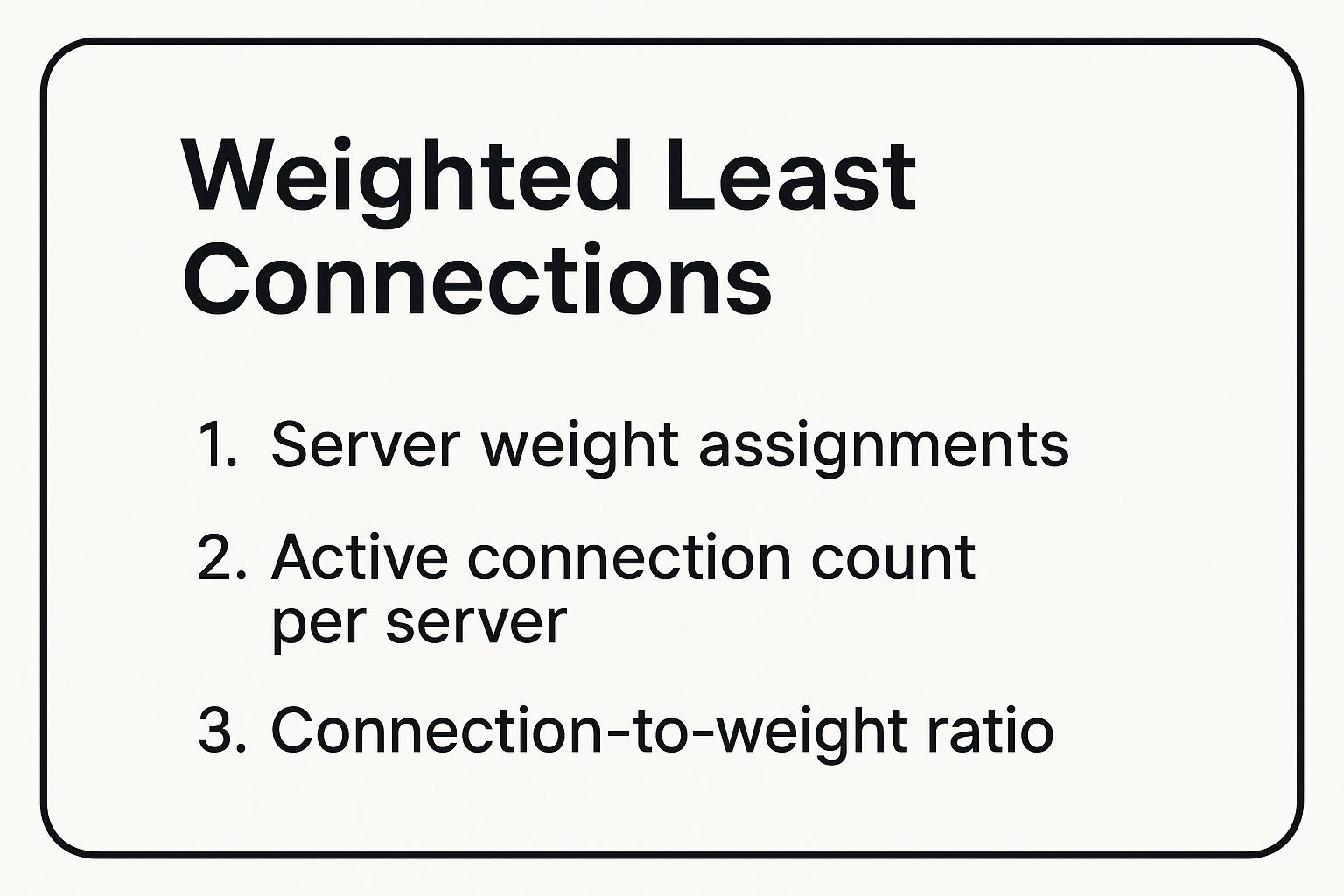

Taking a step beyond simpler algorithms, Weighted Least Connections introduces a more sophisticated layer of intelligence to traffic distribution. This method combines the server capacity awareness of Weighted Round Robin with the real-time load awareness of the Least Connections method. It directs new requests to the server that has the best ratio of active connections to its assigned weight, ensuring that powerful servers handle a proportionally larger load without being overwhelmed.

This dynamic approach makes it one of the most effective load balancing methods for heterogeneous server environments where traffic patterns can be unpredictable. Instead of just considering server capacity (weight) or current connections in isolation, it calculates (Number of Active Connections) / (Server Weight). The server with the lowest resulting value receives the next request, creating a highly efficient and responsive distribution system. This algorithm is a core feature in enterprise-grade load balancers from providers like F5 Networks, Citrix, and VMware.

This infographic breaks down the core components the algorithm uses for its calculations: server weight, active connections, and the resulting ratio that determines traffic flow.

As the data illustrates, the algorithm dynamically identifies the most available server by normalising the current connection count against each server’s capacity, ensuring optimal resource utilisation.

How It Works and When to Use It

The primary strength of Weighted Least Connections lies in its ability to provide the most balanced distribution of traffic in a server pool with varying capacities. It prevents smaller servers from being overloaded while ensuring that more powerful machines are not sitting idle. This makes it ideal for complex applications where requests have varying resource demands and server performance specifications are not uniform.

However, its implementation requires careful initial configuration and ongoing monitoring. Incorrectly assigned weights can lead to inefficient load distribution, negating the algorithm’s benefits. It is best suited for environments where you have a clear understanding of your servers’ relative performance capabilities and can dedicate resources to monitoring and adjusting these settings over time.
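
The ratio described earlier, active connections divided by server weight, translates directly into code. The function name and the sample figures below are illustrative assumptions.

```python
def pick_weighted_least_connections(active, weights):
    """Return the server with the lowest active-connections-to-weight ratio."""
    return min(active, key=lambda server: active[server] / weights[server])


active = {"large": 8, "small": 3}
weights = {"large": 4, "small": 1}
# Ratios: large = 8 / 4 = 2.0, small = 3 / 1 = 3.0
print(pick_weighted_least_connections(active, weights))  # large
```

Plain Least Connections would have picked `small` here because it has fewer connections; the weighting recognises that `large` still has more headroom relative to its capacity.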


Actionable Tips for Implementation

To effectively implement the Weighted Least Connections method, consider these strategic tips:

- Calibrate Weights Carefully: Base server weights on comprehensive performance testing, not just CPU cores or RAM. Consider factors like disk I/O, network throughput, and application-specific performance benchmarks to create an accurate reflection of each server’s true capacity.

- Monitor Performance and Connections: Continuously track both the number of active connections and key server performance metrics (CPU utilisation, memory usage). This dual focus helps you verify that the assigned weights are producing the desired load distribution.

- Adjust Weights Dynamically: Use performance data to make gradual adjustments to server weights over time. If a server consistently shows low utilisation despite its high weight, consider lowering its weight. Avoid sudden, drastic changes that could cause traffic instability.

- Implement Connection Pooling: For applications with high connection overhead, use connection pooling on the backend servers. This reduces the latency associated with establishing new connections and allows the load balancer to work more efficiently.

5. IP Hash Load Balancing

IP Hash load balancing applies a hash function to the client’s source IP address (some implementations also include the destination IP address) to determine which backend server receives the request. The key advantage of this method is its ability to create “sticky sessions” naturally, ensuring that requests from a specific user are consistently directed to the same server. This provides inherent session persistence without relying on application-level cookies or session data.

This technique is a deterministic way to map a client to a server. The load balancer calculates a hash value from the client’s IP address and uses this value to select a server from the available pool. This makes it a popular choice in load balancers like NGINX (with its ip_hash directive) and HAProxy (using the balance source algorithm), where maintaining session integrity is crucial for application functionality.

How It Works and When to Use It

The primary benefit of IP Hash is its simple yet effective approach to session affinity. It is an ideal solution for stateful applications where a user’s session data is stored on a specific server, such as e-commerce shopping carts, online gaming platforms, or any service requiring a persistent user login state. By consistently routing a client to the same machine, it prevents the loss of session context that would occur if their requests were scattered across different servers.

However, its effectiveness can be compromised by uneven traffic distribution. If many users access the service from behind a single corporate network address translation (NAT) gateway or a large ISP proxy, their requests will all originate from the same IP address. This can cause all of their traffic to be directed to a single server, creating a performance bottleneck and defeating the purpose of load balancing.
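
A minimal sketch of the mapping, assuming a simple hash-then-modulo scheme (real load balancers may hash differently); the function name and the addresses are illustrative.

```python
import hashlib


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to a server deterministically."""
    # hashlib is used instead of Python's built-in hash(), which is randomised
    # per process for strings and would break stickiness across restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first = pick_by_ip_hash("203.0.113.7", servers)
second = pick_by_ip_hash("203.0.113.7", servers)
print(first == second)  # True: the same client always lands on the same server
```

The same property explains the NAT problem: every user behind one public IP address produces the same hash and therefore the same server.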

Actionable Tips for Implementation

To implement the IP Hash method effectively, consider these practical recommendations:

- Analyse Client IP Distribution: Before committing to this method, monitor your traffic to ensure you have a diverse distribution of client IP addresses. Avoid this method if a significant portion of your users share a small number of public IPs.

- Plan for Server Maintenance: When a server is added to or removed from the pool, the hash mapping for many clients will change, disrupting their sessions. Use consistent hashing algorithms, if available in your load balancer, to minimise this disruption by remapping only the clients affected by the specific server change.

- Implement for Session Affinity Needs: Use IP Hash specifically when your application requires session persistence and you want to avoid the overhead of more complex session management solutions like a centralised session database or cookie-based persistence.

- Consider a Fallback Strategy: Combine IP Hash with health checks. If the designated server for a particular client IP fails, the load balancer should be configured to temporarily re-hash the request to a healthy server in the pool to maintain service availability.
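
To illustrate the consistent hashing tip, the sketch below places several virtual points per server on a hash ring and sends each client to the next point clockwise. It is a simplified model with hypothetical names (`ConsistentHashRing`, `replicas`), not the implementation used by any specific load balancer.

```python
import bisect
import hashlib


def _hash(key):
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    """Maps client IPs to servers so that pool changes move few clients."""

    def __init__(self, servers, replicas=100):
        # Each server gets `replicas` virtual points to even out the spread.
        self._ring = sorted(
            (_hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, client_ip):
        # First point clockwise from the client's hash, wrapping at the end.
        idx = bisect.bisect(self._points, _hash(client_ip)) % len(self._ring)
        return self._ring[idx][1]


clients = [f"198.51.100.{i}" for i in range(200)]
before = ConsistentHashRing(["app-1", "app-2", "app-3"])
after = ConsistentHashRing(["app-1", "app-2"])  # app-3 removed for maintenance
moved = [c for c in clients if before.pick(c) != after.pick(c)]
# Only clients that were on app-3 are remapped; everyone else keeps their server.
print(all(before.pick(c) == "app-3" for c in moved))  # True
```

With a plain hash-and-modulo scheme, removing one server from a pool of three would instead remap a large share of all clients.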

6. Least Response Time Load Balancing

Least Response Time is a sophisticated and dynamic load balancing method that directs traffic to the server that can handle it the fastest. It is an intelligent algorithm that considers two key real-time metrics: the server’s average response time and its number of active connections. By factoring in both current load and historical performance, it ensures requests are sent to the server best equipped to provide a swift reply.

This method goes beyond simple connection counts to assess actual server performance, making it one of the most effective load balancing methods for optimising user experience. Think of it as a taxi dispatcher sending a new ride not just to the closest driver, but to the closest driver who is also in the fastest-moving traffic. This predictive, performance-based routing is a core feature in advanced load balancers from providers like F5 Networks, Citrix, and AWS.

How It Works and When to Use It

The load balancer continuously measures how long each server takes to respond to health check probes and to process requests. This data, combined with the number of active sessions, creates a performance score for each server. The next incoming request is then forwarded to the server with the best score (lowest response time and fewest connections).

This algorithm is ideal for environments where user experience is paramount and server workloads can be unpredictable. It excels in applications where request complexity varies significantly, as it naturally routes simple, quick requests away from servers bogged down by resource-intensive tasks. However, it requires more computational overhead on the load balancer and can be susceptible to feedback loops if not configured carefully. For instance, a new, fast server could be flooded with traffic, causing its response time to degrade rapidly.
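
One way to picture the scoring is shown below. The formula (smoothed response time multiplied by active connections plus one) and the exponentially weighted moving average are assumptions chosen for illustration; vendors combine these signals in their own ways, and all names are hypothetical.

```python
class LeastResponseTimeBalancer:
    """Scores servers by smoothed response time and active connections."""

    def __init__(self, servers, alpha=0.2):
        self._alpha = alpha  # weight given to the newest sample
        self._avg_ms = {server: None for server in servers}
        self._active = {server: 0 for server in servers}

    def record(self, server, elapsed_ms):
        # Exponentially weighted moving average smooths out one-off spikes.
        previous = self._avg_ms[server]
        if previous is None:
            self._avg_ms[server] = float(elapsed_ms)
        else:
            self._avg_ms[server] = self._alpha * elapsed_ms + (1 - self._alpha) * previous

    def _score(self, server):
        # A server with no samples scores 0 and attracts traffic first,
        # which is the flooding risk noted above for new servers.
        average = self._avg_ms[server] or 0.0
        return average * (self._active[server] + 1)

    def acquire(self):
        server = min(self._active, key=self._score)
        self._active[server] += 1
        return server

    def release(self, server):
        if self._active[server] > 0:
            self._active[server] -= 1


lb = LeastResponseTimeBalancer(["fast", "slow"])
lb.record("fast", 30)
lb.record("slow", 100)
print([lb.acquire() for _ in range(4)])  # ['fast', 'fast', 'fast', 'slow']
```

The fast server wins until its growing connection count outweighs its latency advantage, at which point traffic spills over to the slower one.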

Actionable Tips for Implementation

To effectively deploy the Least Response Time method, consider these practical tips:

- Implement Smoothing Algorithms: Use an averaging or smoothing mechanism for response time data. This prevents the load balancer from making drastic routing changes based on a single slow or fast response, which adds stability to the system.

- Combine with Health Checks: While this method inherently uses response time as a health indicator, it should be supplemented with comprehensive health checks. A server might respond quickly to a simple ping but fail on a complex application-level task.

- Set Response Time Thresholds: Define upper and lower response time thresholds to prevent extreme routing decisions. This can stop a struggling server from being completely overwhelmed or a new server from being instantly flooded with all incoming traffic.

- Leverage Monitoring Tools: To properly measure and analyse server performance, integrate robust application performance monitoring tools. These systems provide the detailed metrics needed to fine-tune the load balancer’s behaviour and diagnose performance issues effectively.

7. Resource-Based Load Balancing

Resource-Based Load Balancing is one of the most intelligent and dynamic load balancing methods, making routing decisions based on the real-time health and utilisation of backend servers. Instead of relying on static rules, it queries servers for specific resource metrics, such as CPU load, memory consumption, disk I/O, and network bandwidth, to determine which one is best equipped to handle the next incoming request.

This method directs traffic to the server with the most available resources, ensuring optimal performance and preventing individual servers from becoming overwhelmed. It’s a sophisticated approach that treats the server pool as a dynamic environment, constantly adapting to changing loads. This level of intelligence is offered by advanced load balancers like F5 BIG-IP and Citrix ADC, and is conceptually similar to how Kubernetes schedules pods based on available node resources.

How It Works and When to Use It

The core strength of the Resource-Based method is its ability to make highly informed routing decisions, which leads to a more balanced and efficient use of infrastructure. It is particularly effective in environments with heterogeneous servers or where workloads are unpredictable and can cause sudden spikes in resource consumption on specific machines. By routing traffic away from busy servers, it prevents performance degradation and service failures.

However, this sophistication comes at a cost. The load balancer must constantly communicate with a monitoring agent on each server to gather resource data, which adds computational overhead and network traffic. If the monitoring is not configured correctly, it can lead to “route flapping,” where traffic is rapidly shifted between servers due to minor, temporary fluctuations in resource usage. This method is ideal for performance-critical applications where maximising resource efficiency is a top priority.

Actionable Tips for Implementation

To implement Resource-Based Load Balancing effectively, consider the following practical tips:

- Implement Comprehensive Monitoring: Install and configure monitoring agents on all backend servers to collect accurate, real-time data on CPU, memory, and network usage. Without reliable data, the load balancer cannot make effective decisions.

- Use Weighted Metrics: Instead of relying on a single metric, create a weighted combination of several. For example, you might assign 50% weight to CPU usage, 30% to memory, and 20% to network I/O to get a holistic view of server health.

- Set Sensible Thresholds: Define clear upper and lower resource utilisation thresholds to prevent excessive route changes. For instance, only redirect traffic when a server’s CPU load exceeds 80% for a sustained period, avoiding reactions to momentary spikes.

- Integrate Predictive Algorithms: For advanced environments, consider using predictive algorithms that analyse historical utilisation patterns to anticipate future resource needs. This allows the load balancer to proactively manage traffic before a server becomes overloaded. To dive deeper into this topic, you can learn more about advanced cloud resource management.
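
The weighted-metric and threshold tips can be sketched together. The 50/30/20 split and the 80% CPU threshold come from the examples above; the function names, the three-sample window, and the sample figures are assumptions, and utilisation values are expressed as fractions between 0 and 1.

```python
METRIC_WEIGHTS = {"cpu": 0.5, "memory": 0.3, "network": 0.2}


def load_score(metrics):
    """Combine utilisation metrics into one score; lower means more headroom."""
    return sum(METRIC_WEIGHTS[name] * metrics[name] for name in METRIC_WEIGHTS)


def pick_least_loaded(reported):
    """Choose the server whose agent reports the lowest combined score."""
    return min(reported, key=lambda server: load_score(reported[server]))


def sustained_overload(cpu_samples, threshold=0.80, window=3):
    """True only if the last `window` CPU samples all exceed the threshold."""
    recent = cpu_samples[-window:]
    return len(recent) == window and all(sample > threshold for sample in recent)


reported = {
    "app-1": {"cpu": 0.90, "memory": 0.40, "network": 0.20},  # score 0.61
    "app-2": {"cpu": 0.50, "memory": 0.60, "network": 0.30},  # score 0.49
}
print(pick_least_loaded(reported))             # app-2
print(sustained_overload([0.50, 0.95, 0.60]))  # False: a momentary spike
print(sustained_overload([0.85, 0.90, 0.82]))  # True: sustained load
```

Requiring several consecutive samples above the threshold is one simple way to avoid the route flapping described earlier.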

Load Balancing Methods Comparison Table

| Load Balancing Method | Implementation Complexity | Resource Requirements | Expected Outcomes | Key Advantages | Ideal Use Cases |
|---|---|---|---|---|---|
| Round Robin Load Balancing | Low: simple circular queue, no state tracking | Minimal: no real-time monitoring required | Equal request distribution, predictable pattern | Very simple, low overhead, works well for homogeneous servers | Stateless web apps with similar server specs |
| Weighted Round Robin Load Balancing | Low to Moderate: manual weight config needed | Moderate: needs weight setup and periodic review | Improved resource utilisation over Round Robin | Accounts for server capacity differences, easy to implement | Environments with varied server capacities |
| Least Connections Load Balancing | Moderate: requires real-time connection tracking | Moderate to High: connection state must be tracked | Better load distribution based on active connections | Adapts to varying request durations, prevents overload | Apps with variable or long-running connections |
| Weighted Least Connections Load Balancing | High: combines connection tracking and weight management | High: monitors connections and capacities | Adaptive, precise load distribution by capacity and current load | Balances load dynamically respecting weights, best utilisation | Complex environments with mixed capacities and dynamic connections |
| IP Hash Load Balancing | Low: hash function on client IP | Minimal: no dynamic metrics needed | Consistent server assignment per client IP | Natural session persistence, simple setup | Apps needing session affinity with diverse client IPs |
| Least Response Time Load Balancing | High: complex metrics collection and monitoring | High: requires continuous response time tracking | Optimal user experience through fast, responsive routing | Self-optimising, adapts in real time, excellent for performance-focused apps | Performance-critical apps prioritising low latency |
| Resource-Based Load Balancing | High: extensive monitoring and threshold tuning | Very High: monitors CPU, memory, disk, network | Optimised resource use, prevents overload | Comprehensive health awareness, adapts to resource-intensive workloads | Resource-heavy applications with dynamic computational demands |

Choosing the Right Method for Future-Proof Infrastructure

Selecting the optimal load balancing method isn’t just a technical decision; it’s a strategic one that directly impacts your application’s performance, resilience, and cost-effectiveness. The journey through various load balancing methods, from the straightforward simplicity of Round Robin to the intelligent awareness of Resource-Based algorithms, reveals a crucial truth: there is no universal “best” approach. The ideal choice is fundamentally tied to the unique context of your digital ecosystem.

The effectiveness of your strategy hinges on a deep understanding of your specific needs. A simple, stateless web application might perform perfectly well with the even distribution of Round Robin. In contrast, an e-commerce platform with stateful user sessions will benefit from the session persistence offered by IP Hash. Similarly, applications with varying server capabilities demand the nuanced distribution of Weighted Round Robin or Weighted Least Connections to prevent high-capacity servers from being underutilised.

From Theory to Practice: Actionable Next Steps

To move from understanding to implementation, your team should focus on a cycle of analysis, experimentation, and monitoring. The key is to transform theoretical knowledge about these load balancing methods into practical, performance-driving decisions.

Here are the actionable steps to take:

- Analyse Your Architecture: Begin by mapping your application’s architecture and traffic patterns. Do you have stateless or stateful services? Are your server resources homogenous or heterogeneous? Answering these questions will immediately narrow down your options.

- Establish Performance Baselines: Before making any changes, benchmark your current setup. Use monitoring tools to measure key metrics like server CPU utilisation, memory usage, and average response times. This data provides the crucial “before” picture for comparison.

- Experiment and A/B Test: Don’t be afraid to experiment. Implement a new algorithm, such as switching from Round Robin to Least Connections, in a controlled environment or for a small percentage of your traffic. Observe the impact on your baseline metrics.

- Continuously Monitor and Iterate: Load balancing is not a “set it and forget it” task. Your traffic patterns will change, your application will evolve, and your infrastructure will grow. Continuous monitoring is essential to ensure your chosen method remains the most effective, allowing you to adapt and optimise proactively.

Mastering these load balancing methods is a cornerstone of building a truly resilient and scalable infrastructure. It empowers you to deliver a consistently fast and reliable experience to your users, even during unpredictable traffic surges. This not only enhances user satisfaction but also optimises resource utilisation, directly impacting your operational costs and providing a competitive advantage in a crowded digital marketplace.
