Best Practices for Secure Team and Environment Management

The Real Challenge of Multi-Tenancy

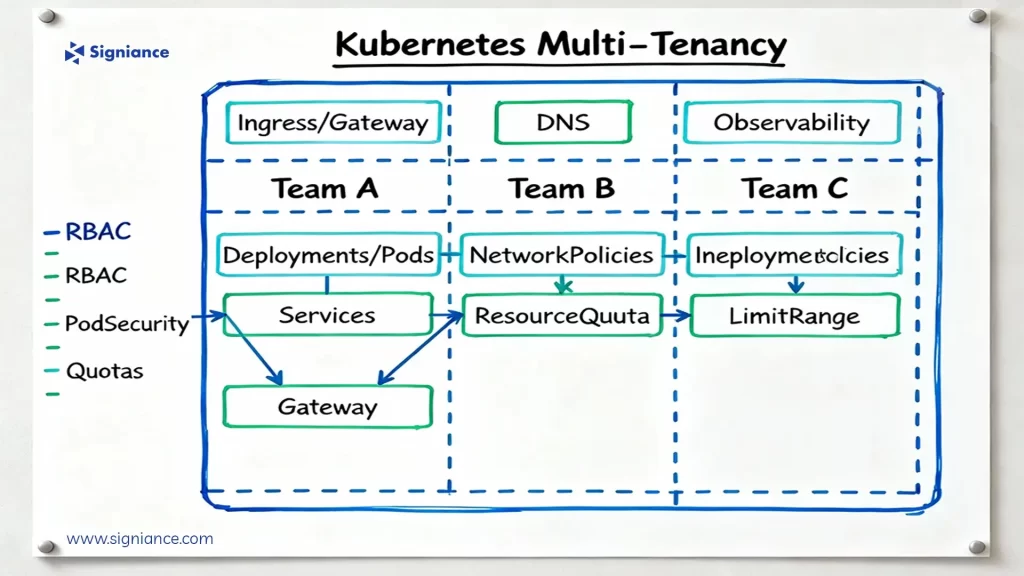

Managing Kubernetes at scale isn’t just about deploying workloads. It’s about orchestrating trust across multiple teams and different environments on one shared infrastructure.

In the early days, Kubernetes clusters were often dedicated to single applications or teams. But as enterprises embraced platform engineering and cost efficiency, multi-tenancy became the norm. The problem? When multiple tenants share resources, the risk of misconfiguration, noisy neighbors, and security lapses grows with every team that joins the cluster.

A robust multi-tenant design gives teams autonomy while maintaining strong governance, isolation, and performance consistency: the three pillars of sustainable Kubernetes growth.

Understanding Multi-Tenancy in Kubernetes

Multi-tenancy means multiple users, teams, or applications share the same Kubernetes environment, either within a single cluster or across multiple clusters.

Depending on trust boundaries, it comes in two main forms:

- Soft multi-tenancy: Internal teams that share infrastructure with moderate isolation (e.g., different product teams in one company).

- Hard multi-tenancy: Untrusted tenants, such as different customers in a SaaS environment, requiring strict isolation.

Why enterprises choose multi-tenancy:

- Lower operational cost, fewer clusters to manage.

- Unified governance and policy control.

- Simplified developer onboarding and automation.

But without structure, shared clusters can turn chaotic. The solution lies in architectural discipline.

Architectural Models for Multi-Tenancy

Different organizations adopt different tenancy models based on their scale, team trust level, and compliance needs.

a. Single Cluster, Multiple Namespaces

The most common approach for internal multi-tenancy.

- Each team gets its own namespace, with quotas and policies.

- Central platform team manages access and governance.

Pros: Cost-effective, easy to set up.

Cons: Weak isolation if RBAC and network policies aren’t strict.

b. Multiple Clusters per Tenant

Ideal for enterprises or SaaS providers needing strict isolation.

Each tenant runs in its own cluster, possibly managed by a central control plane.

Pros: Full isolation, independent scaling.

Cons: Higher infrastructure cost and management overhead.

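c. Virtual Clusters (vCluster)

An emerging hybrid model that combines efficiency with isolation. (Capsule, often mentioned alongside vCluster, is a namespace-based operator and fits model (a) rather than this one.)

Each tenant gets a virtual control plane (its own API server and controllers) that runs inside a shared host cluster, while pods are typically scheduled onto the host cluster’s nodes.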

Pros: Lightweight, isolated API access.

Cons: More moving parts to operate than plain namespaces.

In short, the three models are:

- Namespaces within one cluster.

- Multiple clusters per tenant.

- Virtual clusters layered within a shared infrastructure.

Security and Isolation Strategies

Security in multi-tenancy isn’t optional; it’s foundational. Kubernetes gives you the building blocks, but how you combine them determines how safe your tenants are.

Namespaces as Security Boundaries

Namespaces segment workloads logically and allow per-tenant configuration. A namespace on its own isolates nothing, so pair it with quotas, RBAC, and network policies. Use ResourceQuotas and LimitRanges to prevent overuse:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: tenant-a-quota
      namespace: tenant-a
    spec:
      hard:
        pods: "50"
        requests.cpu: "20"
        requests.memory: "40Gi"
        limits.cpu: "40"
        limits.memory: "80Gi"
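The quota above caps the namespace as a whole, but it says nothing about individual containers. A LimitRange fills that gap by supplying defaults and per-container ceilings. The sketch below is illustrative only: the object name and every value are assumptions, not recommendations.

```yaml
# Hypothetical defaults for tenant-a; tune the values to your workloads.
apiVersion: v1
kind: LimitRange
metadata:
  name: tenant-a-defaults      # assumed name
  namespace: tenant-a
spec:
  limits:
    - type: Container
      defaultRequest:          # applied when a container sets no requests
        cpu: 100m
        memory: 128Mi
      default:                 # applied when a container sets no limits
        cpu: 500m
        memory: 512Mi
      max:                     # upper bound for any single container
        cpu: "2"
        memory: 2Gi
```

The two objects work together: once a ResourceQuota tracks requests and limits, pods that omit them are rejected unless a LimitRange provides defaults.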

Role-Based Access Control (RBAC)

Granular access control limits what each tenant can do and reduces the risk of privilege escalation.

Example: allow developers to manage pods in their own namespace only:

    # Role: pod management rights scoped to the tenant-a namespace
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      namespace: tenant-a
      name: dev-role
    rules:
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["get", "list", "create", "delete"]
    ---
    # RoleBinding: grants dev-role to dev-user in tenant-a only
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: dev-binding
      namespace: tenant-a
    subjects:
      - kind: User
        name: dev-user
        apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: dev-role
      apiGroup: rbac.authorization.k8s.io
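Binding individual users does not scale well past a handful of people. One common alternative, sketched here, is to bind a group from your identity provider to one of the built-in ClusterRoles (`view`, `edit`, `admin`) inside the tenant namespace. The binding name and group name below are hypothetical.

```yaml
# Read-only access for a whole team, limited to tenant-a.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-a-viewers          # assumed name
  namespace: tenant-a
subjects:
  - kind: Group
    name: tenant-a-developers     # hypothetical group from your identity provider
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: view                      # built-in read-only role
  apiGroup: rbac.authorization.k8s.io
```

Because this is a RoleBinding rather than a ClusterRoleBinding, the permissions apply only inside `tenant-a`. You can verify the result with `kubectl auth can-i list pods --namespace tenant-a --as dev-user --as-group tenant-a-developers`.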

Network Policies

Prevent cross-namespace traffic leaks with NetworkPolicies, enforced by a CNI plugin such as Cilium or Calico:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-other-namespaces
      namespace: tenant-a
    spec:
      podSelector: {}
      ingress:
        - from:
            - podSelector: {}
      policyTypes:
        - Ingress

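This ensures that pods in tenant-a accept incoming traffic only from pods in the same namespace. Outbound traffic is not restricted by this policy; limiting it requires a separate Egress rule.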
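To cover the outbound direction as well, a companion policy can restrict egress. The sketch below assumes the cluster DNS service runs in `kube-system` and that namespaces carry the automatic `kubernetes.io/metadata.name` label; the policy name is made up for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: restrict-egress           # assumed name
  namespace: tenant-a
spec:
  podSelector: {}                 # applies to every pod in tenant-a
  policyTypes:
    - Egress
  egress:
    # Allow traffic to other pods in the same namespace.
    - to:
        - podSelector: {}
    # Allow DNS lookups against kube-system (assumed DNS location).
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Anything not listed, including external endpoints, is dropped, so tenants that call outside services need an additional rule for those destinations.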

Pod Security Standards (PSS)

Adopt the restricted profile to block privileged containers, host mounts, and other risky settings.

Integrate Vault or AWS Secrets Manager to manage credentials; never expose them in plain YAML.
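On clusters that use the built-in Pod Security Admission controller, the profile is selected with namespace labels. A minimal sketch, assuming one namespace per tenant:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a
  labels:
    # Reject pods that violate the restricted profile.
    pod-security.kubernetes.io/enforce: restricted
    pod-security.kubernetes.io/enforce-version: latest
    # Also surface violations as client warnings and audit annotations.
    pod-security.kubernetes.io/warn: restricted
    pod-security.kubernetes.io/audit: restricted
```

Rolling this out with only `warn` and `audit` first, then adding `enforce`, is a gentler path for tenants whose existing workloads may not yet comply.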

Resource Management and Fair Usage

Without controls, one tenant can starve another. Kubernetes offers native mechanisms to ensure fairness:

- ResourceQuotas to cap per-namespace resources.

- LimitRanges to define default container requests and limits.

- PriorityClasses so that critical workloads are scheduled ahead of, and can preempt, lower-priority jobs.

- Cluster Autoscaler and HPA/VPA for elasticity.

Together, they prevent “noisy neighbor” problems while ensuring optimal utilization.
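As a rough illustration of the PriorityClass mechanism from the list above, the two classes below separate batch work from critical services. The names and numeric values are assumptions chosen only to show the ordering.

```yaml
# Low priority: batch jobs wait their turn and never evict other pods.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: tenant-batch-low          # hypothetical name
value: 1000
globalDefault: false
preemptionPolicy: Never
description: "Batch jobs that can wait and must not preempt other pods."
---
# High priority: may preempt lower-priority pods when the cluster is full.
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: tenant-critical           # hypothetical name
value: 100000
globalDefault: false
description: "Latency-sensitive services that should be scheduled first."
```

Pods opt in by setting `priorityClassName` in their spec. A ResourceQuota can additionally be scoped by priority class if you want to cap how much high-priority capacity each tenant may claim.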

Observability and Monitoring

Visibility keeps multi-tenancy manageable. But remember, metrics and logs should never cross tenant boundaries.

Monitoring

Use Prometheus with label-based filtering per namespace. For enterprise setups, federate metrics via Thanos.

Visualize data in Grafana, but restrict dashboards using role-based filters.
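One way to make per-namespace filtering cheap is to pre-aggregate usage by namespace with recording rules. This sketch assumes Prometheus scrapes the kubelet's cAdvisor metrics with a `namespace` label attached; the group and rule names are illustrative.

```yaml
groups:
  - name: tenant-usage            # assumed group name
    rules:
      # CPU cores consumed per namespace, averaged over 5 minutes.
      - record: namespace:container_cpu_usage_seconds:rate5m
        expr: sum by (namespace) (rate(container_cpu_usage_seconds_total{container!=""}[5m]))
      # Working-set memory per namespace.
      - record: namespace:container_memory_working_set_bytes:sum
        expr: sum by (namespace) (container_memory_working_set_bytes{container!=""})
```

Dashboards can then query the recorded series with a `namespace="tenant-a"` matcher instead of re-aggregating raw container metrics on every load.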

Tracing

Deploy OpenTelemetry to track requests across shared services. Tag traces with tenant metadata to isolate insights.
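Tenant tagging can be done in the OpenTelemetry Collector rather than in each application. The configuration below is a sketch under several assumptions: a namespace-per-tenant layout, a Collector distribution that includes the `k8sattributes` processor (it ships in the contrib build), RBAC that lets the Collector read pod metadata, and a made-up attribute name `tenant.id`.

```yaml
receivers:
  otlp:
    protocols:
      grpc:
      http:

processors:
  # Look up the originating pod and attach its namespace to the resource.
  k8sattributes:
    extract:
      metadata:
        - k8s.namespace.name
  # Copy the namespace into a tenant attribute (hypothetical name).
  resource:
    attributes:
      - key: tenant.id
        from_attribute: k8s.namespace.name
        action: insert

exporters:
  debug:                          # replace with your tracing backend

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [k8sattributes, resource]
      exporters: [debug]
```

With the attribute in place, the tracing backend can filter or partition traces per tenant without trusting values supplied by the workloads themselves.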

Logging

Adopt EFK (Elasticsearch, Fluentd, Kibana) with per-tenant indices, or Loki + Grafana with per-tenant IDs.

Each team accesses only its logs, maintaining both privacy and clarity.

Automation and Governance

As clusters grow, automation becomes the difference between order and chaos.

- GitOps (ArgoCD, FluxCD): Manage all tenant namespaces, quotas, and policies declaratively.

- OPA Gatekeeper / Kyverno: Enforce compliance policies like “No privileged pods” or “Must define resource limits.”

- Terraform / Crossplane: Automate tenant onboarding, from namespace creation to RBAC setup.
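To make the GitOps item above concrete, here is a sketch of an Argo CD AppProject that confines one tenant to its own repositories and namespace. It assumes Argo CD runs in the `argocd` namespace; the repository URL is a placeholder.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: tenant-a
  namespace: argocd               # assumes the default Argo CD namespace
spec:
  description: Applications owned by tenant A
  # Only these repositories may be deployed through this project.
  sourceRepos:
    - https://git.example.com/tenant-a/*   # placeholder URL
  # Only this namespace on the local cluster may be targeted.
  destinations:
    - server: https://kubernetes.default.svc
      namespace: tenant-a
  # Tenants must not change the guardrails the platform team owns.
  # (No clusterResourceWhitelist is set, so cluster-scoped resources stay denied.)
  namespaceResourceBlacklist:
    - group: ""
      kind: ResourceQuota
    - group: ""
      kind: LimitRange
    - group: networking.k8s.io
      kind: NetworkPolicy
```

The platform team keeps quotas and policies in its own repository, while tenants deploy only application resources through their project.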

Example Policy (Kyverno):

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: enforce-requests-limits
    spec:
      # Enforce blocks non-compliant pods; Audit only reports them
      validationFailureAction: Enforce
      rules:
        - name: check-resources
          match:
            any:
              - resources:
                  kinds:
                    - Pod
          validate:
            message: "All containers must have CPU and memory requests/limits defined."
            pattern:
              spec:
                containers:
                  - resources:
                      requests:
                        memory: "?*"
                        cpu: "?*"
                      limits:
                        memory: "?*"
                        cpu: "?*"

Governance ensures every tenant’s workload adheres to platform rules automatically.
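The other rule mentioned earlier, “No privileged pods,” can be expressed in the same style. This is a sketch modeled on the pattern syntax Kyverno uses for optional fields (`=(...)` means "if present, must match"); field names for failure handling vary between Kyverno versions, so check them against the release you run.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-privileged-containers
spec:
  validationFailureAction: Enforce
  background: true                # also report on existing pods
  rules:
    - name: privileged-containers
      match:
        any:
          - resources:
              kinds:
                - Pod
      validate:
        message: "Privileged containers are not allowed."
        pattern:
          spec:
            # If initContainers exist, none may set privileged: true.
            =(initContainers):
              - =(securityContext):
                  =(privileged): "false"
            # Same check for regular containers.
            containers:
              - =(securityContext):
                  =(privileged): "false"
```

Starting with `Audit` instead of `Enforce` lets you see which tenant workloads would be blocked before the rule begins rejecting them.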

Tools & Frameworks for Multi-Tenancy

| Tool | Purpose | Benefit |
| --- | --- | --- |
| Capsule | Multi-tenancy operator | Easy namespace-based isolation |
| vCluster | Virtualized clusters | Separate API control planes |
| KubeFed | Cluster federation | Multi-region tenant sync |
| Kyverno / OPA | Policy enforcement | Governance automation |
| Thanos / Loki | Observability | Tenant-aware metrics and logs |
| Rancher / Lens | Cluster visibility | Simplified management UI |

These tools make it possible to blend flexibility and security at scale.

Real-World Use Case

Scenario:

A SaaS provider hosting analytics dashboards for 30+ enterprise clients.

Challenge:

Each client needed isolation for workloads, logging, and metrics while sharing infrastructure for cost optimization.

Implementation:

- Namespace per tenant with RBAC and network policies.

- Kyverno for security policy enforcement.

- Prometheus + Thanos for monitoring.

- Automated onboarding via Terraform + ArgoCD.

Result:

- 60% reduction in cluster sprawl.

- 40% improvement in resource efficiency.

- Tenant isolation that met the provider’s ISO 27001 compliance requirements.

Common Pitfalls to Avoid

- Giving too many users cluster-admin roles.

- Delaying network policies until “later.”

- Ignoring per-namespace quotas.

- Logging everything in one index, which is a compliance nightmare.

- Treating observability as a nice-to-have.

Kubernetes doesn’t forgive sloppy architecture, and multi-tenancy multiplies the impact.

The Future of Multi-Tenancy

Kubernetes multi-tenancy is evolving beyond isolation; it’s becoming intelligent.

- AI-driven scaling: Predict workloads and rebalance capacity automatically.

- Cross-cloud orchestration: Unified governance across AWS, Azure, GCP.

- Self-healing governance: Detect and fix policy drifts autonomously.

- Platform engineering maturity: Self-service clusters where developers onboard themselves, securely.

In the near future, the smartest clusters will manage tenants dynamically, not just statically configure them.

Conclusion

Multi-tenancy isn’t just a cost-saving strategy. It’s the foundation for scalable, secure collaboration across modern cloud-native organizations.

With the right mix of isolation, governance, observability, and automation, Kubernetes can become a shared platform that fosters innovation, not friction.

At Signiance, we help enterprises build Kubernetes environments that balance freedom with control, ensuring security, performance, and cost-efficiency stay aligned. Want to make your Kubernetes environment multi-tenant ready?

Let’s talk about building a secure, scalable strategy tailored for your workloads.