When we talk about developing cloud applications, we’re really talking about a fundamental shift in how we build software. It’s no longer about racking physical servers in a data centre. Instead, it’s about crafting applications specifically to thrive on cloud platforms, using their native services and tools to build something truly scalable, resilient, and cost-effective. This isn’t just a tech trend; it’s a strategic move towards greater business agility and innovation.

Why Cloud Development Is a Game Changer

Let’s be honest, the initial hype around “the cloud” has settled. Now, understanding how to build for it is a non-negotiable skill for any business that wants to stay competitive. It’s a complete departure from the old ways of building software, leaving behind rigid, slow development cycles for a far more dynamic and responsive approach.

From Monolith to Modern

Think back to traditional software development. We often built what’s called a monolith: a single, massive application where every feature was bundled into one enormous codebase. If you needed to update a simple payment feature, you had to rebuild and redeploy the entire thing. It was a slow, high-risk process that actively discouraged innovation.

Cloud development flips that script entirely, favouring modern architectures like microservices or serverless. Instead of that one giant block of code, the application is broken down into a collection of smaller, independent services. Each service is laser-focused on a single business function, like user authentication or managing inventory, and can be developed, tested, and deployed all on its own.

The real power here is decoupling. When services are independent, a failure in one doesn’t bring down the entire system. This inherent fault tolerance is a massive advantage over monolithic designs, leading to more resilient and reliable applications.

The Business Drivers Behind Cloud Adoption

This move to the cloud isn’t just a technical choice made by developers; it’s driven by solid economic and operational gains. Businesses today can’t afford to wait months for a new feature to launch. The cloud enables rapid iteration, empowering companies to react to market shifts almost in real-time.

You can see this reflected in the market’s explosive growth. In India, for instance, the cloud computing market is booming, spurred on by government support and a wave of digital transformation. Projections showed the market reaching around $17.87 billion in 2024. With a strong compound annual growth rate, it’s expected to surge towards $43.66 billion by 2029. You can dive deeper into these figures in a recent analysis of cloud adoption trends.
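To put a number on “strong”, you can work out the growth rate implied by those two projections directly: CAGR = (end value / start value)^(1 / years) - 1. The short Python sketch below does that arithmetic. It assumes the 2024 and 2029 figures are exactly five years apart, and the function name is just for illustration.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market projections quoted above, in billions of US dollars.
rate = cagr(start_value=17.87, end_value=43.66, years=2029 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

With these inputs, it prints an implied CAGR of about 19.6%. In other words, the market would have to grow by roughly a fifth every year to reach the 2029 projection.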

This financial momentum highlights a critical point: cloud skills aren’t just a bonus on a CV anymore; they’re essential for economic competitiveness.

This new way of building delivers some major wins:

- Scalability: Applications can automatically adjust resources based on demand. You pay only for what you actually use, not for idle capacity.

- Agility: Small, autonomous teams can work on different services at the same time, dramatically shrinking development timelines.

- Cost-Efficiency: Forget about the huge upfront cost of buying and maintaining physical hardware.

- Global Reach: It becomes straightforward to deploy your application in data centres around the world, getting it closer to your users for lower latency and a better experience.
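In practice, “automatically adjust resources” usually comes down to a simple proportional rule. The Kubernetes Horizontal Pod Autoscaler is one concrete example. Its documented core calculation is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Here is a minimal Python sketch of that rule with illustrative min/max bounds. It omits the tolerance band and stabilisation windows that the real controller adds.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Target-tracking rule: scale replicas in proportion to how far the observed
    metric is from its target, then clamp the result to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# Traffic drops: 6 replicas averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(6, 20, 60))
```

The first call returns 6 and the second returns 2. Scaling back in when load drops is what delivers the “pay only for what you use” benefit: the four replicas removed in the second case simply stop costing money.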

Ultimately, developing for the cloud means you’re building for the future. It gives you the architectural runway to innovate faster, scale smarter, and create robust systems that can handle whatever comes their way.

Designing a Future-Proof Cloud Architecture

Any great cloud application stands on the shoulders of its architecture. Without a solid blueprint, even the most elegant code can falter under real-world pressure, leading to performance bottlenecks and costly, painful rewrites down the line. Designing this blueprint isn’t about abstract theory; it’s about making concrete decisions that will define your app’s performance and long-term health.

The real goal here is to build something that’s both resilient and adaptable. You’re not trying to perfectly predict the future. Instead, you’re creating a system that can absorb unexpected shocks and evolve without a complete overhaul every time business priorities shift. It’s this proactive thinking that separates a lasting cloud application from a temporary one.

Deconstructing the Application: A Real-World Scenario

Let’s get practical. Imagine you’re tasked with building a new e-commerce platform. The old-school, monolithic approach would be to lump everything (user profiles, the product catalogue, shopping cart logic, and payment processing) into one massive, interconnected application. This might feel simpler at the start, but it quickly becomes a huge liability. A minor bug in the product recommendation engine could suddenly bring down the entire checkout process.

A modern cloud mindset flips this on its head. We start by breaking the application down into distinct, independent business functions.

For our e-commerce platform, that decomposition might look something like this:

- User Service: Manages everything related to user accounts, from data and authentication to profiles.

- Product Service: The source of truth for the product catalogue, inventory levels, and search.

- Order Service: Handles all new orders, tracks order history, and communicates with fulfilment systems.

- Payment Gateway Service: Securely integrates with payment providers to process transactions.

Each of these becomes its own deployable unit. This separation is the cornerstone of a future-proof design. If the Payment Gateway Service has a temporary outage, users can still browse products and add items to their cart. The failure is neatly contained, drastically minimising its impact on the customer’s experience.
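In code, that containment mostly comes down to the Order Service treating the payment call as something that can fail. The sketch below is a minimal Python illustration with some obvious simplifications. The service functions are hypothetical in-process stand-ins for what would really be network calls, and the outage is simulated.

```python
class ServiceUnavailable(Exception):
    """Raised when a downstream service cannot be reached."""


def charge_via_payment_gateway(order_id: str, amount: float) -> str:
    # Hypothetical stand-in for a network call to the Payment Gateway Service.
    # Here it simulates an outage.
    raise ServiceUnavailable("payment gateway timed out")


def browse_products() -> list[str]:
    # Product Service: no dependency on payments, so it keeps working.
    return ["kettle", "toaster"]


def checkout(order_id: str, amount: float, charge=charge_via_payment_gateway) -> dict:
    """Order Service: contain a payment failure instead of crashing the request."""
    try:
        receipt = charge(order_id, amount)
    except ServiceUnavailable:
        # Keep the order so payment can be retried later; the rest of the site stays up.
        return {"order_id": order_id, "status": "pending_payment"}
    return {"order_id": order_id, "status": "paid", "receipt": receipt}


print(browse_products())
print(checkout("order-42", 24.99))
```

Browsing never touches the payment path. Checkout records the order as pending rather than letting the error surface to the customer.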

Choosing Your Architectural Pattern

Once you’ve identified these separate functions, you have to decide how to build and run them. This is where you pick an architectural pattern that fits your specific needs. There isn’t one “best” answer; the right choice always depends on the trade-offs you’re willing to make between control, complexity, and operational effort.

| Architectural Pattern | Best For | Key Consideration |
|---|---|---|
| Microservices | Complex applications with distinct, evolving business domains. | Needs mature DevOps practices to manage distributed complexity. |
| Serverless (FaaS) | Event-driven, short-lived tasks like API backends or data processing. | Perfect for unpredictable traffic, but has execution time limits. |
| Containerisation | Apps needing portability and consistent dev/test/prod environments. | Gives a great balance of control and abstraction. |

For our e-commerce example, a hybrid approach is often the most effective. The core User and Product services—which need to be stable and always available—might be perfect candidates for containerised microservices running on a platform like Google Kubernetes Engine (GKE).

On the other hand, a task like processing newly uploaded product images is a fantastic fit for a serverless function on AWS Lambda. It only needs to run when a new image appears, making it incredibly cost-effective. This mix-and-match strategy lets you use the right tool for the job, optimising for both performance and cost.
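Here is a sketch of what that image-processing function might look like: a Python Lambda handler that reacts to S3 “object created” notifications. The Records/s3/bucket/object layout follows the event format S3 sends to Lambda. The thumbnail naming scheme is hypothetical, and the actual resize-and-upload work is left out.

```python
import urllib.parse


def thumbnail_key(key: str) -> str:
    """Hypothetical naming scheme: products/kettle.jpg -> thumbnails/products/kettle.jpg."""
    return f"thumbnails/{key}"


def handler(event, context):
    """Entry point that Lambda invokes with a batch of S3 notification records."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # A real function would download the image (e.g. with boto3), resize it,
        # and upload the result; this sketch only works out where it would go.
        processed.append((bucket, thumbnail_key(key)))
    return {"processed": processed}
```

One design note: if the function writes its output back to the bucket that triggers it, scope the trigger to the upload prefix (or use a separate output bucket). Otherwise, the function will keep re-invoking itself.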

The essence of good cloud architecture is loose coupling and high cohesion. Services should be loosely coupled, with minimal dependency on each other. At the same time, each service should have high cohesion, meaning its internal parts are all focused on a single, clear purpose.

This philosophy of breaking down systems isn’t new. In India, for example, cloud development practices have long been shaped by the need to manage software complexity while optimising resource usage. The early adoption of concepts like Service-Oriented Architecture (SOA) laid the groundwork for how we now build scalable cloud apps.

Of course, building a robust system means following certain foundational rules. For a deeper look, our guide on essential cloud architecture design principles is a great resource for ensuring your design is both scalable and secure. Ultimately, the architectural choices you make at the beginning are the most critical, directly impacting your ability to innovate and grow in the future.

Choosing Your Cloud Services and Tools

Alright, you’ve got your architectural blueprint. Now comes the part that can feel like walking into a massive supermarket without a shopping list: picking your cloud services and tools. With giants like AWS, Azure, and Google Cloud offering hundreds of options, it’s easy to get overwhelmed.

Let’s be clear. The goal isn’t to find the single “best” service. It’s about finding the right service for your specific application, your team’s existing skills, and, of course, your budget. This is a constant balancing act. Every choice involves trade-offs between cost and performance, or between granular control and ease of management. Getting this wrong can lead to surprise bills or an application that buckles under pressure, effectively wasting all that great architectural planning.

The scale of this ecosystem is staggering. The Indian public cloud services market is a behemoth. In the first half of 2024 alone, the combined value of Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS) hit $5.2 billion. This isn’t just a big number; it represents a vast playground of powerful tools, which you can read more about in this market growth analysis.

The Four Pillars of Cloud Services

To make sense of it all, I find it helpful to group the hundreds of services into four core categories. If you get a handle on these, you’re well on your way.

- Compute: This is the engine. It’s the processing power that runs your code.

- Storage: This is your application’s filing cabinet, holding everything from user photos to critical backups.

- Databases: These are sophisticated systems built to store, organise, and fetch your data at speed.

- Networking: This is the plumbing that connects all your services to each other and to the internet.

Let’s dig into the common options in each category, using some real-world examples to highlight the trade-offs you’ll be making.

Choosing Your Compute Service

The compute service you pick has a massive impact on your day-to-day operations and your monthly bill. It’s one of the most fundamental decisions you’ll make.

A classic dilemma I see teams face is choosing between a virtual machine (VM) like Amazon EC2 and a serverless platform like AWS Lambda. With EC2, you get a full-fledged virtual server. You control the operating system, the runtime, everything. This is perfect if you’re lifting-and-shifting a legacy app to the cloud or have long-running background jobs that need a persistent home.

On the other hand, AWS Lambda is all about “just run my code”. You upload your function, and it executes in response to a trigger—an API request, a new file in storage, you name it. There are no servers to patch or manage, and you only pay for the exact milliseconds your code is running. For an API backend with unpredictable traffic, Lambda can be dramatically cheaper.
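To make “just run my code” concrete, here is a minimal Python handler for an API backend. It assumes the function sits behind an API Gateway REST API with Lambda proxy integration. That integration passes path parameters in the event and expects a statusCode/headers/body response. The product data and the /products/{id} route are hypothetical.

```python
import json

# Hypothetical in-memory data; a real backend would query a database.
PRODUCTS = {"p-1": {"id": "p-1", "name": "Kettle", "price": 24.99}}


def _response(status: int, payload: dict) -> dict:
    # Proxy integrations expect the body as a string, so the payload is JSON-encoded.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Lambda entry point for GET /products/{id}."""
    product_id = (event.get("pathParameters") or {}).get("id")
    product = PRODUCTS.get(product_id)
    if product is None:
        return _response(404, {"error": "product not found"})
    return _response(200, product)
```

Notice what's missing: there's no web server, no port binding and no process management. The platform handles all of that and invokes `handler` once per request.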

Key Takeaway: The choice really boils down to control versus convenience. If you need fine-grained control over the server environment, a VM is your friend. If you’d rather forget about servers entirely and just pay for what you use, go serverless.

Picking the Right Storage and Database

When it comes to storage and databases, the nature of your data dictates the best tool for the job.

For things like user-uploaded images, videos, or backups, object storage is the undisputed king. Services like Amazon S3 or Azure Blob Storage are built for this. They’re incredibly cheap, durable, and can scale to handle practically infinite amounts of this kind of unstructured data.

Database selection, however, is a bit more nuanced. The main fork in the road is between a traditional relational database (SQL) and a more modern NoSQL database.

- Relational Databases (SQL): Think Azure SQL or Amazon RDS. These are ideal for structured data with clear relationships, like customer profiles or e-commerce orders. They enforce strict schemas and data integrity, which is vital when consistency is non-negotiable.

- NoSQL Databases: Services like Azure Cosmos DB or Amazon DynamoDB are designed for chaos, in a good way. They handle unstructured or semi-structured data with ease and offer incredible flexibility and horizontal scaling. This makes them a perfect match for IoT sensor data, social media feeds, or gaming leaderboards where the data structure might change over time.

For our e-commerce app, a hybrid approach often works best. We could use Azure SQL for the rock-solid transactional data (orders, customers) and something like Cosmos DB for the product catalogue, where different products might have a wide variety of attributes. It’s all about using the right tool for the right job.
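To illustrate the difference in behaviour, the sketch below uses Python's built-in sqlite3 module as a local stand-in for a relational service like Azure SQL. Plain dictionaries stand in for documents in a NoSQL container. This is not Azure SDK code. The point is the contrast between an enforced schema and flexible documents, and the table and product data are made up.

```python
import json
import sqlite3

# Relational side (stand-in for Azure SQL): a fixed schema with enforced integrity.
db = sqlite3.connect(":memory:")
db.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked
db.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total       REAL NOT NULL CHECK (total >= 0)
);
""")
db.execute("INSERT INTO customers (id, email) VALUES (1, 'asha@example.com')")
db.execute("INSERT INTO orders (customer_id, total) VALUES (1, 49.98)")
db.commit()

try:
    # An order for a customer that doesn't exist is rejected outright.
    db.execute("INSERT INTO orders (customer_id, total) VALUES (99, 10.0)")
except sqlite3.IntegrityError as exc:
    print("Rejected:", exc)

# Document side (stand-in for a NoSQL container): each product is a JSON-style
# document, and products can carry completely different attributes.
catalogue = {
    "p-1": {"id": "p-1", "type": "kettle", "capacity_litres": 1.7, "colours": ["red", "black"]},
    "p-2": {"id": "p-2", "type": "t-shirt", "sizes": ["S", "M", "L"], "material": "cotton"},
}
print(json.dumps(catalogue["p-2"]))
```

The relational side refuses an order for a customer who doesn't exist, which is exactly the integrity guarantee you want for transactions. The catalogue side happily stores a kettle and a t-shirt that share almost no attributes.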

To help you place these services into a broader context, here’s a quick look at the main cloud service models.

Choosing Your Cloud Service Model

This table breaks down the three primary cloud service models (IaaS, PaaS, and SaaS) to help you select the right fit based on control, ease of use, and management needs.

| Service Model | What You Manage | Best For | Key Benefit |
|---|---|---|---|
| IaaS (e.g., EC2) | VMs, OS, Runtime, Middleware, Application, Data | Teams needing maximum control and migrating existing apps. | High Flexibility |
| PaaS (e.g., Azure App Service) | Application, Data | Developers who want to focus on code, not infrastructure. | Rapid Development |
| SaaS (e.g., Microsoft 365) | Nothing (use the software) | Businesses needing out-of-the-box solutions. | Zero Management |

Ultimately, choosing your services is about a strategic alignment between the technical capabilities available and your real-world business objectives. By thoughtfully considering the trade-offs within these core areas, you’ll lay a solid foundation for building a cloud application that is efficient, scalable, and cost-effective.

Building an Effective DevOps Pipeline

Think of your cloud architecture as the blueprint for your application. If that’s the case, your DevOps pipeline is the automated, high-tech assembly line that actually builds it. For any team serious about developing modern cloud applications, a solid Continuous Integration and Continuous Deployment (CI/CD) pipeline isn’t just a luxury; it’s the core engine that drives speed, quality, and reliability. It’s what takes code from a developer’s laptop and turns it into a running application, all with minimal human touch.

The whole point is to automate everything that happens after a developer commits their code. This gets rid of those manual hand-offs between teams, which are notorious for causing errors and slowing everything down. When you codify the entire build and release process, you create a path to production that’s repeatable, predictable, and, most importantly, trustworthy.

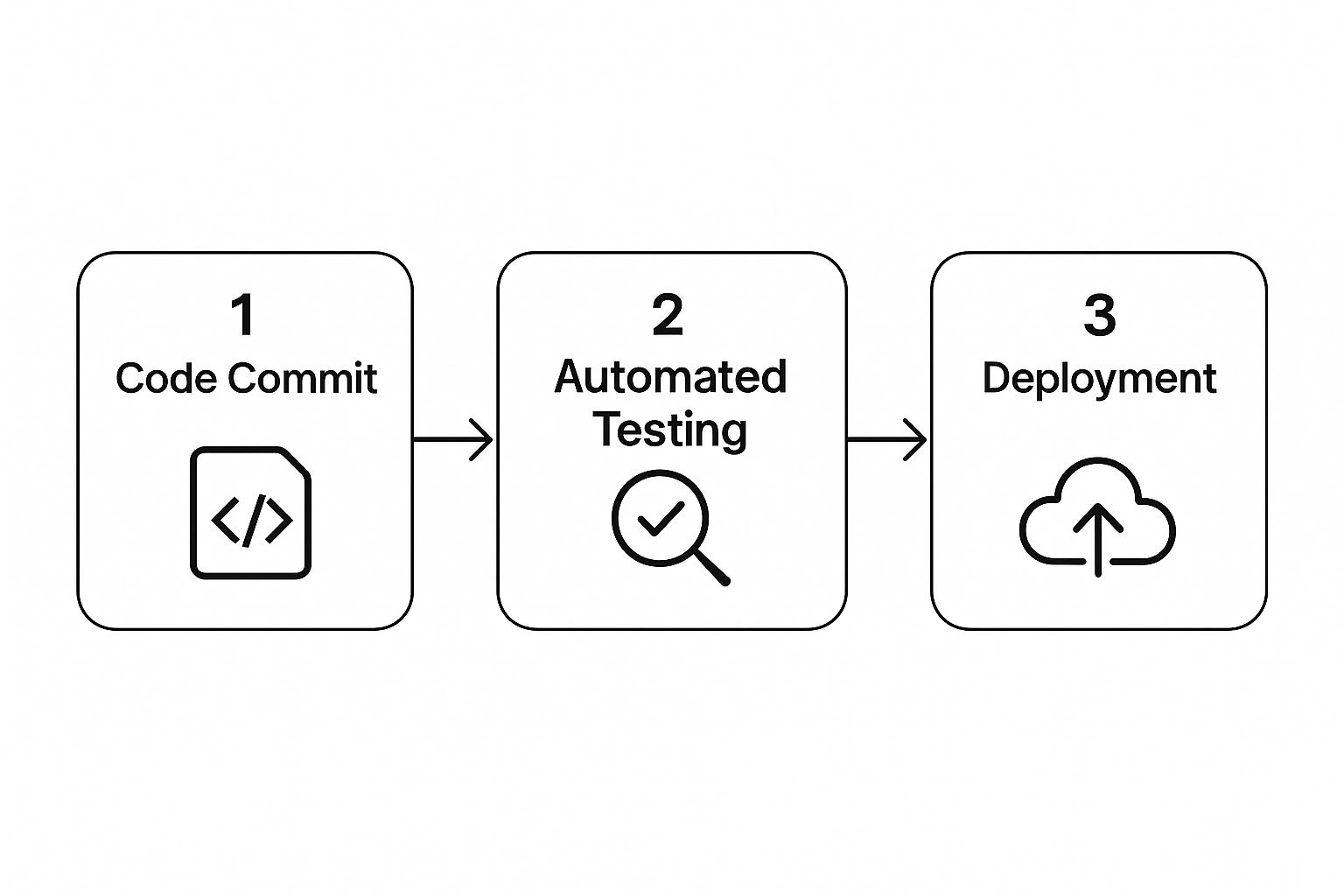

This diagram shows the basic idea in action: a developer’s code commit kicks off automated tests, and once they pass, the code is deployed. Simple, but powerful.

You can see how each stage acts as a quality gate. This ensures only validated code moves forward, which fundamentally improves your software’s health right from the start.

The Stages of a Practical CI/CD Pipeline

So, how do you build one of these pipelines? It’s all about connecting several key stages. The specific tools you choose will vary based on your tech stack and cloud provider, but the underlying principles are universal. I like to think of it as a series of checkpoints your code has to clear before it can get to your users.

A typical, real-world pipeline usually breaks down into these functions:

- Source Control Management (SCM): Everything starts here. Using a system like Git, developers commit their code to a central repository (like GitHub or GitLab). This repository becomes the undisputed single source of truth for your entire application.

- Continuous Integration (CI): The moment new code is pushed, a CI server springs into action. It automatically builds the application and runs a whole suite of tests (think unit and integration tests) to catch bugs as early as possible.

- Artefact Creation: Once all the tests pass, the pipeline packages your application into something that can be deployed. For cloud-native apps, this almost always means building a Docker container image and pushing it to a secure registry like Docker Hub, Amazon ECR, or Azure Container Registry.

- Continuous Deployment (CD): This is the final leg of the journey. The pipeline automatically takes that new container image and deploys it to your various environments, from a staging server for final checks all the way to production.
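Whatever tool you use, the control flow behind these stages is the same: run them in order and stop at the first failure, so nothing unverified reaches the next environment. A minimal Python sketch of that idea follows. The stage names are illustrative, and each lambda is a hypothetical stand-in for real tooling such as a test runner or a `docker build`.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure; return the stages that passed."""
    passed = []
    for name, step in stages:
        if not step():
            print(f"Stage '{name}' failed; later stages are skipped.")
            break
        passed.append(name)
    return passed


# Each lambda is a hypothetical stand-in for real tooling.
stages = [
    ("checkout", lambda: True),
    ("unit-and-integration-tests", lambda: True),
    ("build-container-image", lambda: True),
    ("deploy-to-staging", lambda: False),  # simulate a failed staging deployment
    ("deploy-to-production", lambda: True),
]
print(run_pipeline(stages))
```

Because staging fails, production is never touched. That is the quality-gate behaviour described above.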

Implementing Your Pipeline with Code

These days, the best practice is overwhelmingly a “pipeline-as-code” approach. Instead of clicking around in a user interface to set up your pipeline, you define the entire process in a simple text file that lives right in the same repository as your application code. This is a game-changer because it makes your pipeline versionable, easy to reuse, and far simpler to manage.

Take GitHub Actions, for example. You’d create a YAML file inside a .github/workflows directory in your repo. A basic workflow that builds a Docker image (pushing it to a registry would be one more step) might look something like this:

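```yaml
name: Build and Push Docker Image

# Run this workflow on every push to the main branch.
on:
  push:
    branches: [ "main" ]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      # Fetch the repository contents onto the runner.
      - uses: actions/checkout@v4
      # Build an image from the Dockerfile at the repository root.
      - name: Build the Docker image
        run: docker build . --file Dockerfile --tag my-image-name:latest
```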

This simple file tells GitHub to run a job every time code is pushed to the main branch. The job checks out the latest code and then runs a standard docker build command. It’s a straightforward example, but you can easily expand it with more steps for running advanced tests, scanning for security flaws, and deploying to your cloud provider.

The real power of pipeline-as-code is its transparency and reproducibility. Anyone on the team can see exactly how the application is built and deployed. This empowers developers and removes the “it works on my machine” problem for good.

Adding Quality and Deployment Gates

A truly great pipeline does more than just stitch commands together; it actively enforces quality. This is where you introduce “gates”: automated checks that must pass before the code is allowed to proceed to the next stage.

Here are a few essential gates I always recommend building into a pipeline:

- Static Code Analysis: Tools like SonarQube can automatically scan your code for bugs, code smells, and security vulnerabilities before it’s even built.

- Security Scans: It’s critical to scan your container images for known vulnerabilities before they get deployed. Don’t ship a security hole.

- Approval Gates: For sensitive environments like production, you can add a manual approval step. This notifies key team members (like a lead engineer or product manager) to give a final green light before the release goes live.
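A gate is ultimately just a check that makes a pipeline step fail. Assume a scanner has already produced a list of findings, each with a severity field. Real scanners each have their own report format, so the structure here is hypothetical. Under that assumption, a security gate could look like this in Python:

```python
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def gate_passes(findings: list[dict], fail_at: str = "HIGH") -> bool:
    """Return False if any finding is at or above the `fail_at` severity.

    Unknown severities rank as 0 here, so they never block on their own.
    """
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK.get(f["severity"], 0) >= threshold]
    for finding in blocking:
        print(f"Blocking {finding['severity']} finding: {finding['id']}")
    return not blocking


# Hypothetical scanner output for one container image.
report = [
    {"id": "finding-1", "severity": "MEDIUM"},
    {"id": "finding-2", "severity": "CRITICAL"},
]

passed = gate_passes(report)
# In CI you would exit non-zero on failure, e.g. sys.exit(0 if passed else 1),
# so the deployment stage never runs.
print("Security gate passed" if passed else "Security gate failed")
```

In a CI job, turning a failed gate into a non-zero exit code is what stops the pipeline from moving on to deployment.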

Putting these automations in place systematically is the key to success. If you’re just starting this journey, having a clear plan is the most important first step. For a structured approach, you can find some fantastic guidance in this DevOps implementation roadmap. By building these quality checks directly into your automation, you create a powerful feedback loop that constantly improves your application’s health over time.

Integrating Security from the Start

In the world of cloud development, thinking about security as a final checkbox before going live is a recipe for disaster. I’ve seen it happen too many times. A truly resilient application isn’t just patched at the end; it’s secure by design from its very first line of code.

This means embracing a DevSecOps mindset. Security isn’t some separate team’s problem—it’s woven into every single part of your development lifecycle. It’s a shared responsibility. By embedding security practices directly into your architecture and CI/CD pipeline, you’ll catch vulnerabilities when they’re small and easy to fix, not after they’ve become a crisis.

Managing Who Can Access What

Your first line of defence is simply controlling who gets to do what in your cloud environment. This is the entire job of Identity and Access Management (IAM). IAM isn’t about blocking people; it’s about giving them precisely the permissions they need to do their job and nothing more. This is a core concept we call the principle of least privilege.

For example, instead of granting a developer full admin rights to your entire cloud account, you create a specific “developer” role. This role might allow them to push code and check logs but explicitly deny them the power to, say, delete a production database. It’s a simple but incredibly effective way to prevent both accidents and malicious attacks from causing catastrophic damage.

Think of IAM roles like a set of digital keys. You wouldn’t hand every employee a master key to the whole building. You’d give them a key that only opens the doors they actually need to go through. Apply that exact same logic to your cloud resources.
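Here is what that developer role might look like as a policy. It uses the AWS IAM JSON policy shape (written as a Python dict) and comes with a deliberately simplified evaluator. The evaluator captures the two rules that matter most for least privilege. First, anything not explicitly allowed is denied. Second, an explicit Deny always wins. Real IAM evaluation also considers conditions, permission boundaries, and more. The account ID, region, and repository name below are made up.

```python
from fnmatch import fnmatchcase

# Hypothetical identifiers, for illustration only.
REPO_ARN = "arn:aws:codecommit:eu-west-1:123456789012:shop-app"

# Least-privilege "developer" role: push code and read logs, nothing else.
DEVELOPER_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Action": ["codecommit:GitPull", "codecommit:GitPush"],
         "Resource": REPO_ARN},
        {"Effect": "Allow",
         "Action": ["logs:GetLogEvents", "logs:FilterLogEvents"],
         "Resource": "*"},
        # Explicit guardrail: in IAM an explicit Deny overrides any Allow,
        # including Allows granted by other policies attached to the role.
        {"Effect": "Deny",
         "Action": ["rds:DeleteDBInstance", "rds:DeleteDBCluster"],
         "Resource": "*"},
    ],
}


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Simplified IAM-style check: explicit Deny wins, then any Allow, else deny."""
    allowed = False
    for stmt in policy["Statement"]:
        matches = (
            any(fnmatchcase(action, a) for a in _as_list(stmt["Action"]))
            and any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        )
        if matches and stmt["Effect"] == "Deny":
            return False
        if matches:
            allowed = True
    return allowed
```

With this policy, pushing to the shop-app repository is allowed. Pushing to any other repository, deleting the orders database, or touching S3 at all is denied.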

Protecting Your Data, Wherever It Is

Your data is your most precious asset, so protecting it is non-negotiable. Cloud security breaks this down into two fundamental states: data sitting still and data on the move. You need to encrypt both.

- Encryption in Transit: This is all about protecting data as it travels from a user’s browser to your app or between different microservices in your backend. We achieve this with TLS (Transport Layer Security), the same technology behind the padlock you see in your browser (HTTPS).

- Encryption at Rest: This safeguards your data when it’s stored on a disk, in a database, or in object storage like Amazon S3. All the major cloud providers make this easy to enable, often with a single setting in the console, and Amazon S3 now encrypts new objects by default.
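For encryption in transit between your own services, the main job is to make sure clients verify certificates and refuse outdated protocol versions. Here is a minimal sketch using Python's standard `ssl` module. It assumes an internal HTTPS endpoint, and the URL in the comment is hypothetical.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for calling another service over HTTPS."""
    # create_default_context() loads the system's trusted CA certificates and
    # turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    # Refuse legacy protocol versions (SSLv3, TLS 1.0, TLS 1.1).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
# Usage with the standard library HTTP client (hypothetical internal URL):
#   import urllib.request
#   urllib.request.urlopen("https://orders.internal.example.com/health", context=ctx)
```

Encryption at rest, by contrast, is usually a storage or database setting rather than application code.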

Getting these encryption strategies right isn’t just good practice; it’s often a requirement for compliance. To ensure you’re on the right track, it helps to review established cloud security standards, which provide a solid framework for meeting industry expectations.

Keeping Your Secrets, Secret

Every application has secrets: API keys, database credentials, authentication tokens. The absolute worst thing you can do is hardcode these into your source code or commit them to a Git repository. It’s one of the most common and easily avoidable mistakes I see.

This is exactly why cloud platforms offer dedicated services like AWS Secrets Manager or Azure Key Vault. These tools act as a secure vault. They store your sensitive credentials, and your application can fetch them securely only when it needs them at runtime. Your secrets are never exposed in your codebase, which immediately closes a massive security hole.
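Here is a minimal Python sketch of fetching database credentials from AWS Secrets Manager at runtime. It assumes you pass in a boto3 Secrets Manager client (`boto3.client("secretsmanager")`) and that the secret is stored as a JSON key/value string. The `DB_SECRET_ID` environment variable and the secret name are hypothetical. Only the secret's name lives in configuration; the credentials themselves never touch the codebase.

```python
import json
import os
from typing import Optional


def load_db_credentials(client, secret_id: Optional[str] = None) -> dict:
    """Fetch database credentials from AWS Secrets Manager at runtime.

    `client` is expected to be a boto3 Secrets Manager client; it is passed in
    so the function is easy to test with a fake.
    """
    # Only the secret's *name* comes from configuration, never the secret itself.
    secret_id = secret_id or os.environ["DB_SECRET_ID"]
    response = client.get_secret_value(SecretId=secret_id)
    # Key/value secrets come back as a JSON string in SecretString.
    return json.loads(response["SecretString"])


# Usage (requires AWS credentials and an existing secret; the name is hypothetical):
#   import boto3
#   creds = load_db_credentials(boto3.client("secretsmanager"), "prod/shop/db")
```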

Isolating Your Network Resources

Finally, let’s talk about the network. Just because your application runs in the cloud doesn’t mean it should be exposed to the entire internet. This is where you need to create a private, isolated corner of the cloud just for you, using a Virtual Private Cloud (VPC).

A VPC lets you build your own logical network. You can launch resources, like your database servers, into private subnets that have no direct connection to the public internet, creating a secure zone for your critical components.

To manage the flow of traffic, you use tools like security groups and network access control lists (ACLs). Think of these as a highly specific firewall for your cloud instances. You can create rules like, “Only allow incoming HTTPS traffic on port 443 from my load balancer, and block everything else.” This kind of precise control dramatically shrinks your application’s attack surface, making it far more difficult for anyone to find a way in.
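You can model the rule from that example in a few lines to show the allow-list logic: traffic is permitted only if some rule matches it, and everything else is denied. This Python sketch is a simplification. It uses a CIDR block for the load balancer's subnet (hypothetical here). Real security groups can also reference another security group directly, support port ranges, and are stateful.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str
    port: int
    source_cidr: str


# Hypothetical: the load balancer lives in 10.0.1.0/24 and only speaks HTTPS to the app.
APP_SERVER_RULES = [IngressRule(protocol="tcp", port=443, source_cidr="10.0.1.0/24")]


def is_permitted(rules: list[IngressRule], protocol: str, port: int, source_ip: str) -> bool:
    """Allow-list check: permit only traffic that matches a rule; deny everything else."""
    source = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.port == port
        and source in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


print(is_permitted(APP_SERVER_RULES, "tcp", 443, "10.0.1.25"))    # from the load balancer
print(is_permitted(APP_SERVER_RULES, "tcp", 22, "10.0.1.25"))     # SSH: no rule, so denied
print(is_permitted(APP_SERVER_RULES, "tcp", 443, "203.0.113.7"))  # internet host: denied
```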

Answering Your Cloud Development Questions

As you dive deeper into building for the cloud, you’re bound to hit a few common roadblocks. These are the questions that pop up for nearly every team, from wrestling with which platform to choose to figuring out if a trendy architecture is actually worth the effort. Let’s walk through some of the most frequent challenges with practical, straight-to-the-point answers to help you move forward.

This isn’t just about theory. These are the on-the-ground decisions that will make or break your project’s budget, timeline, and sanity down the road.

What Is the Biggest Challenge in Shifting to a Microservices Architecture?

Honestly, the single biggest challenge is dealing with distributed complexity. It’s a total mindset shift. While breaking a monolith into microservices gives you incredible flexibility, it also throws a whole new set of problems at you that you need to be ready for. You’re no longer managing one application; you’re trying to conduct an orchestra of dozens of independent, moving parts.

Suddenly, a whole new class of operational headaches comes to the surface:

- Service Discovery: How do your services even find each other to talk?

- Network Communication: What’s your plan for when the network gets flaky or a partition happens?

- Distributed Data Consistency: How on earth do you manage a transaction that touches multiple, separate services?
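For the flaky-network problem specifically, a common first line of defence is retrying with exponential backoff and jitter. Here is a minimal Python sketch. `TransientError` is a hypothetical stand-in for a timeout or connection reset, and the delays are illustrative.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical stand-in for a timeout or connection reset from another service."""


def call_with_retries(fn, attempts: int = 4, base_delay: float = 0.1,
                      max_delay: float = 2.0, sleep=time.sleep):
    """Call fn(), retrying transient failures with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller decide what to do
            # Back off up to 0.1s, 0.2s, 0.4s, ... (capped), picking a random
            # point in that window so many clients don't retry in lockstep.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
```

Passing a no-op `sleep` makes this easy to test without real delays. Note that retries are only safe for idempotent operations, or ones made idempotent with something like a request ID. Retrying a non-idempotent payment call could charge a customer twice, which is exactly the kind of distributed-consistency trap listed above.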

The only way to win this game is to invest heavily in two things right from the start: a rock-solid DevOps culture and powerful monitoring tools. You need to automate everything you possibly can and have visibility into every corner of your system. Tools like Prometheus for metrics and Grafana for dashboards stop being “nice-to-haves” and become absolutely essential. Using a pattern like an API Gateway can also be a lifesaver, giving you a central point of control for how the outside world interacts with your distributed mess. It’s a steep learning curve, for sure, but the payoff in scalability is massive.

How Do I Choose Between AWS, Azure, and Google Cloud?

There’s no magic answer here. The “best” cloud provider is the one that best fits your project, your budget, and, crucially, your team’s existing skills. Each of the big three has its own personality and clear strengths.

- AWS (Amazon Web Services): It’s the 800-pound gorilla with the largest market share and the most services by a long shot. If you need a mature, feature-packed platform with a giant community to help you when you get stuck, AWS is a very safe bet.

- Azure: This is Microsoft’s home turf. If your company already runs on things like Windows Server, Office 365, or Active Directory, Azure offers an incredibly smooth and powerful on-ramp to the cloud. It’s also particularly strong in hybrid cloud setups.

- Google Cloud (GCP): GCP has carved out a reputation as a leader in very specific, high-tech areas. It really shines with Kubernetes (thanks to GKE), big data analytics (BigQuery is a powerhouse), and machine learning. If your app’s secret sauce is in one of those fields, GCP is definitely worth a hard look.

My advice? Start with a practical evaluation. First, take stock of your team’s expertise; don’t underestimate the speed boost you get from using tools your team already knows. Then, map your architectural needs to what each platform does best. Finally, spin up a small proof-of-concept on your top one or two choices to see how they perform in the real world and, just as importantly, get a realistic feel for the monthly bill.

When Should I Actually Use Serverless Computing?

Serverless, with services like AWS Lambda or Azure Functions, is an amazing tool, but it’s not a hammer for every nail. It’s the perfect solution for event-driven, short-running, and stateless tasks where you just want to run some code without ever thinking about servers.

It’s the ultimate “just run my code” service, and it’s brilliant for scenarios like:

- API backends for web or mobile apps.

- Processing jobs that kick off when a new file is uploaded.

- Automating routine IT or infrastructure chores.

- Powering chatbots and other interactive, event-driven applications.

The two knockout benefits of serverless are its hands-off, automatic scaling and its pure pay-per-use pricing. You only pay for the exact compute time you use, often down to the millisecond, which can be incredibly cheap for workloads with unpredictable or bursty traffic.

That said, it’s the wrong choice for certain jobs. Steer clear of serverless for long-running processes (most platforms have timeout limits), applications needing deep control over the server environment, or services with very steady, predictable traffic where a reserved VM might actually be cheaper in the long run.
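You can rough out the break-even point quickly. The Python sketch below compares a pay-per-use model (a per-request fee plus compute billed in GB-seconds) with a single always-on VM. Every price in it is an illustrative placeholder, not any provider's current price list. The sketch also ignores free tiers and the possibility that steady traffic would need more than one VM.

```python
# Illustrative placeholder prices only; check your provider's current pricing.
PRICE_PER_MILLION_REQUESTS = 0.20  # per 1M invocations
PRICE_PER_GB_SECOND = 0.0000167    # compute, billed by memory x duration
VM_MONTHLY_COST = 30.0             # one always-on VM, assumed big enough for the load
SECONDS_PER_MONTH = 30 * 24 * 3600


def serverless_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float) -> float:
    """Pay-per-use cost: a per-request fee plus GB-seconds of compute."""
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = requests * avg_duration_s * memory_gb * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


scenarios = {
    "bursty, ~2M requests/month": 2_000_000,
    "steady 50 requests/s": 50 * SECONDS_PER_MONTH,
}
for name, monthly_requests in scenarios.items():
    cost = serverless_monthly_cost(monthly_requests, avg_duration_s=0.1, memory_gb=0.5)
    winner = "serverless" if cost < VM_MONTHLY_COST else "VM"
    print(f"{name}: serverless ${cost:,.2f} vs VM ${VM_MONTHLY_COST:,.2f} -> {winner}")
```

With these made-up numbers, the low-volume scenario costs about $2 a month on serverless. The steady 50-requests-per-second scenario costs well over $100, several times the VM's $30. Plug in your provider's real rates and your own traffic profile before drawing conclusions.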

How Critical Is Infrastructure as Code for Cloud Projects?

I’ll be blunt: Infrastructure as Code (IaC) is absolutely critical. I’d even say it’s a non-negotiable best practice for any serious cloud project, no matter how small. Committing to IaC from day one is one of the single most important decisions you can make for the long-term health of your system.

When you use tools like Terraform, AWS CloudFormation, or Azure Bicep, you define your entire cloud setup (networks, servers, databases, load balancers) in simple text files that you can version control. This gives you superpowers:

- Reproducibility: You can spin up an identical environment for dev, testing, or production with one command. The “it works on my machine” excuse is officially dead.

- Automation: Building and updating infrastructure becomes a fast, automated process, which dramatically cuts down on the risk of human error from manual clicking.

- Accountability: Since your IaC files live in Git, you have a perfect audit trail. You can see every single change made to your infrastructure, including who changed it and when.
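Under the hood, IaC tools compare the state you've declared with what actually exists and work out the changes needed to reconcile them. Terraform calls this step a plan. The Python sketch below is a toy model of that idea, not any tool's actual algorithm, and the resource names and settings are hypothetical.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Compare declared resources with deployed ones and list the changes needed.

    Both arguments map a resource name to its settings.
    """
    return {
        "create": sorted(name for name in desired if name not in actual),
        "update": sorted(name for name in desired
                         if name in actual and desired[name] != actual[name]),
        "delete": sorted(name for name in actual if name not in desired),
    }


# Desired state, as it might be declared in version-controlled files.
desired = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "db-orders": {"engine": "postgres", "size": "medium"},
    "lb-web": {"listeners": [443]},
}
# Resources currently deployed and tracked: the database was resized by hand,
# and an old bucket has since been removed from the declared files.
actual = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "db-orders": {"engine": "postgres", "size": "small"},
    "bucket-old-logs": {"versioning": False},
}
print(plan(desired, actual))
```

Run against an empty account, the same comparison lists every resource under `create`. That is why one set of files can stand up identical dev, test, and production environments.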

Embracing IaC is fundamental to getting the speed and reliability the cloud promises. It’s the key to building systems that don’t just scale, but are also manageable as they inevitably grow more complex.

Ready to build a cloud application that is scalable, secure, and perfectly aligned with your business goals? The expert team at Signiance Technologies can guide you through every stage, from architecture design to security implementation. Discover how we can help you unlock the full potential of the cloud.