When we talk about microservices design principles, we’re really talking about a set of ground rules for building applications in a fundamentally different way. Forget the old-school, all-in-one monolith. Instead, think of your application as a collection of small, independent services.

Each of these services is built to do one thing and do it well. It has its own codebase, focuses on a single business function, and can be worked on, deployed, and scaled all by itself. This modular approach is the secret sauce for creating software that’s both agile and tough enough to handle real-world failures.

Why Are Microservices Changing the Game in Software Design?

Let’s use a simple analogy. Think of a massive, traditional department store. Everything is under one roof, and all the departments (clothing, electronics, groceries) are deeply intertwined. If the central payment system goes down, the entire store grinds to a halt. That’s a monolithic application for you: a single point of failure can bring the whole thing crashing down.

Now, contrast that with a lively open-air market full of specialised, independent stalls. Each stall owner manages their own stock, pricing, and sales. If the bakery stall closes early, it doesn’t stop the butcher or the cheesemonger from doing business. The market as a whole keeps humming along. That’s the essence of microservices: an architecture that breaks an application into a collection of loosely connected services.

This isn’t just a new technical fad. It’s a strategic shift that helps businesses build software that can keep up with today’s demands for speed, scale, and reliability.

The Core Philosophy: Deconstruct to Construct

At its heart, the microservices philosophy is about breaking big, unwieldy problems into smaller, more digestible pieces. Each piece (each service) is designed around a specific business capability.

Take an e-commerce platform, for example. Instead of one giant application, you’d have distinct services for things like:

- User Authentication: Manages everything to do with user accounts, logins, and profiles.

- Product Catalogue: The single source of truth for all product information and stock levels.

- Shopping Cart: Keeps track of what a customer wants to buy.

- Payment Processing: Securely handles all the financial transactions.

This separation is a game-changer. It means different teams can own and work on different services at the same time, choosing the best tools and technologies for their specific job. It gives them autonomy and dramatically speeds up development. If you want to go deeper into these foundational ideas, our guide to unlocking the potential of microservices architecture (https://signiance.com/unlocking-the-potential-of-microservices-architecture/) is a great place to start.

Building for Resilience

One of the most powerful concepts in this world is designing for failure. It sounds counterintuitive, but it’s crucial.

With microservices, you expect things to fail. By isolating services, you ensure that when one component has a problem, it doesn’t cause a domino effect and take down the entire system. The rest of the application can carry on, which means a much better and more reliable experience for your users.

This approach fits hand-in-glove with modern cloud environments. In India, for example, the widespread move to the cloud has been a massive catalyst for microservices adoption. The 2021 IDC CloudView Survey showed that around 76.6% of Indian enterprises were already on the cloud, with Kubernetes adoption also on the rise. This ecosystem makes it easier than ever for businesses to build with small, independently deployable services, helping them adapt faster to market shifts.

This guide will walk you through the essential microservices design principles that make this architecture tick, moving beyond the theory to give you practical, actionable knowledge.

Exploring Core Microservices Design Principles

Building a great microservices architecture isn’t just about breaking a big application into smaller pieces. It’s about designing those pieces to work together effectively. To do that, you need a solid foundation built on a few core design principles.

Think of these principles less like rigid rules and more like a seasoned expert’s advice. They’re the guiding philosophy that helps you create systems that are flexible, independent, and tough enough to handle real-world problems. Let’s walk through the key ideas, one by one, with some simple analogies to make them stick.

The Single Responsibility Principle

At its core, the Single Responsibility Principle (SRP) is beautifully simple: a microservice should do one thing, and do it exceptionally well. It needs to own a single, clear-cut business capability.

Imagine a world-class chef’s kitchen. You have a saucier who masters sauces, a pastry chef dedicated to desserts, and a grill cook who perfects everything over the flame. Each expert focuses on their domain, leading to incredible quality. They don’t try to do each other’s jobs. Your microservices should be just like those specialist chefs.

In a typical e-commerce site, this looks like:

- A User Authentication Service that does nothing but manage logins, passwords, and security tokens.

- A Product Catalogue Service that is the single source of truth for all product information.

- An Order Processing Service that only handles the journey of an order from cart to completion.

This sharp focus is what stops your services from swelling into “mini-monoliths.” It keeps them lean, making them easier for a team to understand, maintain, and safely update without sending shockwaves through your entire application.

Understanding High Cohesion and Low Coupling

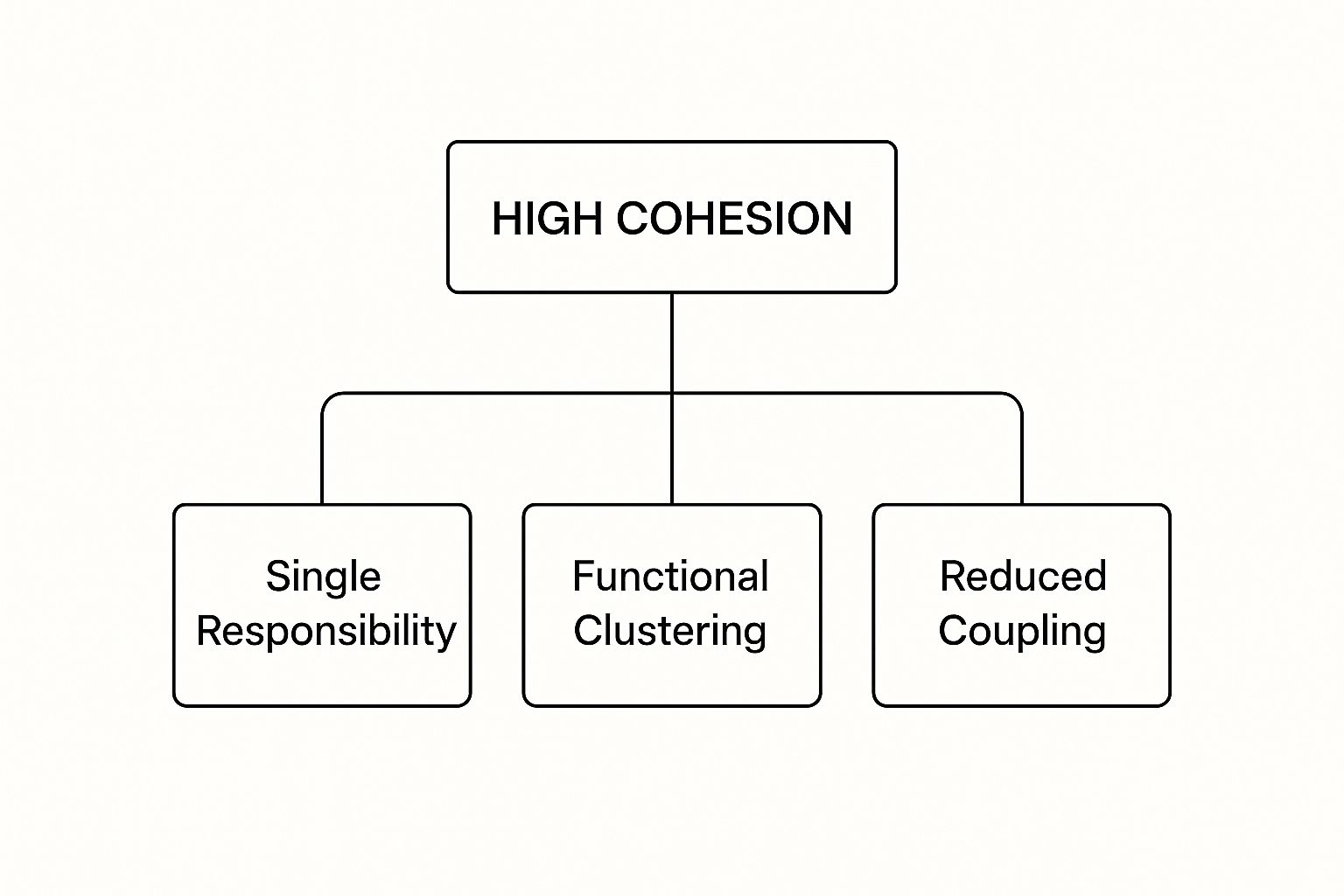

Sticking to the Single Responsibility Principle naturally leads you to two of the most desired states in software design: high cohesion and low coupling.

High Cohesion is when everything inside a service truly belongs together. All its code and data are tightly focused on its single purpose. Think back to our saucier: their knives, whisks, pans, and ingredients are all highly cohesive because they all serve the goal of making perfect sauces.

Low Coupling, on the other hand, means that your services are independent. They don’t need to know about the inner workings of other services. A change to one shouldn’t force a change in another. Our saucier can refine their béarnaise recipe without forcing the grill cook to change how they sear a steak. This independence is what allows different teams to develop and deploy their services in parallel.

The Power of the Bounded Context

So, SRP tells us a service should do one thing, but how do we decide where one “thing” ends and another begins? That’s where the idea of a Bounded Context comes in. Borrowed from Domain-Driven Design (DDD), a Bounded Context draws a clear line around a specific business area.

It’s like the different departments in a company. The word “customer” means something different to the Sales team (a lead, a potential deal) than it does to the Support team (an existing user with a history). Each department operates in its own context with its own language and priorities.

A Bounded Context acts as a protective bubble around a business capability. Inside this bubble, terms are unambiguous, and the business logic is consistent. Ideally, each microservice lives entirely within one Bounded Context.

For instance, the concept of a “Product” in an e-commerce system is different depending on who you ask:

- Inventory Context: Here, a “Product” is about its SKU, stock level, and warehouse location.

- Marketing Context: Now, a “Product” is defined by its catchy description, beautiful photos, and customer reviews.

- Shipping Context: In this context, a “Product” is all about its weight, dimensions, and where it can be sent.

If you tried to build one giant “Product” service to manage all of this, you’d end up with a tangled mess. Instead, you create separate services (an Inventory Service, a Catalogue Service, and a Shipping Service), each with its own clear boundary.
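
To make this concrete, here is a minimal Python sketch of how each context might keep its own model of a product. All class and field names are hypothetical, chosen to mirror the three contexts above. The only thing the models share is the SKU, which lets the contexts refer to the same item without sharing a schema.

```python
from dataclasses import dataclass


# Each bounded context owns its own "Product" model.
# The SKU is the only identifier the contexts share.

@dataclass(frozen=True)
class InventoryProduct:          # Inventory context
    sku: str
    stock_level: int
    warehouse_location: str


@dataclass(frozen=True)
class CatalogueProduct:          # Marketing / catalogue context
    sku: str
    description: str
    photo_urls: tuple[str, ...]
    review_count: int


@dataclass(frozen=True)
class ShippingProduct:           # Shipping context
    sku: str
    weight_kg: float
    dimensions_cm: tuple[float, float, float]
    allowed_regions: frozenset[str]


def can_ship_to(product: ShippingProduct, region: str) -> bool:
    # Shipping rules need nothing from the inventory or catalogue models.
    return region in product.allowed_regions
```

Because no model carries another context’s fields, the marketing team can add, say, a video URL to CatalogueProduct without the Shipping Service ever noticing.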

Embracing Decentralised Governance

In the old world of monoliths, everything was standardised. One database technology, one programming language, one way of doing things. Microservices throw that idea out the window and instead champion decentralised governance.

This principle empowers each team to choose the right tools for their specific job. The team building the search service might use Elasticsearch because it’s brilliant for text search. Meanwhile, the team handling financial transactions will likely opt for a rock-solid relational database like PostgreSQL for its reliability.

This freedom delivers some serious advantages:

- Best Tool for the Task: Teams aren’t forced to use a one-size-fits-all technology that isn’t a good fit.

- Sparks Innovation: It’s much easier for a single team to experiment with a new database or framework without risking the whole system.

- True Ownership: Teams feel a much stronger sense of accountability when they have the autonomy to make their own technical decisions.

The philosophy here is “smart endpoints, dumb pipes.” The intelligence and logic live inside each microservice, and they communicate using simple, universal protocols. No central authority is bottlenecking progress.

Designing for Failure

In any distributed system, things will go wrong. It’s not a question of if, but when. A network will glitch, a server will crash, or a third-party API will go down. The design-for-failure principle forces you to accept this reality from day one and build resilience right into your architecture.

Think of a modern ship built with watertight compartments. If the hull is breached in one spot, bulkheads contain the flooding to that single compartment, keeping the rest of the ship safe and afloat. Your microservices need those same bulkheads.

A failure in a non-essential service, like a product recommendation engine, should never take down your entire shop. The user might miss out on seeing “you might also like,” but they can still find products and, most importantly, check out. This is achieved with patterns like:

- Circuit Breakers: A circuit breaker stops your application from endlessly hammering a service that’s already failing, giving it a chance to recover.

- Timeouts: By setting quick timeouts, you ensure one slow service can’t stall an entire user request.

- Fallbacks: When a service is down, you can return a sensible default, such as a generic message instead of personalised content, to soften the impact.
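
Here is a minimal, hypothetical Python sketch of a circuit breaker combined with a fallback. It is an illustration rather than a production implementation: the CircuitBreaker class, its thresholds, and the injectable clock are all assumptions made for this example, and timeouts would normally be enforced by your HTTP client rather than by this class.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Minimal sketch: opens after repeated failures, retries after a cool-down."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after          # seconds to stay open
        self._clock = clock                     # injectable so tests can fake time
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                # Open: fail fast instead of hammering the struggling service.
                return fallback()
            # Half-open: allow one trial call; a single failure reopens the circuit.
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = operation()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            return fallback()
        # Success closes the circuit and resets the failure count.
        self._failures = 0
        return result
```

With a threshold of two, two consecutive failures open the breaker and callers receive the fallback (for example, generic bestsellers instead of personalised recommendations) without the failing service being called at all. Once the cool-down passes, one trial call decides whether the circuit closes again or reopens.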

By anticipating failure, you build a system that is fundamentally more robust and reliable. High availability is no longer an afterthought; it’s part of the design. For anyone looking to deepen their expertise in this area, you can read our detailed guide on strategies for cloud high availability. This proactive mindset is what separates fragile systems from truly resilient ones.

Decomposing a Monolith into Microservices

Breaking apart a legacy monolith often feels like trying to untangle a massive, knotted ball of yarn. It’s an intimidating task where one wrong move can create an even bigger mess. But it doesn’t have to be that way. With a clear strategy, you can transform this complex challenge into a series of manageable steps.

The secret isn’t a massive, all-at-once rewrite. In fact, that’s usually a recipe for disaster. The most successful projects chip away at the monolith incrementally. You let the new microservices architecture grow alongside the old system until, one day, the monolith can be gracefully retired.

Let’s walk through this process with a fictional e-commerce company, “BharatKart,” as they move away from their cumbersome, all-in-one application. This story will help make the abstract principles of decomposition feel much more concrete and real.

Identifying the First Piece to Extract

At BharatKart, the monolithic application handles absolutely everything: user accounts, product catalogues, inventory management, orders, and payments. The development team is feeling the pain. A tiny change to the payments feature requires a full, high-stakes redeployment of the entire system. It’s slow and risky. They know something has to change, and the first step is picking the right piece to carve out.

What makes an ideal first service? You’re looking for a few key traits:

- Loosely Coupled: The feature should have minimal and clearly defined connections to the rest of the monolith.

- High Value: Separating it needs to deliver a tangible win, like better scalability or faster development cycles for that feature.

- Relatively Stable: It’s wise to start with a function that isn’t constantly changing, as this simplifies the initial migration.

After a thorough analysis, the BharatKart team lands on the Product Review feature. It’s a perfect candidate. The feature is fairly self-contained, and separating it would let them innovate on user engagement without messing with the critical, transaction-heavy parts of the monolith. This is a textbook example of decomposition by business capability, one of the foundational microservices design principles.

The Strangler Fig Pattern in Action

To pull off the extraction without any service interruptions, the team turns to a brilliant strategy known as the Strangler Fig Pattern. The name comes from a type of vine that grows around a host tree, eventually enveloping and replacing it. In software, this means building a new system (the “vine”) around the old one (the “tree”), gradually taking over its functions until the old system is no longer needed.

The Strangler Fig Pattern is a powerful, low-risk method for incremental migration. It lets you build and test new services in a live production environment while the old system keeps running, ensuring a completely seamless transition for your users.

Here’s a breakdown of how BharatKart puts this pattern to work:

- Introduce a Proxy: The first move is to place a proxy layer in front of the monolith. At the start, this proxy does nothing more than pass all traffic for product reviews straight to the existing code within the monolith.

- Build the New Service: In parallel, the team builds a brand-new, independent “Reviews Service.” It has its own dedicated database and a clean API for submitting and retrieving reviews.

- Divert Traffic: Once the new service is built, tested, and deployed, they tweak the proxy’s configuration. Now, any request related to product reviews gets routed to the new Reviews Service instead of the monolith. The switch is flipped.

- Remove Old Code: After a period of careful monitoring to confirm the new service is working flawlessly, the team can confidently delete the old product review code and its associated database tables from the monolith.
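
At its core, the proxy’s job is a routing decision. The sketch below is a hypothetical Python version of that decision: the upstream URLs and the prefix-based routing table are assumptions for illustration. In practice the same rule would usually live in your reverse proxy or API gateway configuration.

```python
# Hypothetical upstream addresses, for illustration only.
MONOLITH = "http://monolith.internal"
REVIEWS_SERVICE = "http://reviews.internal"


def upstream_for(path: str, routes: dict[str, str]) -> str:
    """Pick the upstream for a request path; unmatched paths go to the monolith."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return MONOLITH
    # Longest prefix wins, so a more specific route overrides a broader one.
    return routes[max(matches, key=len)]


# Step 1 (introduce a proxy): no routes yet, so everything passes to the monolith.
routes_step1: dict[str, str] = {}

# Step 3 (divert traffic): review requests now go to the new Reviews Service.
routes_step3 = {"/api/reviews": REVIEWS_SERVICE}
```

Diverting traffic then amounts to a one-line configuration change, and rolling back is just as easy: remove the entry and requests flow to the monolith again.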

Just like that, the monolith is a little bit smaller and a little bit simpler. The new Reviews Service can now be scaled, updated, and deployed all on its own.

By repeating this cycle for other functions like “User Profiles” or “Notifications,” BharatKart can slowly but surely “strangle” their monolith, replacing it piece by piece with a modern, flexible microservices architecture. This methodical approach turns a daunting overhaul into a series of calculated, successful wins.

Give Each Service Its Own Database

One of the biggest mental hurdles when moving from a monolith to microservices is letting go of the single, massive database. In monolithic applications, having one database is standard practice, giving you the power of strong consistency through ACID (Atomicity, Consistency, Isolation, Durability) transactions.

But in a distributed world, that shared database becomes a huge bottleneck. It creates the very same tight coupling between services that we’re trying so hard to eliminate. This brings us to a foundational principle of microservices architecture: the database-per-service pattern.

The rule is simple but non-negotiable: each microservice is the sole owner of its domain data. No other service can touch its database directly. Think of it like this: every service gets its own private vault. If another service needs information from that vault, it can’t just break in. It has to go through the front desk (the service’s API) and make a formal request.
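
Here is a minimal Python sketch of the idea. It assumes in-process objects stand in for networked services and a dictionary stands in for each private database; all names are hypothetical. Python only marks the store as private by convention, so in a real deployment the isolation comes from separate databases and credentials.

```python
class InventoryService:
    """Sole owner of stock data. The dict stands in for its private database."""

    def __init__(self) -> None:
        self._db: dict[str, int] = {}   # private by convention: no outside access

    # Public API: in production these would be HTTP or gRPC endpoints.
    def add_stock(self, sku: str, qty: int) -> None:
        if qty <= 0:
            raise ValueError("qty must be positive")
        self._db[sku] = self._db.get(sku, 0) + qty

    def stock_level(self, sku: str) -> int:
        return self._db.get(sku, 0)


class OrderService:
    """Owns order data and asks the Inventory Service for stock via its API."""

    def __init__(self, inventory: InventoryService) -> None:
        self._inventory = inventory
        self._orders: list[tuple[str, int]] = []   # the Order Service's own store

    def place_order(self, sku: str, qty: int) -> bool:
        # Goes through the "front desk" rather than reading inventory._db.
        if self._inventory.stock_level(sku) < qty:
            return False
        self._orders.append((sku, qty))
        return True
```

Notice that place_order only checks stock. Actually reserving it would be a write in a second service’s vault, which is exactly the coordination problem discussed next.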

This approach gives each service true independence and stops data dependencies from creeping back in. However, it also opens up a whole new can of worms. If a single business action needs to update data across several services, how do you keep everything in sync?

The Distributed Transaction Dilemma

Let’s walk through a classic e-commerce example. A customer clicks “Buy Now.” This one simple action triggers a cascade of events across different services:

- The Order Service needs to create a new order record.

- The Inventory Service has to deduct the purchased items from its stock count.

- The Billing Service must process the customer’s payment.

What happens if one of these steps breaks? Let’s say the payment gets declined by the Billing Service. The Order Service has already created an order, and the Inventory Service has already reserved the stock. You can’t just hit “undo.” This is where traditional, all-or-nothing transactions completely fall apart, and we need patterns built for this new reality.

In a distributed system, you are essentially trading the simplicity of immediate, strong consistency for the resilience and scalability of eventual consistency. Your system will become consistent over time, but it might be momentarily out of sync while different processes complete their work.

This trade-off is at the very heart of data management in microservices. Instead of relying on rigid transactions that can lock up parts of the system, we adopt patterns designed specifically for the distributed, asynchronous nature of this architecture.

The Saga Pattern Comes to the Rescue

One of the most effective solutions for this problem is the Saga pattern. A Saga is essentially a sequence of local transactions. Each transaction updates the data within a single service and then kicks off the next step, usually by broadcasting an event that other services are listening for.

Let’s apply this to our e-commerce flow:

- A user places an order. The Order Service creates an order record with a “pending” status and then publishes an OrderCreated event.

- The Inventory Service, which is subscribed to that event, receives it and attempts to reserve the stock. If it succeeds, it publishes its own InventoryReserved event.

- The Billing Service is listening for InventoryReserved, so it processes the payment and publishes a PaymentSucceeded event.

- Finally, the Order Service listens for PaymentSucceeded and, upon receiving it, updates the order status from “pending” to “confirmed.”

But what about failures? If the Billing Service publishes a PaymentFailed event instead, the Saga kicks into reverse. It executes a series of compensating transactions to undo the previous steps. The Inventory Service would receive this failure event and release the reserved stock. The Order Service would then change the order status to “cancelled.” This ensures the entire system returns to a consistent state without ever needing a global, system-wide transaction lock.
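
The choreographed flow above, including its compensating steps, can be sketched in Python with an in-memory event bus standing in for a real message broker. The event names follow the text; the InventoryRejected event and all function names are hypothetical additions. Delivery here is synchronous and in order, which a real broker would not guarantee.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """In-memory stand-in for a message broker (synchronous, in-order delivery)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[str] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        self.log.append(event_type)
        for handler in list(self._subscribers[event_type]):
            handler(event)


def wire_services(bus: EventBus, stock: dict[str, int], orders: dict[str, str],
                  payment_ok: Callable[[Event], bool]) -> None:
    # Inventory Service: a local transaction that reserves stock.
    def reserve(e: Event) -> None:
        if stock.get(e["sku"], 0) >= e["qty"]:
            stock[e["sku"]] -= e["qty"]
            bus.publish("InventoryReserved", e)
        else:
            bus.publish("InventoryRejected", e)   # hypothetical event

    # Billing Service: charge once stock is reserved.
    def charge(e: Event) -> None:
        bus.publish("PaymentSucceeded" if payment_ok(e) else "PaymentFailed", e)

    # Inventory Service compensating transaction: release the reservation.
    def release(e: Event) -> None:
        stock[e["sku"]] += e["qty"]

    # Order Service: final status updates.
    def confirm(e: Event) -> None:
        orders[e["order_id"]] = "confirmed"

    def cancel(e: Event) -> None:
        orders[e["order_id"]] = "cancelled"

    bus.subscribe("OrderCreated", reserve)
    bus.subscribe("InventoryReserved", charge)
    bus.subscribe("PaymentSucceeded", confirm)
    bus.subscribe("PaymentFailed", release)
    bus.subscribe("PaymentFailed", cancel)
    bus.subscribe("InventoryRejected", cancel)


def place_order(bus: EventBus, orders: dict[str, str],
                order_id: str, sku: str, qty: int) -> None:
    """Order Service entry point: record a pending order and publish OrderCreated."""
    orders[order_id] = "pending"
    bus.publish("OrderCreated", {"order_id": order_id, "sku": sku, "qty": qty})
```

No service calls another directly; each one only reacts to events. When payment fails, the reservation is released and the order is cancelled, leaving stock exactly where it started.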

Choosing Your Data Consistency Pattern

Deciding on the right data management strategy means carefully balancing your needs for consistency, availability, and complexity. The table below compares a few common patterns to help you choose.

| Pattern | Best For | Complexity | Consistency Guarantee |
|---|---|---|---|
| Eventual Consistency | High-availability systems where a temporary data lag is acceptable. | Moderate | Eventual |
| Saga Pattern | Complex, multi-step business processes requiring data integrity. | High | Eventual |
| API Composition | Read operations that need to pull data from multiple services. | Low | Varies |
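
To illustrate the API Composition row, here is a hypothetical Python sketch of a composer that queries three services in parallel and joins the results in memory. The fetch functions stand in for network calls, and the product name and figures are made-up sample values. Because each service is read at a slightly different moment, the combined view is only as consistent as its sources, which is why the table lists the guarantee as “Varies.”

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any


# Hypothetical read endpoints of three services (sample data, not real).
def fetch_catalogue(sku: str) -> dict[str, Any]:
    return {"sku": sku, "name": "Steel Kettle"}


def fetch_stock(sku: str) -> dict[str, Any]:
    return {"sku": sku, "stock_level": 7}


def fetch_reviews(sku: str) -> list[dict[str, Any]]:
    return [{"rating": 5}, {"rating": 3}]


def product_page(sku: str) -> dict[str, Any]:
    """API composer: query each owning service in parallel, then join in memory."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        catalogue = pool.submit(fetch_catalogue, sku)
        stock = pool.submit(fetch_stock, sku)
        reviews = pool.submit(fetch_reviews, sku)

        try:
            ratings = [r["rating"] for r in reviews.result(timeout=2)]
        except Exception:
            ratings = []  # degrade gracefully if the Reviews Service fails

        return {
            **catalogue.result(timeout=2),
            "stock_level": stock.result(timeout=2)["stock_level"],
            "average_rating": sum(ratings) / len(ratings) if ratings else None,
        }
```

Treating reviews as optional is a design choice here: a product page without ratings is still useful, whereas one without catalogue data is not.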

Ultimately, getting data management right is a make-or-break skill for building successful microservices. It forces a shift in thinking away from the familiar comfort of a centralised database and towards patterns built for the resilient, scalable, and distributed applications of today.

Avoiding Common Microservices Design Pitfalls

Moving to a microservices architecture is often sold as the key to unlocking agility and resilience. But tread carefully. The path is littered with traps that can lead you to a system with all the headaches of a distributed network and none of the rewards. To truly build something robust and maintainable, you have to know what not to do.

One of the sneakiest and most common mistakes is stumbling into a distributed monolith. This is where your services look separate on a diagram but are so tangled up in practice that they can’t function independently. You end up in a situation where deploying one tiny change requires a coordinated, all-hands-on-deck effort across multiple teams, which is the very problem you were trying to escape.

The Distributed Monolith Trap

So, how does this happen? A distributed monolith usually grows from fuzzy service boundaries. Imagine your “Order Service” has to make a series of synchronous, blocking calls to the “Inventory Service,” then the “Shipping Service,” and finally the “User Service” just to process a single purchase. You don’t have independent services; you have a fragile, distributed process masquerading as an architecture.

The root cause is almost always tight coupling. This could mean services sharing a single database (a huge red flag!) or being chained together by synchronous communication. A glitch in one service doesn’t just affect that service; it can trigger a domino effect, bringing the whole operation crashing down. What you’re left with is a system that’s far harder to troubleshoot than the monolith you left behind.

The core danger of a distributed monolith is that you get the worst of both worlds: the network latency and operational complexity of microservices combined with the tight coupling and deployment bottlenecks of a monolith.

This isn’t just a theoretical problem; it’s a major challenge for teams everywhere. In India, for example, the microservices market is booming, with projections showing a compound annual growth rate of around 19.2% through 2035. As industries like banking and healthcare rush to adopt these architectures, the risk of making costly mistakes grows. Getting the design principles right from the start is absolutely critical for business continuity. You can dive deeper into this trend in this market research on India’s microservices architecture.

Performance Killers to Avoid

Beyond the distributed monolith, a few other classic mistakes can kill your system’s performance and make it impossible to maintain. Spotting these early can save you a world of pain down the road.

- Chatty Inter-Service Communication: This is what happens when one user action triggers a storm of back-and-forth network calls between services. Each trip across the network adds latency, and when you multiply that by dozens of calls, your application grinds to a halt. It’s far better to design coarser-grained APIs or switch to asynchronous communication patterns.

- Neglecting Observability: With a monolith, finding a bug is relatively simple. But in a distributed system, a single request might weave its way through five or six different services. Without the right tools, tracing that request is like trying to find a needle in a haystack. If you don’t build in centralised logging, distributed tracing, and solid monitoring from day one, you’re setting your team up for a debugging nightmare.

- Ignoring Deployment Strategy: The promise of deploying services independently is a huge draw. But that magic doesn’t happen on its own: it requires a mature CI/CD pipeline and a clear strategy. Without robust automation, managing deployments becomes a chaotic, manual mess prone to human error. For a solid foundation, take a look at our detailed guide on effective microservice deployment patterns.
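
To see why chatty communication hurts, consider this hypothetical Python sketch that counts simulated network round-trips. A fine-grained API needs one call per cart item, while a coarse-grained batch endpoint (an assumed get_prices method) fetches everything in a single call. All names are illustrative.

```python
class CatalogueClient:
    """Counts simulated network round-trips to make chattiness visible."""

    def __init__(self, prices: dict[str, float]) -> None:
        self._prices = prices
        self.round_trips = 0

    def get_price(self, sku: str) -> float:
        # Fine-grained: one network call per item.
        self.round_trips += 1
        return self._prices[sku]

    def get_prices(self, skus: list[str]) -> dict[str, float]:
        # Coarse-grained: one network call for the whole batch.
        self.round_trips += 1
        return {sku: self._prices[sku] for sku in skus}


def cart_total_chatty(client: CatalogueClient, cart: dict[str, int]) -> float:
    return sum(client.get_price(sku) * qty for sku, qty in cart.items())


def cart_total_batched(client: CatalogueClient, cart: dict[str, int]) -> float:
    prices = client.get_prices(list(cart))
    return sum(prices[sku] * qty for sku, qty in cart.items())
```

Both functions compute the same total, but for a three-item cart the chatty version makes three round-trips and the batched version makes one. On a real network, every round-trip saved is latency saved, and the gap widens as carts grow.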

By actively watching out for these common pitfalls, you can steer your project clear of the rocks and build an architecture that truly delivers on the promises of scalability, resilience, and manageability.

Answering Your Key Microservices Questions

As teams move from theory to practice, specific questions always pop up. This section tackles those queries head-on with concise, experience-based answers.

How Small Should a Microservice Be?

Size isn’t determined by lines of code. It’s about owning a clear business function end to end.

Think back to the Single Responsibility Principle and Bounded Context. A service should be small enough for a single two-pizza team to own, and no larger. If you need “and” to describe its duties (for example, “handles orders and sends notifications”), you’re probably overloading it.

Try these pointers:

- Give each service one distinct purpose.

- Keep each service small enough that its team could rewrite it in a sprint.

- Split a service whenever you catch yourself describing it with phrases like “and then also.”

A “User Management Service” is tight and coherent. But add “Product Reviews” and you create two competing responsibilities. Always lean on functional boundaries, not arbitrary size targets.

Is a Monolithic Architecture Always Bad?

Not at all. For new projects, a monolith can be the quickest route to market. It offers:

- Simple deployments with one package.

- Less network complexity to debug.

- Faster feedback loops in early development.

The real risk is a “big ball of mud.” Instead, aim for a modular monolith with clear internal boundaries. This approach lets you:

- Launch fast on a single codebase.

- Extract microservices only when true complexity demands it.

- Focus on business needs, not trendy architectures.

Choosing an architecture is about making deliberate trade-offs. A monolith prioritises simplicity and speed, while microservices focus on scalability and team autonomy. Neither is inherently “bad”; they’re just different tools for different jobs.

How Do Microservices Handle Transactions?

Traditional two-phase commit (2PC) tightly couples the participating services, and its transaction coordinator can become a single point of failure. Most teams prefer Sagas: a series of local transactions linked by events.

A typical Saga flow:

- Service A updates its data and emits an event.

- Service B reacts, updates its own data, then emits the next event.

- If a step fails, compensating transactions roll back prior changes.

This keeps services autonomous and ensures the system eventually reaches a consistent state, without locking everything on a single commit.

What Is the Difference Between Orchestration and Choreography?

Imagine a music conductor versus a jazz combo.

| Aspect | Orchestration | Choreography |
|---|---|---|
| Control | One service dictates each step | Services react independently to published events |
| Communication | Direct calls between services | Event-driven, publish/subscribe |
| Coupling | Tighter, with explicit commands | Looser, through shared event contracts |
| Observability | Clear tracing via the orchestrator | Requires event tracing for a full picture |

Orchestration offers a clear order of steps but can be brittle if the central service fails. Choreography feels more organic (services “listen and dance”), but you need solid event logging to see the full picture.
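
For contrast with choreography, here is a minimal orchestration sketch in Python. A single run_saga function (a hypothetical helper, not a library API) dictates each step and, if one fails, runs the compensations for the completed steps in reverse order. A production orchestrator would also persist its progress so it can resume after a crash; this sketch does not.

```python
from typing import Callable

# (step name, action, compensating action)
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: list[Step]) -> tuple[bool, list[str]]:
    """Orchestrator: run each step in order; on failure, undo completed steps in reverse."""
    completed: list[Step] = []
    trace: list[str] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
        except Exception:
            trace.append(f"{name} failed")
            # Compensate newest-first so each undo sees the state it expects.
            for done_name, _, compensate in reversed(completed):
                compensate()
                trace.append(f"compensated {done_name}")
            return False, trace
        completed.append(step)
        trace.append(f"{name} ok")
    return True, trace
```

Because every decision lives in one place, the returned trace gives you the “clear tracing via the orchestrator” from the table. The trade-off is that the orchestrator now knows about every participating service.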

At Signiance Technologies, our cloud architects guide you through these nuanced choices. We help you build systems that are robust, secure, and primed for growth. Discover our approach at Signiance Technologies.