A proper test automation plan is more than just a document; it’s the strategic roadmap that dictates how, what, and why you’re automating. Forget just writing scripts for a moment. This is about building a sustainable framework that ties every single testing effort directly to your business goals. When done right, every automated test provides real, measurable value and a clear return on investment.

Building Your Foundation for Automation Success

Diving into test automation without a solid plan is a classic mistake I’ve seen too many times. It almost always leads to wasted effort and a suite of tests that break with the slightest change. Before you even think about writing a line of code, you have to lay a strong foundation. This initial work is what connects your automation dreams to tangible business outcomes, shifting testing from a cost centre to a genuine value driver.

This is also how you get stakeholders on board. To get buy-in, you need to speak their language. Instead of getting bogged down in technical jargon, frame your goals in terms of business impact. For example, don’t say, “we’ll automate the login tests.” Instead, try, “we’ll shrink the manual regression testing cycle for user login from two days down to just two hours.” See the difference?

This push for efficiency isn’t just a trend; it’s a massive market shift. Here in India, the automation testing market is expected to jump from USD 1.3 million in 2024 to a staggering USD 4.8 million by 2033. This boom is fuelled by the relentless demand for faster feedback and fewer errors, pushing companies to weave automation into the very fabric of their quality strategy. A recent IMARC Group report digs deeper into these market dynamics if you’re interested.

Conducting a Thorough Feasibility Analysis

One of the first and most crucial steps in your test automation planning is a feasibility analysis. The point here isn’t to ask whether automation is possible; it’s to figure out where it’s practical and where it will deliver the best return. You have to carefully assess which parts of your application are ripe for automation and, just as importantly, which should remain in the hands of manual testers.

When you’re doing this analysis, I always recommend focusing on these areas:

- Application Stability: Zero in on features that are stable. If the UI or core functionality is constantly changing, you’ll be trapped in a nightmare of endless script maintenance.

- Test Case Complexity: Your best starting points are the simple, repetitive, and data-driven tests. Highly complex, exploratory tests that rely on human intuition are terrible candidates for automation.

- Technology Stack Compatibility: This one’s a deal-breaker. Make sure your chosen automation tools and frameworks actually work with your application’s tech stack, whether it’s built on React, Angular, or .NET.

A common pitfall I see teams fall into is chasing 100% automation coverage. Let me be clear: the goal should be strategic coverage. A well-designed suite that automates 60% of your most critical, high-risk features is infinitely more valuable than a poorly maintained suite that tries to cover everything and fails.

To help you get started, I’ve put together a simple checklist. Use it with your team to have a structured conversation about what to automate first.

Automation Feasibility Checklist

This checklist will help you and your team methodically evaluate different parts of your application to decide what makes the most sense to automate.

| Assessment Area | Key Questions to Ask | High Priority (Yes/No) |
|---|---|---|
| Business Criticality | Is this feature essential for revenue or core user workflows? What is the business impact if it fails? | Yes |
| Repetitive Tasks | Is this test executed frequently, like in every regression cycle? Is it a tedious, manual process? | Yes |
| Feature Stability | Has this feature’s UI and functionality been stable for the last few release cycles? | Yes |
| Data-Driven Testing | Does this test need to be run with multiple, large datasets to ensure coverage? | Yes |
| Cross-Browser/Device | Does this functionality need to be validated across many different browsers, devices, or operating systems? | Yes |
| Complex Logic | Does the test require human intuition, subjective validation (e.g., “does this look good?”), or complex steps? | No |
| One-Off Tests | Is this a test for a new feature that will likely only be run once or twice before the feature changes? | No |

By running through these questions, you’ll quickly build a prioritised list of candidates that are technically feasible and aligned with your business objectives, giving your automation efforts a much higher chance of success.
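If you want to turn the checklist into a repeatable ranking, a few lines of Python can do it. The sketch below is one possible approach, not a standard method: it assumes every row carries equal weight, and the `Candidate` class, the `feasibility_score` and `prioritise` functions and the example features are all hypothetical. For the last two rows a “No” answer is the favourable one, so those answers are inverted before counting.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical checklist answers for one feature (one field per table row)."""
    name: str
    business_critical: bool
    repetitive: bool
    stable: bool
    data_driven: bool
    cross_browser: bool
    needs_human_judgement: bool  # "Complex Logic" row: favours automation when False
    one_off: bool                # "One-Off Tests" row: favours automation when False


def feasibility_score(c: Candidate) -> int:
    """Count the checklist answers that favour automation (0 to 7)."""
    favourable = [
        c.business_critical,
        c.repetitive,
        c.stable,
        c.data_driven,
        c.cross_browser,
        not c.needs_human_judgement,
        not c.one_off,
    ]
    return sum(favourable)


def prioritise(candidates: list[Candidate]) -> list[tuple[str, int]]:
    """Return (name, score) pairs, highest score first."""
    scored = [(c.name, feasibility_score(c)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    backlog = [
        Candidate("checkout", True, True, True, True, True, False, False),
        Candidate("new onboarding wizard", False, False, False, False, True, True, True),
        Candidate("login", True, True, True, False, True, False, False),
    ]
    for name, score in prioritise(backlog):
        print(f"{name}: {score}/7")
```

With these made-up answers the ranking comes out as checkout, then login, then the onboarding wizard. If business criticality matters more to you than, say, cross-browser reach, swapping the plain count for a weighted sum is a small change.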

Setting Clear and Achievable Automation Goals

With your feasibility analysis done, you’re ready to set some real, meaningful goals. Vague targets like “improve quality” are useless because you can’t measure them. You need to define specific, measurable, achievable, relevant, and time-bound (SMART) goals.

These precise targets will guide your team and, more importantly, help you prove the value of your work.

Here are some examples of strong automation goals from projects I’ve worked on:

- Boost test coverage of our payment gateway from 40% to 90% within the next three months.

- Cut the time for a full regression test run before release from 40 person-hours to just 4 automated hours.

- Slash the number of critical bugs discovered in production by 25% over the next two quarters.

Setting these benchmarks from day one gives your team a clear direction and builds a powerful business case for your entire test automation planning initiative.
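Measurable goals are easiest to report on when you track how far along the path from baseline to target you are. Here is a minimal sketch, assuming each goal has a single numeric baseline and target. The `AutomationGoal` class is hypothetical, and the “current” values (65% coverage, a 13-hour run) are invented for illustration; the time-bound part of each goal would still be checked against your own deadline.

```python
from dataclasses import dataclass


@dataclass
class AutomationGoal:
    """A measurable goal: move one metric from a baseline towards a target."""
    description: str
    baseline: float
    target: float

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target distance covered so far.

        Works for goals that should rise (coverage) and goals that should
        fall (regression hours), because the sign cancels out in the division.
        """
        span = self.target - self.baseline
        if span == 0:
            raise ValueError("baseline and target must differ")
        return (current - self.baseline) / span


coverage = AutomationGoal("Payment gateway coverage (%)", baseline=40, target=90)
regression = AutomationGoal("Full regression run (hours)", baseline=40, target=4)

print(f"{coverage.progress(65):.0%}")    # 50%: 25 of the 50 points gained
print(f"{regression.progress(13):.0%}")  # 75%: 27 of the 36 hours removed
```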

Alright, you’ve got your high-level goals sorted. Now comes the real work: figuring out what to actually automate. It’s easy to get excited and want to automate everything, but I’ve seen that movie before, and it doesn’t end well. Trying to boil the ocean is the fastest way to create a monstrous, flaky test suite that’s more trouble than it’s worth.

The smart approach? Be surgical. Don’t ask, “What can we automate?” Instead, you should be asking, “What should we automate to get the biggest bang for our buck?” This means shifting your thinking to a risk-based model. Your mission is to pinpoint the parts of your application that are both critical and high-risk. That’s where you’ll find your best return on investment.

Adopting a Risk-Based Model

So, how do you find those high-risk areas? Start by mapping out your user workflows and thinking about what would happen if they broke. Which features failing would cause the most chaos for your users and your business? Start right there.

Typically, these are core functions like:

- User login and authentication flows

- Shopping cart and payment processing

- Critical data submission forms (like a new patient registration)

By tackling these high-value areas first, you’re not just protecting the business; you’re also scoring some quick, visible wins. Nothing builds momentum and gets stakeholders on your side faster than showing immediate, tangible results.
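A common way to make “critical and high-risk” concrete is a simple risk matrix: rate each workflow’s likelihood of failure and its business impact, multiply the two, and automate from the top down. The sketch assumes 1 to 5 scales and a cut-off score of 12. The workflow names echo the examples above, but every rating and the threshold are placeholders your team would agree on, not measured data.

```python
# Hypothetical 1-5 ratings agreed in a team workshop; not measured data.
WORKFLOWS = {
    "login and authentication": {"likelihood": 3, "impact": 5},
    "cart and payment": {"likelihood": 4, "impact": 5},
    "patient registration form": {"likelihood": 3, "impact": 4},
    "profile avatar upload": {"likelihood": 2, "impact": 1},
}


def risk_score(rating: dict[str, int]) -> int:
    """Risk-matrix score: likelihood of failure multiplied by business impact."""
    return rating["likelihood"] * rating["impact"]


def automation_order(ratings: dict[str, dict[str, int]], threshold: int = 12) -> list[str]:
    """Workflows scoring at or above the threshold, riskiest first."""
    ranked = sorted(ratings, key=lambda name: risk_score(ratings[name]), reverse=True)
    return [name for name in ranked if risk_score(ratings[name]) >= threshold]


print(automation_order(WORKFLOWS))
# ['cart and payment', 'login and authentication', 'patient registration form']
```

Low scorers such as the avatar upload are not discarded; they simply wait until the high-risk journeys are covered.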

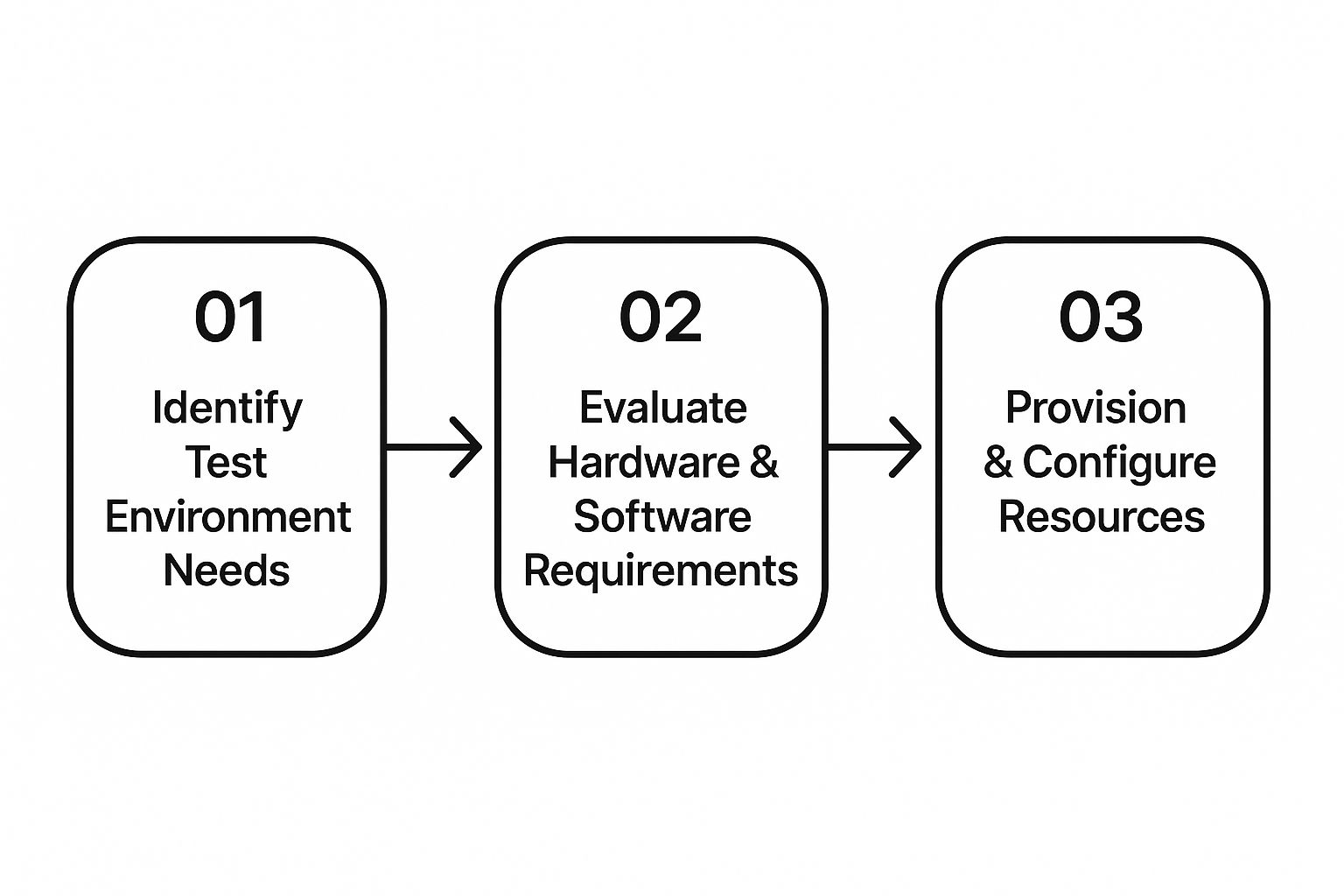

This diagram helps visualise how to get your environment ready for these prioritised tests.

As you can see, a structured setup is non-negotiable. It’s the foundation that allows your most important tests to run reliably, time and time again.

Balancing Your Tests with the Automation Pyramid

Once you know what to test, the next question is how. This is where the test automation pyramid becomes your best friend. It’s a simple but powerful model for building a healthy test suite that’s fast, stable, and doesn’t become a maintenance nightmare. The whole idea is to get the right mix of different test types.

- Unit Tests (The Foundation): These are your bread and butter. They should make up the vast majority of your tests. Unit tests check tiny, individual pieces of code in isolation. They are incredibly fast and give developers instant feedback, which means catching bugs when they’re still small and cheap to fix.

- Integration Tests (The Middle Layer): Moving up the pyramid, integration tests check how different parts of your system talk to each other. Think of testing the connection between your application and a third-party API or making sure it writes to the database correctly. They’re a bit slower than unit tests but are essential for finding those tricky interaction bugs.

- UI/End-to-End Tests (The Peak): Right at the top, you have your end-to-end tests. These mimic a real user clicking through your application’s interface from start to finish. They are incredibly valuable for verifying critical user journeys, but they are also the slowest, most fragile, and most expensive tests you’ll write. Use them sparingly.

A classic mistake I see all the time is the “ice-cream cone” anti-pattern: tons of slow, brittle UI tests and hardly any unit tests. This is a surefire recipe for a slow, unreliable CI/CD pipeline, which completely defeats the purpose of automating in the first place.

By sticking to the pyramid’s structure, you build a balanced portfolio of tests. This strategic approach to test automation planning is fundamental. It’s what allows you to maintain quality while still delivering software quickly and reliably.
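You can check whether a suite actually resembles a pyramid by counting tests per layer. This sketch assumes each test is already tagged as `unit`, `integration` or `e2e` (for example by folder or test marker); the function names are hypothetical and the 70/20/10 split in the example is illustrative, not a fixed rule.

```python
from collections import Counter

LAYERS = ("unit", "integration", "e2e")


def pyramid_report(test_layers: list[str]) -> dict[str, float]:
    """Return the share of tests in each layer of the pyramid."""
    counts = Counter(test_layers)
    total = sum(counts[layer] for layer in LAYERS)
    if total == 0:
        raise ValueError("no tagged tests to analyse")
    return {layer: counts[layer] / total for layer in LAYERS}


def looks_like_pyramid(shares: dict[str, float]) -> bool:
    """Healthy shape: each layer is smaller than the one beneath it."""
    return shares["unit"] > shares["integration"] > shares["e2e"]


def is_ice_cream_cone(shares: dict[str, float]) -> bool:
    """The anti-pattern: more end-to-end UI tests than unit tests."""
    return shares["e2e"] > shares["unit"]


healthy = pyramid_report(["unit"] * 70 + ["integration"] * 20 + ["e2e"] * 10)
cone = pyramid_report(["unit"] * 5 + ["integration"] * 15 + ["e2e"] * 80)
print(looks_like_pyramid(healthy), is_ice_cream_cone(healthy))  # True False
print(looks_like_pyramid(cone), is_ice_cream_cone(cone))        # False True
```

Running a check like this in CI turns the pyramid from a slide into a guardrail: a drift towards the cone shows up as a failing report rather than as a slowly degrading pipeline.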

Picking the Right Tools and Framework

Alright, you’ve mapped out what to automate and set your priorities. Now comes the part that trips up a lot of teams: choosing your toolkit. The market is absolutely saturated with options, from powerful open-source libraries to slick commercial platforms. It’s easy to get overwhelmed.

The secret isn’t finding the “best” tool, but the right tool for your specific situation: your team’s skills, your application’s technology and, of course, your budget.

Open-Source vs. Commercial: The Classic Debate

One of the first big decisions you’ll face is whether to go with an open-source or a commercial solution. There’s no single right answer here, just trade-offs.

Open-source tools like Selenium, Playwright, and Cypress are massively popular for good reason. They’re free, highly flexible, and backed by huge, active communities. The catch? They usually demand more technical know-how from your team. Think of it like getting a box of top-tier engine parts; you still need the expertise to build and maintain the car yourself.

On the other side of the fence, you have commercial platforms like Katalon or Testim. These often provide a polished, all-in-one experience right out of the box. You’ll find user-friendly interfaces, dedicated customer support, and built-in reporting, which can seriously cut down the time it takes to get started. The main trade-off is the licensing fee, which can be a deal-breaker for startups or teams on a tight budget.

This choice is happening within a market that’s absolutely booming. The global automated software testing market is predicted to jump from an estimated $101.35 billion in 2025 to a staggering $284.73 billion by 2032. Here in India, the trend is even more pronounced, driven by the massive shift to DevOps and the emergence of AI-powered testing. The local market is on track to surpass $7 billion by 2025. You can dig deeper into these figures in recent market research.

When you’re deciding between these two paths, it’s helpful to lay out the pros and cons clearly.

Tool Selection Criteria Comparison

| Evaluation Criterion | Open-Source Tools (e.g., Selenium) | Commercial Tools (e.g., Katalon) |
|---|---|---|
| Initial Cost | Free to use; costs are in personnel time for setup and maintenance. | Requires subscription or licensing fees, which can be significant. |
| Team Skill Requirement | Generally requires strong coding skills and technical expertise. | Often low-code or no-code, making it accessible to manual testers. |
| Community & Support | Relies on community forums (e.g., Stack Overflow) and documentation. | Offers dedicated, professional support with SLAs. |
| Flexibility & Customisation | Highly customisable; can be tailored to any specific need. | More rigid; you’re often limited to the features provided by the vendor. |
| Speed of Implementation | Can be slower to set up a comprehensive framework from scratch. | Faster to get started with out-of-the-box features and integrations. |

Ultimately, this table shows that the “best” option is the one that best fits your team’s DNA and your project’s constraints.

How to Make a Smart Choice

Don’t just pick a tool because it’s popular or has a flashy feature. That’s a recipe for failure. Your decision needs to be a calculated one, based on a solid evaluation of your team’s reality.

Before you commit, run every potential tool through this checklist:

- Your Team’s Skillset: Are your testers comfortable writing code, or do you need a low-code option that empowers everyone to contribute? Be honest about this.

- Your Application’s Technology: This is a hard-and-fast rule. The tool must work seamlessly with your tech stack, whether it’s React, Angular, Java, or something else. A mismatch here is a complete dead end.

- CI/CD Integration: How well does it play with your existing pipeline? Whether you use Jenkins, GitLab CI, or GitHub Actions, your automation tool needs to plug in effortlessly to enable true continuous testing.

- Scalability and Maintenance: Think long-term. How much effort will it take to maintain your test scripts a year from now? A good tool and framework should make your tests reusable and easy to update as your app evolves. Understanding how this fits into the wider delivery process is key, as we discuss in our guide on automated software deployment.
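One way to keep the decision a calculated one is a weighted decision matrix over the four criteria above. In this sketch the weights, the 1 to 5 ratings and the names “Tool A”, “Tool B” and “Tool C” are all placeholders, not assessments of any real product. Tech-stack compatibility is treated as a hard gate, reflecting the point that a mismatch is a dead end no matter how well a tool scores elsewhere.

```python
# Placeholder weights: how much each criterion matters to your team (they sum to 1.0).
WEIGHTS = {"team_skills": 0.3, "tech_stack": 0.3, "ci_cd": 0.2, "maintenance": 0.2}
MIN_STACK_FIT = 3  # a tech-stack rating below this disqualifies the tool outright

# Placeholder 1-5 ratings for three unnamed candidate tools.
RATINGS = {
    "Tool A": {"team_skills": 2, "tech_stack": 5, "ci_cd": 5, "maintenance": 4},
    "Tool B": {"team_skills": 5, "tech_stack": 4, "ci_cd": 3, "maintenance": 2},
    "Tool C": {"team_skills": 5, "tech_stack": 1, "ci_cd": 5, "maintenance": 5},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of the ratings, or 0.0 if the tool fails the tech-stack gate."""
    if ratings["tech_stack"] < MIN_STACK_FIT:
        return 0.0
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)


def rank_tools(all_ratings: dict[str, dict[str, int]]) -> list[str]:
    """Tool names ordered from best to worst weighted score."""
    return sorted(all_ratings, key=lambda tool: weighted_score(all_ratings[tool]), reverse=True)


for tool in rank_tools(RATINGS):
    print(f"{tool}: {weighted_score(RATINGS[tool]):.2f}")
```

Note that Tool C would have scored well on the other criteria alone; the gate is what stops a popular feature set from outweighing a stack it cannot test.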

I’ve seen teams make this mistake time and again: they fall in love with a tool for one specific feature and ignore the bigger picture. The tool is just one piece of the puzzle. The real key to long-term success is a solid test automation framework, whether you build it yourself or buy it. A good framework gives you structure, promotes code reuse, and keeps maintenance from spiralling out of control.

By taking a methodical approach and weighing these factors, you can cut through the marketing noise and choose a tool that truly empowers your team and aligns with your goals.

Weaving Automation into Your DevOps Pipeline

Your test automation plan really comes alive when it’s no longer a separate, siloed task. The real magic happens when you weave it directly into your delivery pipeline, making it a fundamental part of your DevOps and CI/CD workflow. This is where you’ll see those significant gains in speed and quality.

The whole point is to create a seamless, rapid feedback loop. Every single time a developer commits new code, your CI server (whether that’s Jenkins, GitLab CI, or GitHub Actions) should automatically kick off a relevant set of tests. This immediate validation is the heart of shifting left: you catch bugs moments after they’re created, not weeks down the line in a manual QA phase.

But let’s be realistic. You can’t run your entire, massive regression suite on every single commit. That would grind development to a halt. Effective test automation planning is all about being strategic and creating different test suites for different stages of the pipeline.

Matching Test Suites to Pipeline Stages

Think of your pipeline as a series of quality gates. Each gate needs a different level of scrutiny, and you have to strike a balance between speed and thoroughness.

- The Smoke Test Suite (Runs on Every Commit): This is your first line of defence. It’s a tiny, lightning-fast suite of tests (think 5-10 minutes, tops) that runs every time code is pushed. Its sole purpose is to verify that the absolute most critical parts of the application haven’t fallen over. Can users still log in? Are the core API endpoints responding? A failure here should immediately block the bad code from moving any further.

- The Full Regression Suite (Runs on Nightly Builds): This is the big one. It’s the comprehensive suite covering a wide swathe of functionality. Because it’s more time-consuming, it makes sense to run it overnight on the main development branch. This gives you confidence that new features haven’t accidentally broken something old, providing a stable, trusted baseline for the team to start with the next morning.

This tiered strategy gives developers the fast feedback they need to stay productive, without ever sacrificing the deep, rigorous testing that ensures quality. To get this right, you need a well-thought-out structure, much like what you’d find in a complete DevOps implementation roadmap.
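The tiered strategy boils down to a selection rule: each pipeline stage picks tests by tag, and the commit stage must stay inside its time budget. This is a framework-agnostic sketch; the `SuiteEntry` class, the `smoke` tag, the stage names and the per-test duration estimates are assumptions, and the 10-minute budget is the upper end of the range mentioned above.

```python
from dataclasses import dataclass

SMOKE_BUDGET_SECONDS = 10 * 60  # upper end of the "5-10 minutes" guideline


@dataclass(frozen=True)
class SuiteEntry:
    """One automated test with its tags and an estimated run time (hypothetical)."""
    name: str
    tags: frozenset[str]
    est_seconds: int


def select_for_stage(tests: list[SuiteEntry], stage: str) -> list[SuiteEntry]:
    """Pick the tests a given pipeline stage should run."""
    if stage == "commit":
        chosen = [t for t in tests if "smoke" in t.tags]
        total = sum(t.est_seconds for t in chosen)
        # Fail loudly if the smoke suite creeps past its budget,
        # instead of silently slowing down every commit.
        if total > SMOKE_BUDGET_SECONDS:
            raise RuntimeError(f"smoke suite needs {total}s, budget is {SMOKE_BUDGET_SECONDS}s")
        return chosen
    if stage == "nightly":
        return list(tests)  # full regression suite
    raise ValueError(f"unknown stage: {stage!r}")


suite = [
    SuiteEntry("login works", frozenset({"smoke", "regression"}), 40),
    SuiteEntry("core API responds", frozenset({"smoke", "regression"}), 20),
    SuiteEntry("invoice export formats", frozenset({"regression"}), 600),
]
print([t.name for t in select_for_stage(suite, "commit")])  # ['login works', 'core API responds']
print(len(select_for_stage(suite, "nightly")))              # 3
```

In a pytest-based suite, the same idea is usually expressed with custom markers, so the commit job runs `pytest -m smoke` while the nightly job runs everything.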

A truly mature CI/CD pipeline treats tests as first-class citizens. A broken test should be seen as just as urgent as broken production code. The moment your team starts ignoring test failures, the entire value of your automation investment evaporates.

Taming Your Environments and Data for Rock-Solid Reliability

I’ve seen it countless times: an automated test fails in the pipeline, and it has nothing to do with a bug in the code. The real culprit? A flaky test environment or inconsistent data. These “flaky” tests are a nightmare: they destroy trust in the automation and waste precious developer time investigating non-issues.

To get ahead of this, your test automation plan absolutely must include a strategy for managing these dependencies.

- Environment Provisioning: You need clean, consistent test environments for every single run. The best way to achieve this is with infrastructure-as-code (IaC) tools. Using something like Terraform or Ansible lets you programmatically spin up and tear down identical environments on demand.

- Test Data Management: You also need to automate how you set up and clean up your test data. This ensures every test starts from a known, reliable state. No more failures because of corrupted data left over from a previous run.
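Automated setup and cleanup usually takes the form of a fixture that creates known data before a test and destroys it afterwards, whatever the outcome. The sketch below uses Python’s built-in `sqlite3` with an in-memory database as a stand-in for a real test database; the `users` table, the seed rows and `count_locked_users` are hypothetical.

```python
import sqlite3
from contextlib import contextmanager

# Hypothetical seed data; a real suite would load its own fixtures.
SEED_USERS = [("alice@example.com", "active"), ("bob@example.com", "locked")]


@contextmanager
def known_state_db():
    """Yield a fresh in-memory database seeded with known rows, then discard it."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (email TEXT PRIMARY KEY, status TEXT NOT NULL)")
        conn.executemany("INSERT INTO users VALUES (?, ?)", SEED_USERS)
        conn.commit()
        yield conn
    finally:
        # Teardown runs even if the test fails, so no state leaks into the next run.
        conn.close()


def count_locked_users(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM users WHERE status = 'locked'").fetchone()[0]


# First "test" mutates the data...
with known_state_db() as db:
    db.execute("UPDATE users SET status = 'locked'")
    assert count_locked_users(db) == 2

# ...but the next one still starts from the known seed state.
with known_state_db() as db:
    assert count_locked_users(db) == 1
```

In pytest, the same pattern is typically written as a fixture that `yield`s the connection, with the cleanup code placed after the `yield`.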

By baking these practices into your process, you stop just writing scripts and start building a robust, automated quality assurance machine. This deep integration is what elevates your test automation from a simple checking tool into a strategic asset that helps you deliver better software, faster and safer.

Measuring Success For Long-Term Value

It’s a common misconception to see test automation as a one-and-done project. The reality? It’s an ongoing commitment to quality. If you don’t build a way to measure its effectiveness right into your test automation planning, you’re just throwing money at a problem without knowing if it’s working.

Without solid metrics, your automation efforts are just another line item in the budget. To prove real value, you need to show how automation impacts the bottom line. That means looking far beyond simple pass/fail rates and connecting your testing directly to business outcomes and team efficiency.

Key Metrics That Actually Matter

I’ve learned that you need a balanced scorecard to tell the full story. You want to track metrics that show tangible improvements in speed, quality, and cost. Here are a few of the most impactful ones I always recommend keeping an eye on:

- Test Execution Time: How long does your full regression suite take to run? The goal isn’t just to see this time go down; it’s to keep it stable even as your test suite grows. That’s a true sign of efficiency.

- Defect Detection Rate: This is your core quality metric. You need to know how many bugs your automated tests are catching versus those found manually or, even worse, by customers in production. A rising rate here is a very good thing.

- Reduced Manual Testing Effort: Put a number on the hours you’re saving. For example, if a regression cycle used to take two testers three full eight-hour days (48 person-hours), and automation now handles 80% of that, you’ve just freed up nearly 40 hours (38.4, to be exact) of skilled engineering time.

- Mean Time to Resolution (MTTR): Once a test finds a bug, how fast is it fixed? A low MTTR means your automated tests are giving developers clear, actionable feedback they can use immediately.
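The arithmetic behind these metrics is simple enough to script straight from your test and ticket data. This sketch assumes you can export defect counts by where they were found, plus a (detected, resolved) timestamp pair per bug; the function names are hypothetical, the defect counts and dates are invented, and the 2 testers × 3 days × 8 hours × 80% inputs reproduce the example above.

```python
from datetime import datetime, timedelta


def manual_hours_saved(testers: int, days: float, hours_per_day: float, automated_share: float) -> float:
    """Person-hours per regression cycle now handled by automation."""
    return testers * days * hours_per_day * automated_share


def defect_detection_rate(by_automation: int, by_manual: int, in_production: int) -> float:
    """Share of all defects found that the automated suite caught."""
    total = by_automation + by_manual + in_production
    return by_automation / total if total else 0.0


def mean_time_to_resolution(bugs: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from a test flagging a bug (detected) to its fix (resolved)."""
    if not bugs:
        raise ValueError("no resolved bugs to average")
    durations = [resolved - detected for detected, resolved in bugs]
    return sum(durations, timedelta()) / len(durations)


print(round(manual_hours_saved(2, 3, 8, 0.8), 1))  # 38.4
print(defect_detection_rate(30, 15, 5))            # 0.6
print(mean_time_to_resolution([
    (datetime(2024, 5, 6, 9, 0), datetime(2024, 5, 6, 13, 0)),   # fixed in 4 hours
    (datetime(2024, 5, 7, 10, 0), datetime(2024, 5, 7, 12, 0)),  # fixed in 2 hours
]))                                                # 3:00:00
```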

These metrics aren’t just numbers; they’re the building blocks for a powerful reporting dashboard that proves your worth.

Communicating Value With Dashboards

Your dashboard should be the single source of truth for everyone, from the engineers in the trenches to the executives in the boardroom. A well-designed dashboard doesn’t just show data; it tells a compelling story of progress over time.

You’ll want to tailor the views for your audience. Your technical teams will be interested in flaky test rates and execution speed. Business stakeholders, on the other hand, want to see the drop in production defects and the direct impact on release velocity. This kind of clear communication is a cornerstone of successful DevOps automation, as it closes the gap between the technical work and its business value.
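Flaky test rate is one of the technical numbers worth putting on the engineering view. One common working definition, used in this sketch, is a test that both passed and failed against the same commit: the code did not change, but the result did. The `(test_name, commit_sha, passed)` tuple format and the function names are assumptions about how you might export CI results.

```python
from collections import defaultdict


def flaky_tests(runs: list[tuple[str, str, bool]]) -> list[str]:
    """Names of tests that both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for name, sha, passed in runs:
        outcomes[(name, sha)].add(passed)
    # A set holding both True and False means mixed results for unchanged code.
    return sorted({name for (name, _sha), seen in outcomes.items() if len(seen) == 2})


def flaky_rate(runs: list[tuple[str, str, bool]]) -> float:
    """Flaky tests as a share of all distinct tests that ran."""
    all_tests = {name for name, _sha, _passed in runs}
    return len(flaky_tests(runs)) / len(all_tests) if all_tests else 0.0


runs = [
    ("test_login", "a1", True),
    ("test_login", "a1", False),  # same commit, different result: flaky
    ("test_cart", "a1", True),
    ("test_cart", "b2", False),   # code changed between runs: possibly a real regression
    ("test_search", "a1", True),
]
print(flaky_tests(runs))  # ['test_login']
```

`test_cart` is deliberately not counted: its failure came on a different commit, so it deserves a bug investigation rather than a “flaky” label.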

A great test automation plan is a living document. It should bake in regular reviews to assess metrics, refactor brittle scripts, and ruthlessly prune tests that no longer add value. An unmaintained test suite is a useless test suite.

Embracing The Future Of Maintenance

To get that long-term value, regular maintenance is non-negotiable. This is where AI-driven tools are really starting to change the game. By 2025, it’s predicted that 85% of enterprises will adopt cross-platform test automation tools, which could boost application quality by 40%. In India’s booming IT sector, AI-powered automation is quickly becoming the norm, with tools offering predictive testing and self-healing scripts.

Gartner even forecasts that over 80% of test automation frameworks will feature AI-based self-healing by 2025, creating far more resilient testing environments. This shift is crucial for companies aiming to stay agile.

This forward-thinking maintenance approach ensures your test suite doesn’t just keep up with development; it actively accelerates it, enabling faster, more reliable software delivery for years to come.

Answering the Tough Questions About Test Automation Planning

When you start talking about formalising a test automation strategy, the same questions always seem to pop up. It’s only natural. Teams are often wrestling with the same hurdles. Let’s walk through some of the most common queries I hear and get you some clear, experience-based answers to help you plan with confidence.

What’s the Biggest Mistake I Could Make?

Without a doubt, the single biggest pitfall is chasing 100% automation coverage without a crystal-clear scope. I’ve seen it happen time and again. It almost always results in a bloated, fragile test suite that’s a nightmare to maintain. In the end, it costs far more to keep alive than the value it ever delivers. It’s the classic quantity-over-quality trap.

Smart planning is all about prioritisation. You have to focus your efforts on tests that cover business-critical functions and high-risk areas. My advice? Start with the most stable, high-value user journeys. This lets you build momentum and show a real return on investment right out of the gate. A phased, strategic rollout will beat a “big bang” attempt to automate everything, every single time.

How Do I Get Management on Board?

Look, management thinks in terms of business metrics, not technical jargon. To get their buy-in, you have to frame your proposal around solid business outcomes. Stop talking about specific frameworks or scripts; start talking about the bottom line.

You need to present a plan that clearly shows the expected return on investment. For example, instead of talking about test scripts, talk about results:

- “We can cut down the regression testing cycle from five days to just four hours.”

- “This will let us increase test coverage on our critical payment gateways by 70%.”

- “We’ll be able to decrease the time-to-market for new features by 20%.”

Use the data you gathered during your initial feasibility study. That’s your ammunition to build a business case that’s simply too compelling to ignore. A solid plan backed by clear, measurable objectives is your best weapon for securing that executive support.
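When the business case leans on return on investment, a break-even estimate is often the most persuasive single number: how many regression cycles it takes before the automation has paid for itself in saved person-hours. Every input in this sketch is hypothetical (300 hours to build the suite, a 40 person-hour manual cycle, 4 hours to triage automated results and 6 hours of script upkeep per cycle), and the `break_even_cycles` function is illustrative; plug in the figures from your own feasibility study.

```python
import math
from typing import Optional


def break_even_cycles(build_hours: float, manual_hours: float,
                      triage_hours: float, upkeep_hours: float) -> Optional[int]:
    """Regression cycles until saved person-hours cover the initial build effort.

    Returns None when automation never pays back (no net saving per cycle).
    """
    net_saving = manual_hours - triage_hours - upkeep_hours
    if net_saving <= 0:
        return None
    # Round up: a partial cycle of savings does not yet cover the investment.
    return math.ceil(build_hours / net_saving)


print(break_even_cycles(300, 40, 4, 6))  # 10
```

Counting upkeep explicitly keeps the estimate honest: a suite that needs more maintenance per cycle than it saves never breaks even, which is exactly the trap of the bloated, everything-automated suite.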

A bit of hard-won advice: nothing proves value like a pilot project. Pick one high-impact area, automate it, and then present the before-and-after numbers. The metrics will do the talking for you, making it a whole lot easier to get the budget for a broader rollout.

Should We Involve Our Manual Testers in Planning?

Absolutely. Sidelining your manual testing team is a massive, costly mistake. These folks have an incredible amount of domain knowledge. They understand user behaviour on a deep level and have an almost sixth sense for finding an application’s weakest points. Their insights are pure gold for figuring out which test cases are the best candidates for automation.

Bring them into the conversation right from the very beginning. A truly great test automation plan doesn’t just list tests; it also outlines a strategy for upskilling the manual QA team. When you empower them to contribute to the automation effort, whether by writing simple scripts or helping with maintenance, you strengthen the entire quality function and build a much more collaborative, effective culture.

Ready to build a cloud and DevOps foundation that supports world-class automation? Signiance Technologies provides the expert guidance and technical excellence to design and implement secure, scalable, and efficient pipelines. Transform your testing strategy by visiting us at https://signiance.com.