What Is EKS? Understanding Amazon's Kubernetes Service

Amazon Elastic Kubernetes Service (EKS) simplifies running Kubernetes on Amazon Web Services (AWS). Instead of managing the complexities of setting up and maintaining your own Kubernetes control plane, EKS handles it for you. This allows you to concentrate on deploying and managing your applications, not the underlying infrastructure. This managed service is increasingly important for businesses modernizing their applications and adopting cloud-native practices.

This managed approach offers several key advantages. It reduces the operational overhead and specialized expertise needed to run Kubernetes. It also integrates with other AWS services, simplifying storage, networking, and security, which is particularly helpful for teams already building on AWS infrastructure.

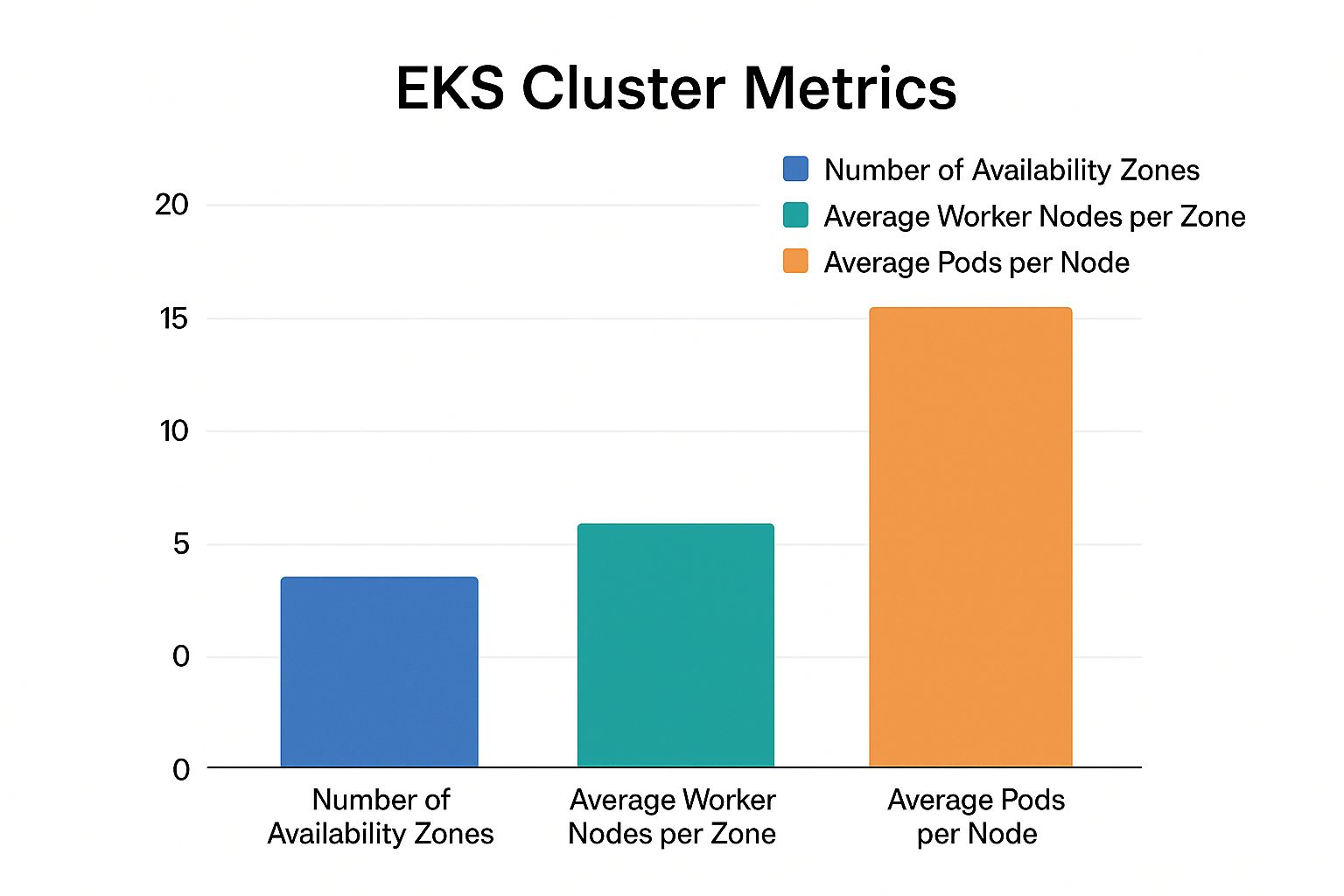

Amazon EKS is a managed container service that lets you run Kubernetes on AWS without operating your own control plane. Announced in 2017 and generally available since 2018, EKS has become a core AWS offering, providing a scalable and secure platform for containerized applications. It integrates with AWS services such as Amazon EC2 for worker nodes, Elastic Load Balancing (ELB) for load balancing, and Amazon EBS for persistent storage. At the time of writing, AWS infrastructure spanned roughly 100 Availability Zones (AZs) across 31 Regions, and EKS is available in most of them. Each cluster runs within a single Region and spreads across multiple AZs in that Region for high availability and fault tolerance. Learn more about EKS cluster metrics.

Why Choose EKS? Key Benefits for Your Business

EKS offers several compelling benefits for organizations:

- Reduced Operational Burden: EKS simplifies Kubernetes management, freeing your team to focus on development and innovation.
- Scalability and Reliability: By spreading clusters across multiple AZs, EKS provides the scalability and reliability needed for mission-critical applications.
- Cost Optimization: EKS lets you use AWS pricing options such as Spot Instances and Savings Plans, potentially lowering your overall infrastructure costs.
- Security and Compliance: EKS integrates with AWS security features such as IAM and VPC networking, helping your containerized applications meet stringent security and compliance requirements.
- Seamless Integration: EKS works with other AWS services, creating a unified and efficient cloud environment.

EKS empowers businesses to modernize their applications, embrace cloud-native technologies, and gain a competitive edge. Its managed nature, scalability, and security features make it an attractive option for organizations across diverse industries. With its continued evolution and integration with emerging technologies, EKS is positioned to become even more central to cloud strategies.

Inside EKS: Architecture That Powers Modern Applications

This section delves into the architecture of Amazon EKS. We'll explore the interplay between the components managed by AWS and the infrastructure you control. This knowledge is essential for building resilient applications and effective troubleshooting.

The Control Plane: Managed by AWS

The control plane forms the core of EKS: it is the central management hub for the entire Kubernetes cluster. AWS runs and maintains it, freeing you from its operational overhead. Components such as the API server, scheduler, controller manager, and etcd run across multiple Availability Zones within the cluster's Region; according to AWS documentation, each control plane has at least two API server instances and three etcd instances spread across three AZs.

This distribution provides resilience and fault tolerance. If one Availability Zone has problems, the control plane keeps serving requests from the others.

Worker Nodes: Your Domain

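While AWS manages the control plane, you manage the worker nodes: the Amazon EC2 instances where your application pods run. With self-managed nodes you control the operating system, security settings, and application dependencies. Managed node groups automate provisioning and updating those instances while still letting you choose instance types and scaling limits, and with AWS Fargate there are no nodes for you to manage at all.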

This flexibility allows you to tailor the environment to your specific application requirements. You can select the optimal instance types for your workloads and fine-tune their configuration for peak performance.

Interaction and Communication: How It All Works

The EKS control plane and your worker nodes communicate over TLS-encrypted connections through the Kubernetes API server. The kubelet on each node reports node and pod status and picks up the pods assigned to it, and this continuous reconciliation keeps your applications in their desired state.

For instance, when deploying a new application version, the control plane schedules its deployment onto available worker nodes and monitors its health. The control plane also monitors the health of your worker nodes and takes corrective action if necessary. Effective monitoring of your EKS cluster is essential for performance and stability. For more insights, explore best practices for Kubernetes monitoring.
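To make the desired-state idea concrete, here is a minimal sketch that builds a Kubernetes Deployment manifest in Python and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The name `web`, the `nginx:1.25` image, the resource requests, and the replica count are illustrative assumptions. Once applied, the scheduler places the three replicas on worker nodes with enough free capacity, and the Deployment controller replaces any that fail.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # the control plane keeps this many pods running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                            # Requests let the scheduler pick nodes with enough room
                            "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
    # Save the output as deployment.json, then run: kubectl apply -f deployment.json
    print(json.dumps(manifest, indent=2))
```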

Key Components of EKS Architecture

To provide a more comprehensive view, the following table details the core components of EKS architecture. It describes their management, primary functions, and availability.

EKS Architecture Components: This table outlines the key components of EKS architecture and their responsibilities.

| Component | Managed By | Primary Function | Availability |
|---|---|---|---|
| Control Plane | AWS | Manages the Kubernetes cluster | Multiple Availability Zones |
| Worker Nodes | You | Run your containerized applications | Configurable |
| Kubernetes API | AWS | Facilitates communication between components | Highly Available |
| Networking | You | Connects your worker nodes and the control plane | Configurable |
| Security Groups | You | Control network traffic to your worker nodes | Configurable |
| IAM Roles | You | Manage access permissions to AWS resources | Configurable |

This table clarifies the distinct roles and interactions within the EKS architecture. Understanding these components allows for efficient leveraging of EKS to build and manage robust, scalable, and resilient applications.

By grasping these fundamentals, you can optimize performance, troubleshoot effectively, and confidently harness the power of Kubernetes within the AWS environment.

EKS vs. Alternatives: Choosing Your Container Strategy

The infographic above shows a typical EKS cluster layout. In this example, resources are spread across three Availability Zones, a common setup for redundancy, with about five worker nodes per AZ running the actual workloads and roughly 20 pods per node (pods are the smallest deployable units in Kubernetes). The real pod limit per node depends on the instance type and network configuration. Distributing nodes this way supports high availability and efficient resource utilization.
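The infographic's figures multiply out to a rough cluster capacity, sketched below. The per-node pod count is not fixed, though: with the default Amazon VPC CNI plugin (and without prefix delegation), every pod receives a VPC IP address, so AWS documents the maximum pods per node as ENIs x (IPv4 addresses per ENI - 1) + 2. The m5.large values used here (3 ENIs with 10 IPv4 addresses each) should be checked against the EC2 documentation for whatever instance type you actually run.

```python
def cluster_pod_capacity(azs: int, nodes_per_az: int, pods_per_node: int) -> int:
    """Total pods the cluster can host if every node runs at its pod limit."""
    return azs * nodes_per_az * pods_per_node


def vpc_cni_max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Max pods per node with the Amazon VPC CNI (no prefix delegation).

    Each ENI's primary IP belongs to the node itself, hence the "- 1";
    the "+ 2" covers host-network pods such as aws-node and kube-proxy.
    """
    return enis * (ipv4_per_eni - 1) + 2


if __name__ == "__main__":
    # Figures from the infographic: 3 AZs, ~5 nodes per AZ, ~20 pods per node
    print(cluster_pod_capacity(3, 5, 20))  # 300
    # m5.large: 3 ENIs with 10 IPv4 addresses each
    print(vpc_cni_max_pods(3, 10))  # 29
```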

Comparing EKS With Other Orchestration Solutions

When choosing a container orchestration platform, evaluate the options against your specific needs. This section compares Amazon EKS with other popular choices, including self-managed Kubernetes, Amazon ECS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). The following table summarizes the key features and trade-offs of each platform to help you select the best fit for your containerized applications.

Comparison of Container Orchestration Solutions: A side-by-side comparison of EKS with other popular container management options.

| Feature | Amazon EKS | Self-Managed Kubernetes | Amazon ECS | Google GKE | Azure AKS |
|---|---|---|---|---|---|
| Kubernetes-Based | Yes (managed) | Yes (self-managed) | No (AWS-native orchestrator) | Yes (managed) | Yes (managed) |
| Control Plane | AWS Managed | User Managed | AWS Managed | Google Managed | Azure Managed |
| Operational Overhead | Low | High | Low | Low | Low |
| Scalability | High | Configurable | High | High | High |
| AWS Integration | Native | Requires Configuration | Native | Limited | Limited |
| Cost Model | Pay-as-you-go | Infrastructure Costs | Pay-as-you-go | Pay-as-you-go | Pay-as-you-go |

As the table shows, each solution has its own trade-offs. Self-managing Kubernetes provides granular control but increases operational overhead. Amazon ECS offers a simpler managed experience but doesn't utilize Kubernetes. GKE and AKS provide managed Kubernetes on other cloud platforms, which may limit AWS integration.

When EKS Shines: Ideal Use Cases

EKS is a compelling choice in various scenarios. If your team has limited Kubernetes experience, EKS simplifies operations considerably by managing the control plane. For organizations heavily invested in the AWS ecosystem, EKS integrates seamlessly with other AWS services, streamlining tasks like storage, networking, and security.

EKS is also a strong contender when high availability and scalability are paramount. Its ability to distribute workloads across multiple Availability Zones makes it well suited to mission-critical applications. This is particularly relevant for organizations in India, where AWS operates the Asia Pacific (Mumbai) and Asia Pacific (Hyderabad) Regions. EKS's emphasis on security and compliance also aligns with the requirements of regulated sectors in India.

Mastering EKS Costs: Strategies That Actually Work

Managing costs is a critical part of any successful cloud operation, especially with services like Amazon EKS. This section explores practical strategies for optimizing your EKS spending without sacrificing performance. These insights are drawn from the experiences of cloud economists and DevOps experts working with organizations in India.

Right-Sizing Your Cluster Resources

One of the most effective ways to control EKS costs is through right-sizing. This means choosing the correct instance types and number of worker nodes to match your workload demands. Over-provisioning results in wasted resources and higher expenses, while under-provisioning can negatively impact performance.

Regularly evaluate your cluster's resource utilization and adjust accordingly. For instance, if CPU utilization consistently stays low, consider moving to smaller instance types or reducing the number of nodes; if your application hits performance bottlenecks, scaling up may be necessary. Striking the right balance keeps costs down while maintaining application stability.
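A minimal sketch of that evaluation step, assuming you have already exported CPU utilization samples (as percentages of node capacity) from your monitoring tool. The 40% and 80% thresholds and the choice of the 95th percentile are illustrative assumptions rather than AWS guidance; tune them to your workload.

```python
import math


def p95(samples: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def rightsizing_advice(cpu_samples: list[float], low: float = 40.0, high: float = 80.0) -> str:
    """Suggest a sizing action from CPU utilization samples (0-100%).

    Using p95 rather than the mean avoids downsizing a cluster whose
    average is low but whose peaks are close to saturation.
    """
    peak = p95(cpu_samples)
    if peak < low:
        return "downsize"  # fewer or smaller nodes
    if peak > high:
        return "scale up"  # more or larger nodes
    return "keep"


if __name__ == "__main__":
    print(rightsizing_advice([12, 18, 22, 25, 30, 15, 20]))  # downsize
```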

Leveraging Spot Instances for Cost Savings

EC2 Spot Instances offer a powerful cost-saving opportunity. They provide access to unused EC2 capacity at significantly reduced prices. Because EC2 can reclaim Spot capacity with only a two-minute interruption notice, Spot Instances are best suited to fault-tolerant workloads such as batch processing and big data jobs.

Using Spot Instances strategically for fault-tolerant or non-critical workloads can significantly reduce your EKS costs; running testing and development environments on Spot capacity is a common starting point. Alongside Spot, monitor inter-AZ data transfer costs, which accumulate when pods in different Availability Zones exchange large volumes of traffic. For more in-depth information, explore the official documentation on EKS cost optimization.
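As a sketch, a Spot-backed managed node group can be requested with the AWS CLI's `aws eks create-nodegroup` command and its `--capacity-type SPOT` option. The helper below only assembles and prints the command so it can be reviewed; the cluster name, node group name, role ARN, subnet IDs, and instance types are placeholders. Listing several similarly sized instance types gives EC2 more Spot capacity pools to draw from, which lowers the chance that all nodes are interrupted at once.

```python
import shlex


def spot_nodegroup_command(cluster: str, nodegroup: str, node_role_arn: str,
                           subnets: list[str], instance_types: list[str],
                           min_size: int, max_size: int, desired: int) -> list[str]:
    """Build (but do not run) an `aws eks create-nodegroup` call for Spot capacity."""
    if not (min_size <= desired <= max_size):
        raise ValueError("desired size must lie between min and max")
    return [
        "aws", "eks", "create-nodegroup",
        "--cluster-name", cluster,
        "--nodegroup-name", nodegroup,
        "--node-role", node_role_arn,
        "--subnets", *subnets,
        "--instance-types", *instance_types,  # diversify for Spot availability
        "--capacity-type", "SPOT",
        "--scaling-config", f"minSize={min_size},maxSize={max_size},desiredSize={desired}",
    ]


if __name__ == "__main__":
    cmd = spot_nodegroup_command(
        cluster="dev-cluster",  # placeholder names and IDs throughout
        nodegroup="dev-spot",
        node_role_arn="arn:aws:iam::111122223333:role/ExampleNodeRole",
        subnets=["subnet-aaaa1111", "subnet-bbbb2222"],
        instance_types=["m5.large", "m5a.large", "m6i.large"],
        min_size=0, max_size=5, desired=2,
    )
    print(shlex.join(cmd))
```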

Implementing Auto-Scaling for Dynamic Workloads

Auto-scaling dynamically adjusts the number of worker nodes in response to real-time demand; on EKS this is commonly handled by the Kubernetes Cluster Autoscaler or Karpenter. Your cluster scales up during peak periods to maintain performance and scales down during off-peak hours to reduce costs.

By adapting to workload fluctuations, auto-scaling prevents sustained over-provisioning. You still pay for every node that is running, but the node count tracks what your applications actually request, which improves resource utilization and cost efficiency.
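Real autoscalers such as the Cluster Autoscaler react to pods that cannot be scheduled rather than applying one formula, but the underlying arithmetic can be sketched as below. This is a simplification that assumes identical nodes and considers only CPU requests; the 1930m allocatable CPU per node and the 2-10 node bounds are hypothetical values.

```python
import math


def nodes_needed(pod_cpu_requests_m: list[int], allocatable_cpu_m: int,
                 min_nodes: int, max_nodes: int) -> int:
    """Estimate node count from pod CPU requests (millicores), clamped to bounds.

    Simplified: ignores memory, per-node pod limits, and bin-packing
    fragmentation, all of which real autoscalers take into account.
    """
    total = sum(pod_cpu_requests_m)
    raw = math.ceil(total / allocatable_cpu_m) if total else 0
    return max(min_nodes, min(max_nodes, raw))


if __name__ == "__main__":
    peak = [500] * 24   # hypothetical: 24 pods requesting 500m during peak hours
    quiet = [500] * 4   # hypothetical: 4 pods overnight
    print(nodes_needed(peak, 1930, 2, 10))   # 7
    print(nodes_needed(quiet, 1930, 2, 10))  # 2 (the configured floor)
```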

Monitoring and Forecasting for Effective Budgeting

Robust monitoring and forecasting are essential for tracking and predicting your EKS costs. Tools such as AWS Cost Explorer and AWS Budgets show what drives your spending, project future expenses, and alert you when costs exceed a threshold, so you can identify areas for optimization and budget accordingly. Combined with the strategies above, continuous monitoring lets you control EKS spending while preserving the performance and reliability your applications demand.
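A simple way to start forecasting is to fit a straight line to past monthly spend (for example, exported from AWS Cost Explorer) and project it forward. The sketch below implements ordinary least squares directly; the monthly figures are hypothetical, and a linear trend is an assumption that will miss seasonal effects such as festival-season traffic peaks.

```python
def linear_trend(values: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept for values indexed 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two months of history")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def forecast(values: list[float], months_ahead: int) -> list[float]:
    """Extend the fitted trend line `months_ahead` months past the history."""
    slope, intercept = linear_trend(values)
    n = len(values)
    return [round(intercept + slope * x, 2) for x in range(n, n + months_ahead)]


if __name__ == "__main__":
    # Hypothetical EKS spend (USD) for the last six months
    past = [1200.0, 1260.0, 1310.0, 1390.0, 1440.0, 1500.0]
    print(forecast(past, 3))
```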

From Zero to EKS: Setting Up Your First Cluster

Creating your first Amazon EKS (Elastic Kubernetes Service) cluster might seem like a complex undertaking. However, with a structured approach, it's a very achievable process. This guide offers practical advice, leveraging the knowledge of engineers experienced in deploying numerous EKS clusters. We'll explore different methods for cluster creation, ranging from the AWS Management Console and the AWS CLI to Infrastructure-as-Code (IaC) tools like Terraform and the AWS Cloud Development Kit (CDK).

Choosing Your Deployment Method

There are several ways to launch an EKS cluster, each with its own advantages and disadvantages. The AWS Management Console offers a user-friendly interface, well suited to initial exploration and learning the ropes. The AWS CLI provides a scriptable, command-line alternative for automated and repeatable deployments. For production, IaC tools like Terraform and AWS CDK are generally preferred, because they bring version control, automation, and reproducible deployments to your infrastructure.

- AWS Management Console: Ideal for beginners and initial setup, providing a visual interface for cluster creation.
- AWS CLI: Allows for scripting and automation, making it suitable for repetitive tasks and integration with other command-line tools.
- Terraform: A popular IaC tool that manages infrastructure across multiple cloud providers, enabling consistent and repeatable deployments.
- AWS CDK: Lets you define cloud infrastructure using familiar programming languages such as Python and JavaScript, simplifying infrastructure management through code.

Selecting the right method depends on your team's skills, automation needs, and overall infrastructure management strategy. The AWS Console is a great starting point, but production environments typically benefit from the reliability and consistency of IaC.
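For the AWS CLI route, the two core commands are `aws eks create-cluster` and `aws eks update-kubeconfig`, which writes kubectl credentials for the new cluster. The Python helper below only assembles and prints them so the arguments are easy to review or keep under version control; the cluster name, Region, Kubernetes version, role ARN, and subnet and security group IDs are placeholders, and the cluster IAM role and VPC subnets must already exist. Pick a Kubernetes version that EKS currently supports.

```python
import shlex


def eks_setup_commands(name: str, region: str, version: str, role_arn: str,
                       subnet_ids: list[str], security_group_ids: list[str]) -> list[list[str]]:
    """Return the AWS CLI calls to create an EKS cluster and configure kubectl."""
    if len(subnet_ids) < 2:
        # EKS needs subnets in at least two AZs; this sketch only checks the count
        raise ValueError("provide subnets in at least two Availability Zones")
    vpc_config = "subnetIds=" + ",".join(subnet_ids)
    if security_group_ids:
        vpc_config += ",securityGroupIds=" + ",".join(security_group_ids)
    create = [
        "aws", "eks", "create-cluster",
        "--region", region,
        "--name", name,
        "--kubernetes-version", version,
        "--role-arn", role_arn,
        "--resources-vpc-config", vpc_config,
    ]
    kubeconfig = ["aws", "eks", "update-kubeconfig", "--region", region, "--name", name]
    return [create, kubeconfig]


if __name__ == "__main__":
    for cmd in eks_setup_commands(
        name="demo-cluster",  # placeholders throughout
        region="ap-south-1",
        version="1.30",
        role_arn="arn:aws:iam::111122223333:role/ExampleEKSClusterRole",
        subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222"],
        security_group_ids=["sg-cccc3333"],
    ):
        print(shlex.join(cmd))
```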

Key Configuration Decisions

After selecting your deployment method, several key configuration decisions await. Networking setup is critical, dictating how your worker nodes interact with the control plane and the external world. Security groups function as virtual firewalls, controlling traffic flow to your worker nodes. IAM role configuration governs access permissions, ensuring components have the required privileges to interact with AWS resources. Lastly, worker node management involves setting up and maintaining the instances that host your applications.

Careful consideration of these configuration elements is essential. A well-designed network ensures optimal performance and security. Implementing robust security groups is fundamental to protecting your cluster. Correctly configuring IAM roles mitigates security risks while granting necessary permissions. Effective worker node management contributes to application stability and efficient resource utilization.
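IAM is where many first clusters stall, so here is a sketch of the two roles a typical setup needs: a cluster role assumed by the EKS service, and a node role assumed by the EC2 worker instances. The trust principals and AWS managed policy names are the ones commonly used for EKS; the role names are yours to choose, and the script only prints the trust policies as JSON for use with `aws iam create-role --assume-role-policy-document`.

```python
import json


def trust_policy(service_principal: str) -> dict:
    """IAM trust policy allowing an AWS service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Trust policy plus the AWS managed policies commonly attached to each role
CLUSTER_ROLE = {
    "trust": trust_policy("eks.amazonaws.com"),
    "policies": ["arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"],
}
NODE_ROLE = {
    "trust": trust_policy("ec2.amazonaws.com"),
    "policies": [
        "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy",
        "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy",
        "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly",
    ],
}

if __name__ == "__main__":
    for label, role in (("cluster", CLUSTER_ROLE), ("node", NODE_ROLE)):
        print(f"# {label} role trust policy")
        print(json.dumps(role["trust"], indent=2))
        for arn in role["policies"]:
            # <your-...-role> is a placeholder for the role name you created
            print(f"# aws iam attach-role-policy --role-name <your-{label}-role> --policy-arn {arn}")
```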

Operational Tasks: Beyond Cluster Creation

Once your EKS cluster is running, several operational tasks keep it healthy. Scaling the cluster up or down with demand is vital for performance and cost management. Keeping up with Kubernetes releases maintains security and gives you access to new features; EKS upgrades the control plane one minor version at a time and supports each version for a limited period, so plan upgrades regularly. Monitoring provides valuable insight into the health and performance of your cluster, and effective troubleshooting skills let you resolve emerging issues quickly.

By mastering these operational tasks, you can keep your EKS cluster robust, reliable, and secure. Each one plays a significant role in maintaining a healthy, efficient Kubernetes environment, and understanding them, together with the right tools, lets you manage your EKS clusters confidently and support your applications effectively.
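Because EKS moves the control plane forward one Kubernetes minor version per upgrade, jumping several versions means a sequence of upgrades, typically updating node groups and add-ons after each step. A minimal sketch that plans the sequence and prints the matching `aws eks update-cluster-version` calls; the cluster name `my-cluster` and the version numbers are placeholders.

```python
def upgrade_path(current: str, target: str) -> list[str]:
    """List each minor version to step through, e.g. 1.28 -> 1.30 gives ['1.29', '1.30']."""
    cur_major, cur_minor = (int(p) for p in current.split("."))
    tgt_major, tgt_minor = (int(p) for p in target.split("."))
    if cur_major != tgt_major or tgt_minor < cur_minor:
        raise ValueError("this sketch only plans forward upgrades within one major version")
    return [f"{cur_major}.{m}" for m in range(cur_minor + 1, tgt_minor + 1)]


if __name__ == "__main__":
    for version in upgrade_path("1.28", "1.30"):
        # Upgrade the control plane, then nodes and add-ons, before the next step
        print(f"aws eks update-cluster-version --name my-cluster --kubernetes-version {version}")
```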

Embracing Best Practices

Implementing best practices is essential throughout the entire lifecycle of your EKS cluster, from the initial setup to ongoing operations. This involves adhering to AWS guidelines, drawing on the collective experience of the Kubernetes community, and continually optimizing your cluster based on real-world performance data.

By adopting these principles, you can maximize the benefits of EKS and build a high-performing, secure, and cost-effective Kubernetes environment that effectively supports your organization's goals.

EKS in India: Adoption Trends and Regional Insights

The Indian cloud market is growing rapidly, creating exciting opportunities and unique challenges for businesses adopting Amazon EKS. This section explores how Indian companies are modernizing their IT infrastructure with EKS, focusing on sector-specific applications and regional factors.

Sector-Specific EKS Adoption in India

Indian businesses across various sectors are finding EKS valuable. In the financial sector, EKS helps companies meet stringent security and compliance regulations while rapidly deploying new financial services. E-commerce companies leverage EKS's scalability to handle traffic spikes during peak shopping seasons. EKS also supports the healthcare sector by enabling the secure and reliable management of sensitive patient data and facilitating innovative application development. India's technology sector, a key economic driver, uses EKS to rapidly build and deploy software solutions.

This diverse adoption demonstrates EKS's versatility and adaptability to the specific needs of different industries. It underscores the growing importance of containerization and cloud-native technologies in India.

Addressing Region-Specific Considerations

Several key factors influence EKS adoption for organizations operating in India. Data residency requirements are often paramount, requiring sensitive data to be stored within India. AWS addresses this need directly with two Regions in the country, Asia Pacific (Mumbai) and Asia Pacific (Hyderabad), each with multiple Availability Zones.

Cost optimization is another crucial factor: EKS lets businesses manage costs efficiently through AWS pricing models and features such as Spot Instances. The availability of skilled cloud talent in India further fuels EKS adoption by providing the expertise needed to implement and manage EKS effectively. AWS continues to invest in its Indian infrastructure, which is likely to support further EKS deployments, particularly in sectors like finance and healthcare that demand robust security and compliance. EKS also integrates with other AWS services that are increasingly popular in India for their scalability and reliability. For more detailed statistics on AWS and EKS, visit CloudZero.
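Data-residency rules are easier to enforce with an automated check. The sketch below parses an EKS cluster ARN (format `arn:aws:eks:<region>:<account-id>:cluster/<name>`) and tests whether its Region is one of the two Indian Regions, `ap-south-1` (Mumbai) and `ap-south-2` (Hyderabad). The account ID and cluster names are placeholders, and a real compliance control would also need to cover backups, logs, and any other services that store the data.

```python
INDIA_REGIONS = {"ap-south-1", "ap-south-2"}  # Mumbai, Hyderabad


def cluster_region(cluster_arn: str) -> str:
    """Extract the Region from an ARN like arn:aws:eks:ap-south-1:111122223333:cluster/demo."""
    parts = cluster_arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "eks":
        raise ValueError(f"not an EKS ARN: {cluster_arn}")
    return parts[3]


def is_india_resident(cluster_arn: str) -> bool:
    """True if the cluster lives in one of AWS's Indian Regions."""
    return cluster_region(cluster_arn) in INDIA_REGIONS


if __name__ == "__main__":
    for arn in (
        "arn:aws:eks:ap-south-1:111122223333:cluster/payments",
        "arn:aws:eks:us-east-1:111122223333:cluster/analytics",
    ):
        print(arn, "->", "OK" if is_india_resident(arn) else "outside India")
```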

Learning From Successful Implementations

Examining successful EKS implementations in India provides valuable insights. Several Indian enterprises have already adopted EKS, navigating regional challenges and reaping the benefits of containerization. Their experiences offer practical lessons for others.

These case studies often highlight effective architectural decisions, migration strategies, and operational best practices. They showcase how companies overcome obstacles related to data residency, cost management, and talent acquisition. By studying these examples, organizations can streamline their EKS journeys and avoid common pitfalls.

EKS and the Future of Cloud in India

As the Indian cloud market evolves, EKS is poised to play a larger role in shaping the future of cloud computing in the region. Its capacity to support modern application development, enhance scalability, and ensure security makes it a vital tool for businesses seeking a competitive edge.

For technical leaders in India, understanding the regional dynamics of EKS adoption is essential for effective cloud planning and strategy. By incorporating these regional insights, businesses can maximize EKS's value and leverage its potential to drive innovation and growth.

The Future of EKS: Where Container Technology Is Heading

The world of container technology, especially platforms like Amazon EKS, is constantly changing. This section explores emerging trends shaping the future of EKS and how these advancements might affect your container strategy.

Serverless Kubernetes and EKS

Serverless Kubernetes is rapidly gaining popularity. This approach removes the work of managing the underlying infrastructure, allowing developers to concentrate on deploying and running their applications. EKS supports it through AWS Fargate, which runs pods without any worker nodes for you to provision or patch. This simplifies operations and can improve cost efficiency, since Fargate bills for the vCPU and memory each pod requests rather than for whole instances.

This shift towards serverless Kubernetes greatly reduces the management burden. It allows for more efficient scaling based on demand, offering a compelling way to leverage the benefits of Kubernetes without the infrastructure management overhead.
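On EKS, Fargate is enabled through a Fargate profile: pods whose namespace (and, optionally, labels) match one of the profile's selectors run on Fargate instead of on your nodes. A sketch that assembles the `aws eks create-fargate-profile` call; the profile, cluster, namespace, role ARN, and subnet IDs are placeholders. The pod execution role must trust `eks-fargate-pods.amazonaws.com`, and Fargate pods run only in private subnets.

```python
import shlex


def fargate_profile_command(profile: str, cluster: str, pod_role_arn: str,
                            private_subnets: list[str], namespaces: list[str]) -> list[str]:
    """Build (but do not run) an `aws eks create-fargate-profile` call."""
    if not namespaces:
        raise ValueError("at least one namespace selector is required")
    # One selector per namespace; matching pods are scheduled onto Fargate
    selectors = [f"namespace={ns}" for ns in namespaces]
    return [
        "aws", "eks", "create-fargate-profile",
        "--fargate-profile-name", profile,
        "--cluster-name", cluster,
        "--pod-execution-role-arn", pod_role_arn,
        "--subnets", *private_subnets,
        "--selectors", *selectors,
    ]


if __name__ == "__main__":
    cmd = fargate_profile_command(
        profile="serverless-apps",  # placeholders throughout
        cluster="my-cluster",
        pod_role_arn="arn:aws:iam::111122223333:role/ExampleFargatePodRole",
        private_subnets=["subnet-cccc3333", "subnet-dddd4444"],
        namespaces=["serverless"],
    )
    print(shlex.join(cmd))
```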

GitOps and EKS Automation

GitOps, a set of practices using Git as the single source of truth for infrastructure and application deployments, is becoming increasingly important for managing Kubernetes clusters. Using Git for configuration management allows teams to automate deployments, rollbacks, and other operational tasks. This enhances reliability and reduces human error. EKS integrates well with GitOps tools, further streamlining workflows.

Tools like Argo CD and Flux CD enable automated deployment and management of applications on EKS based on changes in a Git repository. This automation is particularly valuable for businesses rapidly adopting cloud-native practices and requiring efficient workflows.
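In Argo CD, the link between a Git path and a cluster namespace is declared with an `Application` resource. A minimal sketch that builds one and prints it as JSON (which `kubectl apply -f` accepts, like YAML); it assumes Argo CD is installed in the `argocd` namespace, and the repository URL, path, and target namespace are hypothetical. With `syncPolicy.automated`, Argo CD applies new commits automatically, `prune` removes resources deleted from Git, and `selfHeal` reverts manual changes made directly in the cluster.

```python
import json


def argocd_application(name: str, repo_url: str, path: str, target_namespace: str,
                       revision: str = "main") -> dict:
    """Argo CD Application that keeps `target_namespace` in sync with a Git path."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},  # where Argo CD runs
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            "destination": {
                "server": "https://kubernetes.default.svc",  # the cluster Argo CD runs in
                "namespace": target_namespace,
            },
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argocd_application(
        "web", "https://github.com/example-org/platform-config.git", "apps/web", "web"
    )
    print(json.dumps(app, indent=2))
```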

Service Mesh and Enhanced Communication

Service mesh technologies are gaining traction for managing communication between microservices within a Kubernetes cluster. A service mesh offers features like traffic management, security, and observability, simplifying the operation of complex applications. EKS users can utilize service mesh solutions like Istio and Linkerd to enhance the resilience and security of their microservices.

This improved inter-service communication is crucial for businesses operating microservices architectures. It provides greater control, security, and monitoring capabilities, which are especially important for enterprise IT departments and cloud architects.
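Both meshes named above add their sidecar proxies through namespace metadata: Istio injects into namespaces labeled `istio-injection=enabled`, and Linkerd into namespaces annotated `linkerd.io/inject: enabled`. A small sketch that produces the matching Namespace manifest for either mesh; the namespace name is a placeholder, the mesh control plane must already be installed, and existing pods receive the sidecar only after they are restarted.

```python
import json


def meshed_namespace(name: str, mesh: str) -> dict:
    """Namespace manifest that opts new pods into a service mesh sidecar."""
    metadata: dict = {"name": name}
    if mesh == "istio":
        metadata["labels"] = {"istio-injection": "enabled"}
    elif mesh == "linkerd":
        metadata["annotations"] = {"linkerd.io/inject": "enabled"}
    else:
        raise ValueError(f"unsupported mesh: {mesh}")
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": metadata}


if __name__ == "__main__":
    print(json.dumps(meshed_namespace("payments", "istio"), indent=2))
```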

AI/ML and EKS Evolution

AI/ML workloads are increasingly deployed on Kubernetes, and EKS is no exception. Kubernetes' scalability and flexibility make it well suited to resource-intensive AI/ML tasks, and EKS continues to improve its support for these workloads with features such as GPU-enabled nodes and optimized scheduling, making it a compelling platform for organizations looking to harness AI/ML.
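On EKS, GPUs typically become schedulable once GPU nodes (for example, launched from the EKS-optimized accelerated AMI, which ships with NVIDIA drivers) run the NVIDIA device plugin; pods then request them as the extended resource `nvidia.com/gpu`. A minimal sketch of such a pod; the image name is a hypothetical placeholder, and GPUs are requested in whole units through `limits`.

```python
import json


def gpu_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Pod that asks the scheduler for whole GPUs via the nvidia.com/gpu resource."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",  # e.g. a one-off training or batch job
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources go in limits; the pod only lands on a
                    # node advertising enough free nvidia.com/gpu capacity
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    pod = gpu_pod("train-job", "example.com/ml/trainer:latest")
    print(json.dumps(pod, indent=2))
```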

This evolving landscape is constantly pushing the boundaries of container technology. By staying informed about these trends, organizations can make strategic decisions about their EKS deployments and ensure their cloud-native application development investments are future-proof.

Ready to maximize your cloud potential? Partner with Signiance Technologies for expert guidance on cloud architecture, DevOps automation, and managed cloud services tailored to your needs.